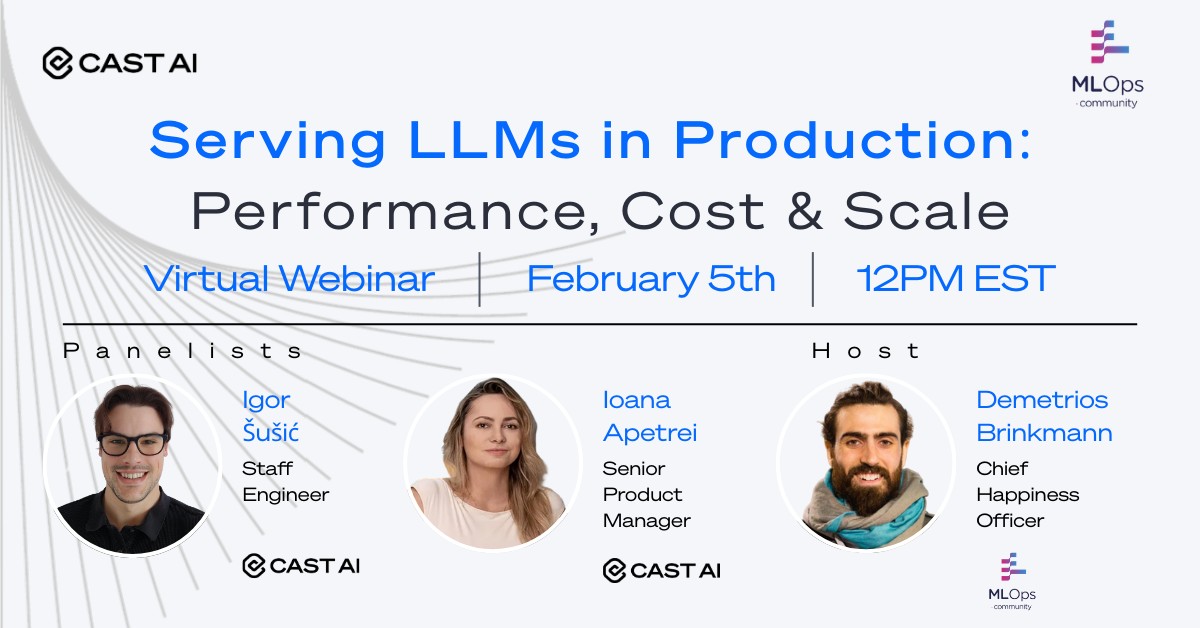

Experimenting with LLMs is easy. Running them reliably and cost-effectively in production is where things break.

Many AI teams never get past demos and proofs of concept. A smaller group is pushing real workloads to production and running into concrete challenges: infrastructure efficiency, runaway cloud costs, and reliability at scale.

This session is for engineers and platform teams moving beyond experimentation and building AI systems that actually hold up in production.

We’ll skip the hype and focus on the challenges that show up once traffic, latency, and cost matter:


Using concrete, real-world examples—including agentic and multi-model workloads—we’ll walk through patterns that teams are successfully running in production today.

You’ll learn:

If you’re ready to move past “it works on my machine” and build AI systems that are efficient, reliable, and production-ready, this session is for you.
