LLMs

November 21, 2024

Price per token is going down. Price per answer is going up.

# Token prices

# LLM

# ROI

Cost Per Token Is Down, But Cost Per Answer Is Up – Here’s Why

Demetrios Brinkmann

Please don’t be that guy. Please don’t let it get to me. I have so much other stuff to do.

Ahhhh Fuck!

As our expectations of LLMs and our use cases for them expand, the cost to us of using them (as well as the cost of training the models and operating the services) is actually going up, even as cost per token goes down.

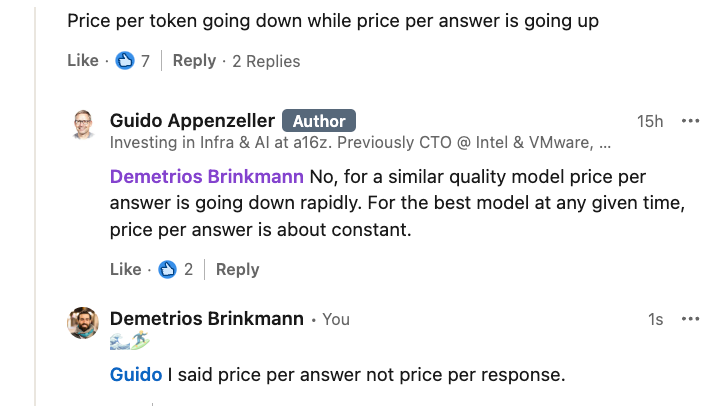

Yes, price per token output is rapidly falling. That does not mean that things are getting cheaper. Nobody in their right mind will argue price per token is going up or even staying constant.

But price per answer?

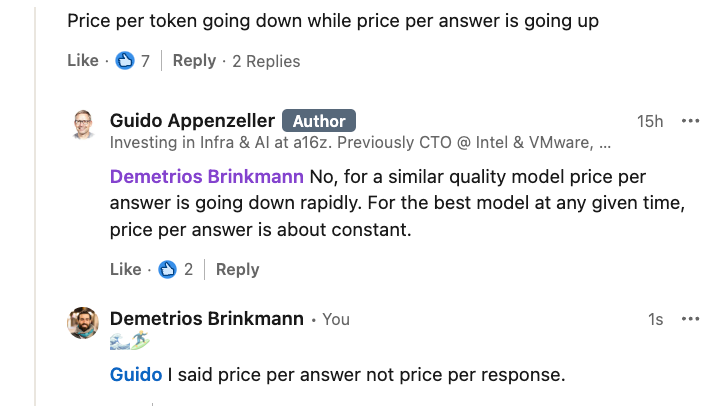

That is not cheaper. When I said so, I was hit with a bit of disagreement. So allow me to explain myself.

Token output is not the same as getting answers.

The expectation that AI can handle complexity translates to system complexity on the backend. Before, common practice was to make one LLM call, get a response, and call it a day. Now things have changed.

Look at the whole user experience from a systems level.

Forget about reasoning for a moment. Forget about o1 that abstracts away extra LLM calls on the back end and charges the end user a higher price. Forget about LLMs as a judge and all that fancy stuff. We will get to that later.

For now, look at me as the user of an AI system.

I am increasingly asking more complex questions of my AI. I am expecting it to “do” more complex tasks for me, much more than just asking ChatGPT questions. I want it to gather various data points, summarize them, and send the summary to colleagues.

I am expecting more from AI. I turn to it with increasingly more complex questions.

My expectation translates to asking questions I wouldn’t have thought AI could handle a year ago. It also translates into longer and longer prompts that use more and more of the context window.

This expectation that AI can handle complexity translates to system complexity on the backend. Before, common practice was to make one LLM call, get a response, and call it a day. Things have changed. I am not saying that use case no longer exists, but I am saying it’s less common.

But that is not the AI revolution we were promised. That is not the big productivity gain this hype wave was built on. Companies giving their employees an enterprise license to use OpenAI/Anthropic is not what will boost the nation’s GDP.

What is valuable is AI that ‘does stuff’. So when we expect ‘actions’ we talk agents.

You know what agents come with?

You guessed it, more LLM calls.

LLMs as a judge? More LLM calls. Reasoning and planning? LLMs to carry out the tasks the planning LLM created? More LLM calls.

Context RAG, so the chunks you send to the LLM are enriched with extra information that an LLM provides? More LLM calls.

You read a paper and saw a new technique to boost the quality of your LLM output? You want to use LLMs as a jury to take the average of various LLMs judging the output.

More LLM calls.

The architecture needed to meet my high expectations means my AI system is not a simple one-off API call anymore.

So yes, the price of a one-off LLM call that gives me a response is plummeting. But getting an answer from a complicated architecture that makes many LLM calls means the price per answer is going up.
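The arithmetic behind that claim can be sketched in a few lines. This is a back-of-the-envelope model, not real pricing: the `Call` class, the token counts, the number of calls, and the per-million-token prices are all made-up values chosen only to show the shape of the effect.

```python
from dataclasses import dataclass


@dataclass
class Call:
    """One LLM call in a pipeline. Token counts are illustrative only."""
    input_tokens: int
    output_tokens: int


def cost_per_answer(calls, price_in_per_mtok, price_out_per_mtok):
    """Sum the dollar cost of every LLM call needed to produce one answer."""
    return sum(
        c.input_tokens * price_in_per_mtok / 1_000_000
        + c.output_tokens * price_out_per_mtok / 1_000_000
        for c in calls
    )


# Hypothetical "before": one call, short prompt, higher token prices.
then = cost_per_answer(
    [Call(input_tokens=1_000, output_tokens=500)],
    price_in_per_mtok=10.0,
    price_out_per_mtok=30.0,
)

# Hypothetical "now": token prices are 4x lower, but one answer takes
# 20 calls with much longer prompts (planning, sub-tasks, judging, ...).
now = cost_per_answer(
    [Call(input_tokens=20_000, output_tokens=1_000)] * 20,
    price_in_per_mtok=2.5,
    price_out_per_mtok=7.5,
)

print(f"before: ${then:.3f} per answer, now: ${now:.3f} per answer")
```

With these assumed numbers the per-token price drops by 4x while the per-answer price still rises, because the number of calls and the prompt length grow faster than the token price falls. Plug in your own numbers; the conclusion depends entirely on them.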

Allow me to illustrate this with a real-life scenario.

I expect Cursor to implement a major feature and refactor multiple files by breaking the task down into 10 sub-tasks and executing them in parallel (all LLM calls) then recombining all the various diffs into a coherent code change.
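To make the call count concrete, here is a minimal sketch of that fan-out and recombine pattern. It says nothing about how Cursor actually works: `CountingLLM` is a hypothetical stand-in that only counts calls, and modeling the planning step and the merge step as exactly one call each is an assumption.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class CountingLLM:
    """Hypothetical stand-in for an LLM client; it only counts calls."""

    def __init__(self):
        self._lock = threading.Lock()
        self.calls = 0

    def complete(self, prompt: str) -> str:
        # The lock keeps the counter correct when calls run in parallel.
        with self._lock:
            self.calls += 1
        return f"diff for [{prompt}]"


def implement_feature(llm, task, n_subtasks=10):
    # Assumption: breaking the task down costs one planning call.
    llm.complete(f"plan: {task}")
    subtasks = [f"{task} / sub-task {i}" for i in range(1, n_subtasks + 1)]

    # Fan out: every sub-task is its own LLM call, run in parallel.
    with ThreadPoolExecutor(max_workers=n_subtasks) as pool:
        diffs = list(pool.map(llm.complete, subtasks))

    # Assumption: recombining the diffs costs one more call.
    merged = llm.complete("merge: " + " | ".join(diffs))
    return diffs, merged


llm = CountingLLM()
diffs, merged = implement_feature(llm, "add export feature")
print(f"{len(diffs)} diffs produced using {llm.calls} LLM calls")
```

Running the sub-tasks in parallel cuts the waiting time, not the bill: every call is still paid for.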

But actually, I would take it a step further and say that just because I get a response does not mean I got an answer.

Humans relate better to stories. Here is a story then.

Donné and Ioannis spoke at the Agents in Production conference about the data analyst agent they built.

(Side note — the capabilities of the agent are awesome and Donné was very transparent with the challenges they had while building. Highly worth a watch.) But back to the example.

In our story, I need to gather insights about the previous year’s sales from the MLOps online merch shop. After I have my insights I will write a briefing for leadership to decide what SKUs stay vs go.

So I ask my data analyst agent a few ‘simple’ questions:

- What were the total sales for the year?

- Which SKU performed the best in total?

- Which seasons saw the most sales?

Then maybe I start to spice it up:

- Which SKU performed best in winter? Summer? etc?

- Are there any colors that performed better at certain times of the year?

- Did any surge in sales come from the MLOps Conferences we had? If so, what was the top-performing SKU?

Each one of these questions triggers several LLM calls. (We still haven’t talked about reasoning or LLMs as a judge. We will get to that.)

And let me reiterate: I have lots of responses. However, I do not have my answer.

But Open Source!

This is the point where many of you will jump in and say, “What about small fine-tuned open-source models?”

I know. I hear you. And you are kinda arguing my exact point.

Think about small open-source fine-tuned models from a holistic view:

- Who fine-tuned the model? An MLE? A SWE? How much is their salary?

- How many GPU hours did you use to fine-tune the model?

- Where did you get that data from and who labeled it? Another model? A human? (Sounds expensive)

- Where are you hosting that model and how? Managed inference? Roll your own? If you are renting those GPUs I hope you have some good alerting set up.

So while I do agree that a small domain-specific fine-tuned model is going to be amazingly cheap, don’t forget, you will need many of these models.

Add the cost of creating each fine-tuned model to the cost per answer.
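One way to do that addition is to spread the one-time costs over the number of answers the model ends up serving. The sketch below assumes that simple amortization; the function name, the cost categories, and every dollar figure are hypothetical placeholders, not measurements.

```python
def amortized_cost_per_answer(fixed_costs, inference_cost_per_answer, answers_served):
    """Spread one-time fine-tuning costs over every answer the model serves."""
    if answers_served <= 0:
        raise ValueError("answers_served must be positive")
    return sum(fixed_costs.values()) / answers_served + inference_cost_per_answer


# Hypothetical one-time costs, in dollars, for ONE fine-tuned model.
fixed_costs = {
    "engineer_time": 20_000,
    "gpu_hours": 2_000,
    "data_labeling": 8_000,
}

# Assumed inference cost of a tenth of a cent per answer.
low_volume = amortized_cost_per_answer(fixed_costs, 0.001, answers_served=100_000)
high_volume = amortized_cost_per_answer(fixed_costs, 0.001, answers_served=10_000_000)

print(f"low volume:  ${low_volume:.4f} per answer")
print(f"high volume: ${high_volume:.4f} per answer")
```

Under these assumptions the cheap inference only dominates once volume is high enough to absorb the fixed costs, and the fixed costs repeat for every additional fine-tuned model you need.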

And then factor in the human hours of deciding if all that work is worth it. Debugging the system when the end result isn’t up to par is a wild goose chase.

- Did you fine-tune the model right?

- Did you have to contract a new vendor to give you ‘easier’ fine-tuning capabilities? Is the bug in their system?

- Did you give it enough high-quality data?

- Should you use a bigger model?

- Maybe it’s the agent architecture that doesn’t work with domain-specific models?

(It pains me to make this meme. I love open source and want to see it flourish. But I also gotta call a spade a spade. That being said, each use case is different and each comes with its own constraints. Take the meme with a grain of salt.)

Debugging the AI system when the end result isn’t up to par is a wild goose chase.

Anyway.

Back to me needing to give my boss the executive summary of this year’s sales so they can make some high-quality decisions.

You may remember, I still don’t have my answer. I need to ask about a few more sales stats with respect to seasons, colors, and sizes. I also want to see which products were the most returned out of our catalogue.

And…BOOM! I just broke the system.

My agent stops responding.

Until now the agent was playing nicely with my simple prompts about the data. But when I ask it to go check the data and then change systems to cross-reference that data with another group of data…guess what?

I need to do a little prompt finagling.

Before going down the prompting rabbit hole let's recap where I am at.

I have some sense of what’s going on with the sales. I still do not have an answer. Oh and by the way, we still haven’t talked about LLMs as a judge, let alone a jury. I promise we will get to that.

So I sit there staring at the context window, trying to word what I am looking for differently. I use a few tricks I have seen my favorite AI influencers talk about on X. I reference the prompt guide I bought last summer when I realized I need to learn more about AI. I find a tool that helps me craft better prompts online.

Each time I test a new prompt you know what that equals? More LLM calls.

And finally, with some divine intervention, it returns what I asked for... kinda. I put my hands together and pray it’s not a hallucination.

This is the point where I feel like I have all the information I need to reach a conclusion. I can give a briefing to leadership on which SKUs to rotate out for the next year. So I stuff all the responses into the context window and ask the AI to create an executive summary for me.

The only problem?

My recommendations are insanely incorrect due to me basing these insights on low-quality LLM output.

Why is the output low quality, you say?

My data analyst agent creators didn’t use LLMs as a Judge.

Or Jury.

So yes, agentic RAG, big brain little brain, LLMs as a judge, Context RAG, and a few gullible, busy humans mean that cost per token is going down but cost per answer is going up.

Let us end with a thought experiment

Let’s turn our attention to what all this means for the LLM providers (Google, Anthropic, OpenAI, etc.). How do the unit economics work?

If the cost of training a model is going up, revenue per token is going down, and the number of calls to get a good answer is increasing (because of the shift in the complexity of the tasks people are executing), what does that do to the whole ecosystem in the meantime?

But hey what do I know? I was recently awarded the title of knowing nothing about agents by Podcaster-k4j.

Take all that I say with a healthy dose of skepticism.

Thank you Todd Underwood, Meb, Luke Marsden, and Devansh Devansh for your invaluable feedback.
