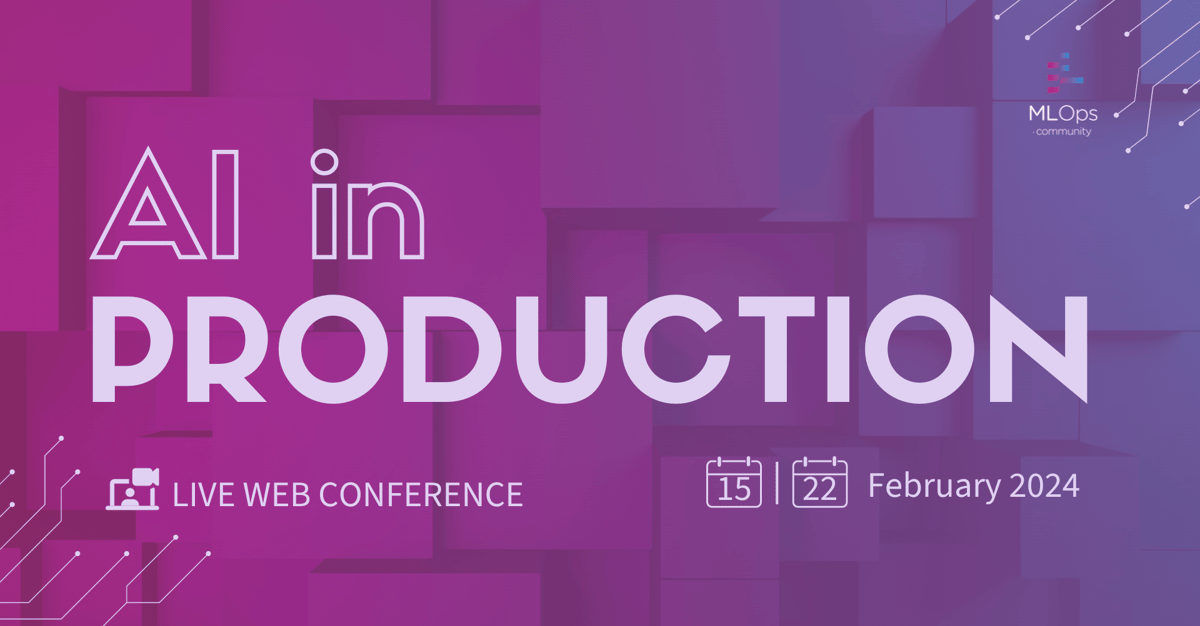

LIVESTREAM

AI in Production

Tags: AI, ML, MLOps, AI Agents, LLM, Finetuning

Large Language Models have taken the world by storm. But what are the real use cases? What are the challenges in productionizing them?

In this event, you will hear from practitioners about how they handle cost optimization, latency requirements, output trustworthiness, and debugging.

You will also have the opportunity to join workshops that show you how to set up your own use cases and avoid the usual headaches.

Speakers

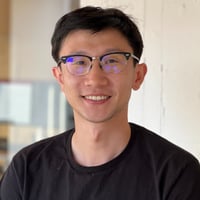

Philipp Schmid

AI Developer Experience @ Google DeepMind

Linus Lee

Research Engineer @ Notion

Holden Karau

Software Engineer @ Netflix (days) & Totally Legit Co (weekends)

Kai Wang

Group Product Manager - AI Platform @ Uber

Alejandro Saucedo

Director of Engineering, Applied Science, Product & Analytics @ Zalando

Shreya Rajpal

Creator @ Guardrails AI

Faizaan Charania

Senior Product Manager, ML @ LinkedIn

Olatunji Ruwase

Principal Research Sciences Manager @ Microsoft

Shreya Shankar

PhD Student @ UC Berkeley

Amritha Arun Babu

AI/ML Product Leader @ Klaviyo

Nyla Worker

Director of Product @ Convai

Jason Liu

Independent Consultant @ 567

Maria Vechtomova

MLOps Tech Lead @ Ahold Delhaize

Maxime Labonne

Senior Machine Learning Scientist @ --

Hien Luu

Head of ML Platform @ DoorDash

Sarah Guo

Founder @ Conviction

Başak Tuğçe Eskili

ML Engineer @ Booking.com

Cameron Wolfe

Director of AI @ Rebuy Engine

Aarash Heydari

Technical Staff @ Perplexity AI

Dhruv Ghulati

Product | Applied AI @ Uber

Katharine Jarmul

Principal Data Scientist @ Thoughtworks

Diego Oppenheimer

Head of Product @ Hyperparam

Julia Turc

Co-CEO @ Storia AI

Aditya Bindal

Vice President, Product @ Contextual AI

Annie Condon

AI Solutions Engineer @ Acre Security

Ads Dawson

Senior Security Engineer @ Cohere

Greg Kamradt

Founder @ Data Independent

Willem Pienaar

Co-Founder & CTO @ Cleric

Arjun Bansal

CEO and Co-founder @ Log10.io

Jineet Doshi

Staff Data Scientist @ Intuit

Andy McMahon

Director - Principal AI Engineer @ Barclays Bank

Meryem Arik

Co-founder/CEO @ TitanML

Hannes Hapke

Principal Machine Learning Engineer @ Digits

Eric Peter

Product, AI Platform @ Databricks

Matt Sharp

AI Strategist and Principal Engineer @ Flexion

Daniel Lenton

CEO @ Unify

Rex Harris

Founder, AI Product Lead @ Agents of Change

Jonny Dimond

CTO @ Shortwave

Arnav Singhvi

Research Scientist Intern @ Databricks

David Haber

CEO @ Lakera

Sam Stone

Head of Product @ Tome

Andriy Mulyar

Cofounder and CTO @ Nomic AI

Mihail Eric

Co-founder @ Storia AI

Phillip Carter

Principal Product Manager @ Honeycomb

Salma Mayorquin

Co-Founder @ Remyx AI

Jerry Liu

CEO @ LlamaIndex

Lina Paola Chaparro Perez

Machine Learning Project Leader @ Mercado Libre

Austin Bell

Staff Software Engineer, Machine Learning @ Slack

Stanislas Polu

Software Engineer & Co-Founder @ Dust

David Aponte

Senior Research SDE, Applied Sciences Group @ Microsoft

Charles Brecque

Founder & CEO @ TextMine

Agnieszka Mikołajczyk-Bareła

Senior AI Engineer @ CHAPTR

Louis Guitton

Freelance Solutions Architect @ guitton.co

Yinxi Zhang

Staff Data Scientist @ Databricks

Donné Stevenson

Machine Learning Engineer @ Prosus Group

Abigail Haddad

Lead Data Scientist @ Capital Technology Group

Atita Arora

Developer Relations Manager @ Qdrant

Andrew Hoh

Co-Founder @ LastMile AI

Arjun Kannan

Co-founder @ ResiDesk

Rahul Parundekar

Founder @ A.I. Hero, inc.

Michelle Chan

Senior Product Manager @ Deepgram

Bryant Son

Senior Solutions Architect @ GitHub

Alex Cabrera

Co-Founder @ Zeno

Zairah Mustahsan

Staff Data Scientist @ You.com

Jiaxin Zhang

AI Staff Research Scientist @ Intuit

Stuart Winter-Tear

Head of AI Product @ Genaios

Chang She

CEO / Co-founder @ LanceDB

Matt Bleifer

Group Product Manager @ Tecton

Anthony Alcaraz

Chief AI Officer @ Fribl

Vipula Rawte

Ph.D. Student in Computer Science @ AIISC, UofSC

Kai Davenport

Software Engineer @ HelixML

Adam Becker

IRL @ MLOps Community

Biswaroop Bhattacharjee

Senior ML Engineer @ Prem AI

Alex Volkov

AI Evangelist @ Weights & Biases

John Whaley

Founder @ Inception Studio

Denny Lee

Sr. Staff Developer Advocate @ Databricks

Anshul Ramachandran

Head of Enterprise & Partnerships @ Codeium

Philip Kiely

Head of Developer Relations @ Baseten

Almog Baku

Fractional CTO for LLMs @ Consultant

Omoju Miller

CEO and Founder @ Fimio

Demetrios Brinkmann

Chief Happiness Engineer @ MLOps Community

Ofer Hermoni

AI Transformation Consultant @ Stealth

Agenda

2024-02-15, 2024-02-22

Tracks: Engineering Track, Product Track, Workshop

4:30 PM to 4:50 PM GMT

Tags: Engineering Stage, Opening / Closing

Welcome - AI in Production

4:50 PM to 5:20 PM GMT

Tags: Engineering Stage, Keynote

Anatomy of a Software 3.0 Company

5:20 PM to 5:50 PM GMT

Tags: Engineering Stage, Keynote

From Past Lessons to Future Horizons: Building the Next Generation of Reliable AI

5:50 PM to 6:20 PM GMT

Tags: Engineering Stage, Presentation

Productionizing Health Insurance Appeal Generation

6:20 PM to 6:40 PM GMT

Tags: Engineering Stage, Break

Musical Entertainment

6:40 PM to 6:50 PM GMT

Tags: Engineering Stage, Lightning Talk

Navigating through Retrieval Evaluation to demystify LLM Wonderland

6:50 PM to 7:00 PM GMT

Tags: Engineering Stage, Lightning Talk

Graphs and Language

7:00 PM to 7:10 PM GMT

Tags: Engineering Stage, Lightning Talk

Explaining ChatGPT to Anyone in 10 Minutes

7:10 PM to 7:40 PM GMT

Tags: Engineering Stage, Presentation

Making Sense of LLMOps

7:40 PM to 7:50 PM GMT

Tags: Engineering Stage, Lightning Talk

Productionizing AI: How to Think From the End

7:50 PM to 8:00 PM GMT

Tags: Engineering Stage, Lightning Talk

Evaluating Large Language Models (LLMs) for Production

8:00 PM to 8:30 PM GMT

Tags: Engineering Stage, Lightning Talk

Vision Pipelines in Production: Serving & Optimisations

8:26 PM to 8:33 PM GMT

Tags: Engineering Stage, Break

Guess the Speaker - Quiz

8:33 PM to 9:00 PM GMT

Tags: Engineering Stage, Presentation

Charting LLMOps Odyssey: challenges and adaptations

9:00 PM to 9:10 PM GMT

Tags: Engineering Stage, Lightning Talk

Evaluating Language Models

9:10 PM to 9:30 PM GMT

Tags: Engineering Stage, Presentation

Evaluating Data

9:30 PM to 9:40 PM GMT

Tags: Engineering Stage, Lightning Talk

Helix - Fine Tuning for Llamas

9:40 PM to 9:58 PM GMT

Tags: Engineering Stage, Lightning Talk

Let's Build a Website in 10 minutes with GitHub Copilot

10:00 PM to 10:10 PM GMT

Tags: Engineering Stage, Lightning Talk

RagSys: RAG is just RecSys in Disguise

10:10 PM to 10:20 PM GMT

Tags: Engineering Stage, Break

A Dash of Humor

10:20 PM to 10:30 PM GMT

Tags: Engineering Stage, Lightning Talk

Machine Learning beyond GenAI - Quo Vadis?

10:30 PM to 10:40 PM GMT

Tags: Engineering Stage, Lightning Talk

Navigating the Emerging LLMOps Stack

11:00 PM to 11:10 PM GMT

Tags: Engineering Stage, Lightning Talk

Accelerate ML Production with Agents

11:08 PM to 11:38 PM GMT

Tags: Engineering Stage, Presentation

A Survey of Production RAG Pain Points and Solutions

Event has finished

February 22, 4:30 PM GMT

Online

Organized by MLOps Community