LIVESTREAM

AI in Production 2025

# AI in Production

We’re Back for Round Two!

The AI in Production 2025 event builds on the momentum of last year, with a sharper focus on the toughest challenges of deploying AI at scale.

LLMs and AI applications are advancing rapidly, but production hurdles haven’t gone away. This year, we’re tackling the hard stuff: managing costs, meeting latency requirements, debugging complex systems, building trust in outputs, and, of course, agents.

Hear straight from the people making it happen and see how they’re solving problems you might be facing today.

Speakers

Shreya Rajpal

Creator @ Guardrails AI

Afshaan Mazagonwalla

AI Engineer @ Google Cloud Consulting

Vasu Sharma

Applied Research Scientist @ Meta (FAIR)

Amy Hodler

Executive Director @ GraphGeeks

Jessica Talisman

Senior Information Architect @ Adobe

Raza Habib

CEO and Co-founder @ Humanloop

Erik Bernhardsson

Founder @ Modal Labs

Hala Nelson

Author and Professor of Mathematics @ James Madison University

Merrell Stone

Research, Strategic Foresight and Human Systems @ Avanade

Bassey Etim

Senior Director - Content Strategy @ Pluralsight

Erica Greene

Director of Engineering, Machine Learning @ Yahoo

Guanhua Wang

Senior Researcher @ Microsoft

Sishi Long

Staff Software Engineer @ Uber

Michael Gschwind

Distinguished Engineer @ NVIDIA

Chiara Caratelli

Data Scientist @ Prosus Group

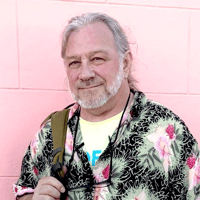

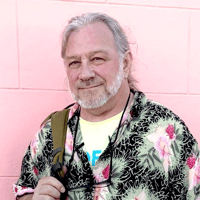

Paco Nathan

Principal Developer Relations Engineer @ Senzing

Egor Kraev

Head of AI @ Wise

Monika Podsiadlo

Voice AI @ Google DeepMind

Gaurav Mittal

Staff Software Engineer @ Stripe

Aditya Gautam

Machine Learning Technical Lead @ Meta

Weidong Yang

CEO @ Kineviz

Alessandro Negro

Chief Scientist @ GraphAware

Samuel Partee

CTO & Co-Founder @ Arcade AI

David Hughes

Principal Solution Architect - Engineering & AI @ Enterprise Knowledge

Brianna Connelly

VP of Data Science @ Filevine

Sebastian Kukla

Digital Transformation-North America @ RHI Magnesita

Sahil Khanna

Senior Machine Learning Engineer @ Adobe

Dimitrios Athanasakis

Principal AI Engineer @ AstraZeneca

Tanmay Chopra

Founder / CEO @ Emissary

Aisha Yusaf

Founder @ Orra

Rex Harris

Founder, AI Product Lead @ Agents of Change

Julia Gomes

Senior Product Manager @ Inworld AI

Ron Chrisley

Professor of Cognitive Science and AI @ University of Sussex

Ezo Saleh

Founder @ Orra

Vaibhav Gupta

CEO @ Boundary ML

Tom Shapland

CEO @ Canonical AI

Ilya Reznik

Head of Edge ML @ Live View Technologies

Daniel Svonava

CEO & Co-founder @ Superlinked

Rahul Parundekar

Founder @ A.I. Hero, Inc.

Mohan Atreya

Chief Product Officer @ Rafay

Joshua Alphonse

Head of Product @ PremAI

Demetrios Brinkmann

Chief Happiness Engineer @ MLOps Community

Qasim Wani

CTO @ Advex

Agenda

Stage 1

Stage 2

Stage 3

From 3:00 PM to 3:20 PM GMT

Tags: Opening / Closing

Welcome to AI in Production 2025

From 3:25 PM to 3:50 PM GMT

Tags: Keynote

The LLM Guardrails Index: Benchmarking Responsible AI Deployment

From 3:55 PM to 4:20 PM GMT

Tags: Presentation

Graph Retrieval - Let Me Count The Ways

From 4:25 PM to 4:50 PM GMT

Tags: Presentation

Bridging the gap between Model Development and AI Infrastructure

From 4:55 PM to 5:05 PM GMT

Tags: Break

Break

From 5:05 PM to 5:30 PM GMT

Tags: Presentation

Eval Driven Development: Best Practices and Pitfalls When Building with AI

From 5:35 PM to 6:00 PM GMT

Tags: Presentation

Building Consumer Facing GenAI Chatbots: Lessons in AI Design, Scaling, and Brand Safety

From 6:05 PM to 6:35 PM GMT

Tags: Panel Discussion

Building Platforms for Gen AI Workloads vs Traditional ML Workloads

From 6:35 PM to 6:45 PM GMT

Tags: Break

Break

From 6:45 PM to 7:10 PM GMT

Tags: Presentation

Unlocking AI Agents: Fixing Authorization to Get Real Work Done

From 7:15 PM to 7:40 PM GMT

Tags: Presentation

Introducing the Prompt Engineering Toolkit

From 7:45 PM to 7:55 PM GMT

Tags: Break

Break

From 7:55 PM to 8:20 PM GMT

Tags: Presentation

LLM in Large-Scale Recommendation Systems: Use Cases and Challenges

From 8:25 PM to 8:50 PM GMT

Tags: Presentation

Doxing the Dark Web

From 8:55 PM to 9:20 PM GMT

Tags: Closing Keynote

The AI Developer Experience Sucks so Let's Fix it – The Story of Modal

Event has finished

March 12, 3:00 PM GMT

Online

Organized by

MLOps Community