Best Practices Towards Productionizing GenAI Workflows

Speaker

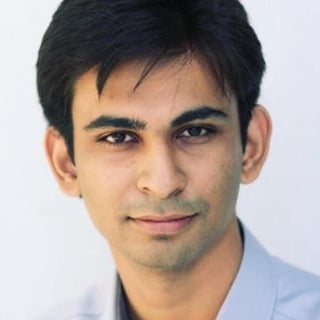

Savin Goyal has extensive experience in technology. He is currently co-founder and CTO of Outerbounds, where he is actively hiring top-tier talent and focuses on the intersection of systems, machine learning, and design.

Before that, Savin worked at Netflix as a software engineer specializing in machine learning infrastructure. He led the ML infrastructure team and developed Metaflow, a platform widely adopted by ML teams at Fortune 500 companies.

SUMMARY

Savin Goyal, co-founder and CTO of Outerbounds and former Netflix tech lead, discusses Metaflow, an open-source platform for managing machine learning infrastructure. He explores generative AI ("gen AI") and its impact on personalized customer experiences, emphasizing data's crucial role in ML infrastructure, including storage, processing, and security. He highlights Metaflow's orchestration capabilities, which simplify deployment for data scientists through Python-based infrastructure as code. The platform addresses engineering challenges such as optimizing GPU usage and handling multitenant workloads, while emphasizing continuous improvement and reproducibility. Goyal advocates agile experimentation and the development of "full stack data scientists," presenting Metaflow as a way to connect securely to data warehouses and generate embeddings.

TRANSCRIPT


Savin Goyal [00:00:00]: Okay, cool. Let's get started. My name is Savin. I'm the co-founder and CTO at Outerbounds. Outerbounds is a startup building an ML platform, and we have been at it for close to two and a half years. Before that, along with my co-founder, I was at Netflix, building Netflix's ML platform. Many of the things we built power the recommendation experience you might see every day when you stream content on Netflix. So if you like your recommendations, the bulk of the work we did definitely found its way there.

Savin Goyal [00:00:38]: If you do not like your recommendations, then the fact that I left Netflix two and a half years ago would have much to do with it. While we were at Netflix, we had the opportunity not only to build a platform that served Netflix's needs, but also to open-source much of our work as a project called Metaflow, which today powers many other large enterprises you might already be familiar with. Not only are your Netflix recommendations powered by Metaflow, your Amazon Prime Video recommendations are also powered through that ecosystem. If you send your DNA sample to 23andMe for analysis, they run their algorithms through our systems. If you bank with Goldman Sachs, or look for investment advice from Morningstar, it's very likely those products are using our technology behind the scenes. But today, I'm here to talk about gen AI and what the ML infrastructure ecosystem looks like in this context. Earlier this evening, I was talking to a few of you who are already building new gen AI-enabled experiences. One gentleman here is building an experience where natural language search powers an e-commerce site. So imagine that you are a new-age Wayfair.

Savin Goyal [00:02:10]: You want to upend their business model and provide a much better buying experience. One mechanism you might use is to strap on an NLP experience that figures out the actual furniture somebody wants to buy, and really fine-tune their experience in terms of what furniture would look nicest in their apartment, while optimizing for pricing and things of that nature. Say I'm one of these users: I go to this website because I want a new chandelier or a new lamp, and I search for it. This entire experience may be very heavily personalized for me. This already happens today when you go to Amazon.com, or when you are on Netflix: everybody's screen looks very different. Netflix understands what kind of content you want to watch, so as soon as you log in, every single piece of content is optimized for you. Amazon understands your buying habits.

Savin Goyal [00:03:10]: Amazon also understands what it can deliver to you most cheaply as well as most quickly, and all of those things factor into the search results you see. If you are relying on any of these gen AI models, you would definitely want to be able to embed much of the intelligence that is part and parcel of your specific enterprise into them. Imagine you have higher margins on certain kinds of lamps; then maybe you'll want to showcase those a lot more to your end users. If you understand that your end user cares about a specific kind of architecture or subscribes to a specific design philosophy, you may also want to personalize the actual product description, so that the chance somebody buys that product goes up. Those are all areas where gen AI can come in and help.

Savin Goyal [00:04:06]: So, for example, if we look at this simple product, you could have an LLM-powered search experience. You could have personalized discovery. You could create images on the fly: maybe the user provides some prompts describing what their apartment looks like, and you visualize what this piece of furniture would look like sitting in their living room. You could tune your product descriptions to optimize your conversion rates. So there are plenty of ways to use gen AI, LLMs, and all the cool things the presenters before me have spoken about to really supercharge somebody's buying experience. Now, one important thing here is that this entire experience is not one single model. It's a collection of different models and different approaches powering one experience.

Savin Goyal [00:05:05]: And this is something we see quite often: when it comes to actual use cases within enterprises, it's quite rare that you use just one model or one specific way of engaging with generative AI models. It's usually a collection of a few different approaches. Not only that, there could be other machine learning techniques involved that don't strictly fall under the bucket of gen AI. For example, you may want to forecast your inventory. Of course, you wouldn't want to push a sofa very heavily to your customers when you know it might be stuck in the Suez Canal for many months. You may want to optimize for the lifetime value of your customers. Maybe there is a marketing campaign going on, and you want to attribute somebody's buying decision back to that campaign so that you can optimize your marketing budgets going forward.

Savin Goyal [00:06:06]: Those might not be part of your LLM strategy at all, but rather part of what we now call old-school machine learning. The reality is that as an organization, you have to care about all of these problems. If you are shipping an actual product to paying customers, you have to worry about the entire universe of the problem. And that starts presenting problems across the entire layer cake of machine learning infrastructure, which I'll walk through. All of these problems start with data. The presenter right before me very accurately called out that, at the end of the day, all of these problems are rooted in data. You might be dealing with large volumes of data, small volumes of data, or something in between. But depending on the actual use case, you have to really think it through.

Savin Goyal [00:07:03]: What kind of data sets do you have access to? How do you store that data? How do you process it? How do you secure access to it, especially if that information is sensitive? On top of that, data by itself doesn't have a lot of value. You have to run some compute on top of it. It could be as simple as processing the data so other systems can consume it, or it could be training logistic regression models or neural nets, building RAG systems, or fine-tuning your models. Or, if you have access to a huge GPU fleet and millions of dollars to burn, maybe you want to create your own foundation model. And this compute is often not a one-time activity. Data science has science in its name, so it's very experimental. You don't know ahead of time whether your results will hit the mark, and even if they do, there's always potential to do better.

Savin Goyal [00:08:07]: So you have to get into a zone where you can iterate very quickly and run many experiments cheaply. How do you author and orchestrate these workloads? How do you version them so you can benchmark their performance and figure out whether, if you tweak some settings, try a new data set, or try a net-new approach, your metrics are moving in the right direction? Doing that effectively across a wide spectrum of machine learning styles becomes really important in any organization. What we have spoken about so far is how you process the data and how you get to a model. But then how do you deploy? Deployment can mean many different things. In traditional machine learning, it could mean: I have this model; can I host it as an endpoint? Can I deploy it inside a cell phone so I can do on-device inference? Maybe I've had the resources to train my own foundation model; how do I host it when it may be too big to fit on one single machine? If I'm building a RAG system, what does it mean to deploy it, and to make sure it's reliably deployed? Many different flavors come up in any organization.

Savin Goyal [00:09:32]: It's also very likely that the SLAs you associate with these deployment strategies vary significantly. Serving the 100 or 200 million members Netflix has for its recommendation systems requires a very different deployment strategy than deploying a model for an internal dashboard, which may not require as much rigor. But an organization may need both approaches. Everything we have spoken about so far has been on the infrastructure side of the house. Then there is the actual data science aspect, which data scientists have to worry about day in and day out. If you're doing prompt engineering, how do you write better prompts? If you're training a model with traditional machine learning, how do you approach feature engineering? Should you be using PyTorch or TensorFlow? Should you roll out your own algorithm implementation? Those are the questions data scientists want to tackle. But what we observed back at Netflix was that most data scientists would spend a significant fraction of their time just battling infrastructure concerns, an area they are not really interested in.

Savin Goyal [00:10:54]: They are very technical and very smart, but if they wanted to solve engineering or infrastructure problems, they would have chosen to be infrastructure engineers. There's a reason they like doing data science work. Unfortunately, given the state of platform availability in most organizations, their actual effectiveness is highly limited. That was the goal of our work at Netflix: how can we ensure that data scientists can be full-stack data scientists? Think about software engineering since the 80s. There used to be a time when every organization needed a massive engineering team to get even the simplest engineering tasks done: software engineers, release managers, QA engineers, database admins. With the DevOps movement, we have enabled a software engineer to go end to end. There is now an inherent expectation that a software engineer can be product-minded, ship an entire product end to end, and manage its lifecycle. We want to have the same expectation of a team of data scientists: that they can run with a business metric they need to optimize.

Savin Goyal [00:12:15]: They can bring in their own business foresight and create machine learning models, and not stop there, but go all out, build machine learning systems, and be responsible for managing the evolution of those systems over the longer term. So let's see how that plays out. This is likely true in most organizations today, especially in the gen AI ecosystem: to run the entire business, an organization might need a variety of different approaches. For example, if there's a CX system to be built, it might rely on fine-tuned LLMs or RAG systems. There may be a product that customers use that relies on personalized recommendations, which could come from traditional machine learning models. Those could also consume embeddings generated by your LLMs. And you might not be limited to textual models; you could use multimodal models for a variety of use cases as well. For the second half of my talk, I'm going to give you a quick walkthrough of some of the problems people run into when building these data-driven systems, and how some of the systems we have built can come in handy.

Savin Goyal [00:13:42]: Of course, this is very high level, but if you have questions, please come talk to me. I'll have plenty of links at the end of the talk if you're curious to learn more. I won't go deep into what a RAG system is; the presenters before me have done an excellent job at that. But let's think about the data aspect of building RAG systems and what that looks like. You have your data warehouse, which holds some data. Maybe that data gets refreshed on some cadence. You have access to some off-the-shelf model: it could be Llama 2, Mistral, or Mixtral.

Savin Goyal [00:14:20]: And there's always a net-new model coming up. Maybe you have invested in a vector DB as well, which could be anything from some embeddings lying in S3 to working with a vendor to store your vector embeddings. With Metaflow, we enable data scientists to author these workflows in a simple and straightforward manner. Metaflow is rooted in Python, so we expect our data scientists to be fairly proficient in writing Python code. One of the first things you'd want to do when creating a semantic cache of sorts is get access to the model. You'd also want to keep track of which model you used to generate these embeddings, because these models change over time.
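To make the vector DB idea concrete: at query time, a RAG system embeds the query and returns the stored chunks whose embeddings are most similar to it. The sketch below is a brute-force, in-memory stand-in for that lookup using cosine similarity. The function names and the 3-dimensional toy vectors are hypothetical; a real vector DB would use an approximate nearest-neighbour index over embeddings produced by an actual model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the ids of the k stored embeddings most similar to `query`.

    `index` maps a document id to its embedding. This scan is O(n) per query,
    which is exactly the cost a vector DB's index is designed to avoid.
    """
    ranked = sorted(index.items(), key=lambda item: cosine(query, item[1]), reverse=True)
    return [doc_id for doc_id, _ in ranked[:k]]


# Hypothetical toy embeddings for three product descriptions.
index = {
    "brass lamp": [1.0, 0.0, 0.0],
    "velvet sofa": [0.0, 1.0, 0.0],
    "crystal chandelier": [0.9, 0.1, 0.0],
}
print(top_k([1.0, 0.0, 0.0], index))  # ['brass lamp', 'crystal chandelier']
```

A semantic cache works the same way, except the stored items are previous queries and their answers, and a hit is declared only above some similarity threshold.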

Savin Goyal [00:15:18]: Many times they can change beneath you without you even realizing that the model vendor has changed the model weights. So it's usually really important that you can snapshot the model, if you have access to it, while you are generating these embeddings. You would also want to access the data you are generating these embeddings from in a secure manner. Within Metaflow, we provide many capabilities for connecting securely to your data warehouses, which can be important, especially if you are part of a security-conscious organization. Then you may want to generate these embeddings for every single shard of your data. That isn't really a problem with small data, but it is with large volumes. At Netflix, for example, we generated embeddings from all the raw video that Netflix accumulated almost every single day as it shot new TV shows and movies. On top of that, the data science team kept coming up with new versions of the models. So embeddings were constantly being generated internally, and that consumed a huge amount of compute. In our case back then, we wrote all of these embeddings back into S3.
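One way to act on the advice about snapshotting the model is to fingerprint the exact weight files used for a run and make that fingerprint part of where the embeddings are written, so embeddings from different model versions never get mixed up. This is a plain-Python sketch under stated assumptions: the weights are local files, and both the helper names and the key layout (embeddings/<fingerprint>/shard-NNNNN.npy) are hypothetical conventions, not something Metaflow prescribes.

```python
import hashlib
from pathlib import Path


def fingerprint(paths, chunk_size=1 << 20):
    """SHA-256 over the names and bytes of all weight files, in sorted path order.

    Any change to the weights (even a silent vendor update) yields a new digest.
    Files are streamed in 1 MiB chunks so large checkpoints fit in memory.
    """
    h = hashlib.sha256()
    for path in sorted(Path(p) for p in paths):
        h.update(path.name.encode())
        with path.open("rb") as f:
            for chunk in iter(lambda: f.read(chunk_size), b""):
                h.update(chunk)
    return h.hexdigest()


def embedding_key(model_sha, shard_id, prefix="embeddings"):
    """Hypothetical storage key: embeddings are grouped by the model that produced them."""
    return f"{prefix}/{model_sha[:12]}/shard-{shard_id:05d}.npy"


print(embedding_key("abcdef0123456789", 3))  # embeddings/abcdef012345/shard-00003.npy
```

Inside a Metaflow step, the digest could be kept as an ordinary artifact (for example self.model_sha = fingerprint(weight_files), with weight_files being whatever your checkpoint consists of), so every run records which weights produced its embeddings.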

Savin Goyal [00:16:45]: That worked phenomenally well for us. But imagine going to a data scientist and telling them they have to process these huge video files and generate embeddings from them. In many ways, that becomes a nontrivial data engineering problem. We want to make sure a data scientist doesn't feel encumbered, asked to do something that is out of their reach. In this particular example, maybe you want to generate embeddings on GPU instances. With Metaflow, it's as simple as specifying that this specific task needs to run on an instance with one GPU allocated to it. Metaflow will automatically figure out how to launch an instance with a GPU. If that instance has multiple GPUs, it will reserve only one, so the other GPUs can be used by other workloads. It'll automatically figure out how to move the user code onto these instances in the cloud, so your data scientists don't have to be experts in cloud engineering or Kubernetes anymore, and off they go.

Savin Goyal [00:17:56]: That's all they need to do to start running their workloads at scale. Of course, your work doesn't end here. Your data and your models are constantly being updated, and whenever you have fresh data or a newer version of the model, you want this workflow to run reliably, almost on autopilot. So you can set up dependencies on your wider infrastructure, so that whenever something changes, say new data files show up in your data warehouse, this workflow starts executing. You would also want to make sure everything is fault tolerant. Those of you who use spot instances to save on costs know they can be taken away from you at any point in time. So how do you ensure proper reliability is baked in? Metaflow enables plenty of features and functionality for this. One very simple thing you can do, if your workload is idempotent, is just retry a bunch of times.
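Metaflow provides a built-in @retry step decorator for this (for example @retry(times=3)). The plain-Python sketch below only illustrates the idea behind retrying, applied to an idempotent operation with exponential backoff and jitter; it is not Metaflow's implementation. The retry_idempotent name and its parameters are hypothetical, and the embed_shard function simulates a spot instance being reclaimed twice before succeeding.

```python
import random
import time
from functools import wraps


def retry_idempotent(times=3, base_delay=1.0, exceptions=(Exception,), sleep=time.sleep):
    """Retry a function up to `times` extra attempts.

    Only safe for idempotent work: re-running it after a partial failure must
    not corrupt state (e.g. it overwrites the same output key each time).
    """
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            for attempt in range(times + 1):
                try:
                    return fn(*args, **kwargs)
                except exceptions:
                    if attempt == times:
                        raise  # out of retries: surface the last error
                    delay = base_delay * (2 ** attempt)  # 1s, 2s, 4s, ...
                    sleep(delay + random.uniform(0, delay))  # jitter spreads out retries
        return wrapper
    return decorator


calls = {"n": 0}


@retry_idempotent(times=3, sleep=lambda seconds: None)  # skip real waiting in this demo
def embed_shard():
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("spot instance reclaimed")  # simulated transient failure
    return "ok"


print(embed_shard(), calls["n"])  # ok 3
```

The idempotency requirement is the important design constraint: retries are only harmless if a second attempt produces the same result as the first.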

Savin Goyal [00:19:06]: Another interesting aspect is how you monitor these workflows. Depending on your use case, what you want to monitor may vary significantly from project to project and from colleague to colleague. What your colleague cares about could be different from what you care about, and what you cared about in a prior project could be different from what you care about now. Within Metaflow, you can easily attach custom Pythonic reports with rich visualizations, so you can monitor the progress of your systems in real time and track the metrics that concern you. That gets you up and running with the data aspect of a RAG system. Once people have that running, what we often see is that they want to go further. The next thing they want to know is whether fine-tuning a model would yield better results. Many times they make a further leap and try to figure out whether, if they have the resources, pretraining could be a lot more helpful as well. So let's talk about how fine-tuning can help here and how you would go about doing it. Let's say we start with a Llama 2 model as before and load it up.

Savin Goyal [00:20:36]: One interesting aspect of fine-tuning is that it can be very resource intensive. You may need a lot of GPU compute, and there are limits to the number of cards you can pack onto a single instance. That number is growing, but many times you'll want to run your compute on a gang cluster. A gang cluster is simply a set of machines brought up all at the same time, so that you can distribute compute over those instances. Creating these gang clusters and managing compute on top of them is a very involved topic, and it can be rather unapproachable for data scientists. One pattern we have seen quite frequently is that data scientists are happy to prototype something in a resource-starved environment, with the expectation that a team of software engineers will then carry their work forward, make it scalable, run it at scale, and perhaps rewrite it so that the training routine can run in a distributed setting.
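The bookkeeping behind spreading work over a gang is simple even if operating the gang is not. Under the convention used by PyTorch's torchrun launcher (RANK, LOCAL_RANK, WORLD_SIZE), with the same number of GPUs on every node, each GPU process gets a global rank computed from its node index and its local GPU index. The plain-Python sketch below shows that mapping plus a strided split of a dataset across ranks. The function names are hypothetical, and nothing here starts processes; it only illustrates the arithmetic.

```python
def rank_layout(num_nodes, gpus_per_node):
    """Map each (node, local GPU) pair to a global rank, torchrun-style."""
    world_size = num_nodes * gpus_per_node
    layout = []
    for node_rank in range(num_nodes):
        for local_rank in range(gpus_per_node):
            global_rank = node_rank * gpus_per_node + local_rank
            layout.append((node_rank, local_rank, global_rank))
    return world_size, layout


def shard_for_rank(items, global_rank, world_size):
    """Strided split: rank r gets items r, r + world_size, r + 2 * world_size, ..."""
    return items[global_rank::world_size]


# A gang of 2 nodes with 4 GPUs each -> 8 training processes.
world_size, layout = rank_layout(num_nodes=2, gpus_per_node=4)
print(world_size)  # 8
print(layout[5])   # (1, 1, 5): node 1, local GPU 1, global rank 5
print(shard_for_rank(list(range(20)), global_rank=5, world_size=world_size))  # [5, 13]
```

Every item lands on exactly one rank, which is why all nodes in the gang must be up at the same time: a missing rank means a missing slice of the data.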

Savin Goyal [00:21:53]: But in that multi-team universe, the data scientist loses the ability to iterate and loses control over the model they actually want to train. With Metaflow, that becomes a lot more straightforward. With num_parallel, you define your gang cluster size. Then with @resources, you specify that, say, each node in the gang needs four GPU cards attached, and Metaflow will create that gang cluster for you behind the scenes and run the fine-tuning workload on top of it, so you don't have to worry about the nitty-gritty of distributed training. Of course, one big question that often comes up in this fast-moving world of ML is that every single day there's a new advancement: PyTorch ships a new update, or a brand-new library comes out, and you want to experiment with it. Figuring out how to install those packages and create, say, a Docker image that can run on a GPU instance can be dark magic in itself. Which packages do I need to install through pip? Which ones through apt-get? Dependencies that cross between Python and system packages can be rather complicated. And as you iterate and experiment with different package versions, there's yet another layer of complexity: if I just want to try a newer version of PyTorch, do I really need to worry about all the transitive dependencies PyTorch brings in? If I want to try running PyTorch on, say, a Trainium instance on AWS, do I really need to figure out how to build that Docker image all by myself?
We make that super easy and straightforward. Folks can simply specify the dependencies they want, and we create a stable, reproducible execution environment for them behind the scenes.

Savin Goyal [00:24:05]: And of course, at the end of it, you'd want to deploy these models somewhere so you can start generating business value from them. Then we start getting into the world of CI/CD. How do you bless a model as OK to roll out into production? How do you run A/B tests between different model variants, so you can collect more real-world performance data before deciding whether a model is ready to become the default experience for all your customers? We provide a bunch of tooling on that front as well. So, in a nutshell, Metaflow, our open-source project, has a bunch of functionality for taking care of this thick stack of ML infrastructure. If you're curious to know more, just search for Metaflow on Google, Perplexity, or whatever your favorite search engine is these days; I can't really assume Google still rules the roost. And then a quick plug for Outerbounds, which is the managed offering around Metaflow.
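Returning to the A/B testing question above: one common, generic way to judge whether a challenger model's conversion rate beats the current default is a two-proportion z-test. The sketch below is plain statistics, not a Metaflow or Outerbounds API. The promotion rule (the challenger must be better and significant at alpha = 0.05) and the traffic numbers are hypothetical choices for illustration.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (B minus A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # common rate under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


def should_promote(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Hypothetical 'blessing' rule: promote B only if it is better and significant."""
    z, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    return z > 0 and p_value < alpha


# Made-up traffic: default model A vs. challenger B, 5,000 sessions each.
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(round(z, 2), round(p, 4))              # 2.86 0.0042
print(should_promote(200, 5000, 260, 5000))  # True
```

In a real rollout, the sample size and alpha would be fixed before the test starts; checking the p-value repeatedly while traffic accumulates inflates the false-positive rate.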

Savin Goyal [00:25:22]: It takes care of all the management overhead. It runs in your cloud account, so all your data stays with you, and you pay the compute costs you incur directly to your favorite cloud provider. We can orchestrate compute across multiple clouds as well. The reality is that even if you're on AWS today, you may not get any GPU availability from AWS unless you have a special relationship with your AWS team. And if you're exploring a different cloud just to get access to GPUs, how do you connect your compute fabric together without exposing your data scientists and engineers to the messy realities of GPU availability today? That can be a big question, so we take care of it behind the scenes and move compute around intelligently for you. That's what Outerbounds does. Now, for folks who are curious about getting their hands on what I just spoke about, we have a Slack community.

Savin Goyal [00:26:25]: We have 3,000+ data scientists and ML engineers discussing these topics day in and day out. If you're interested, please join our Slack community. The URL is there, and if you scan this QR code, it should take you directly to Slack. Our documentation is hosted at docs.metaflow.org, which should give you a quick walkthrough of many of the features I skipped over in today's talk. Now, one interesting facet here is that all of these features are only useful if you can try them out in real life. But they also rely on other pieces of infrastructure that might be rather nontrivial to deploy if you're just evaluating whether Metaflow could be useful for you. So if you go to outerbounds.com, we offer free sandboxes with all the infrastructure deployed for you, so you can try out Metaflow.

Savin Goyal [00:27:24]: There are a few lessons as well that cover different use cases, including fine-tuning and RAG. That should get you started and quickly answer whether Metaflow would be useful for what you do day to day. So yes, there are plenty of tutorials on the Outerbounds website. And we have the Outerbounds platform; if any of you are interested in getting started with it, please reach out. If you go to our website, there's a sign-up link that will get you started. Last but not least, this talk was meant to be a very high-level overview of Metaflow. As I mentioned, Metaflow originated at Netflix, and in three weeks we are organizing a meetup with Netflix at Netflix HQ, where we'll go much deeper into how Netflix uses Metaflow.

Savin Goyal [00:28:26]: We'll also cover how other organizations have been using Metaflow and how we are thinking about advancing the state of the art in gen AI tooling. If you're interested in getting an invite, please join our Slack community. I was hoping we would have an invite link up and running by now; unfortunately, it isn't. But if you follow our LinkedIn page or are in our Slack community, you'll definitely come across the invite page as soon as it's ready. That's all I had today. Yes, please.

Audience Member: So I was wondering, what's the relationship...

Audience Member [00:28:58]: ...between Outerbounds and Netflix?

Audience Member [00:29:01]: It seems like this was originally an internal tool at Netflix. Are you now independent of Netflix?

Savin Goyal: Yes. Metaflow started at Netflix. We started working on Metaflow in early 2017, open-sourced it in 2019, and started Outerbounds in 2021. Outerbounds and Netflix are two different organizations, but Outerbounds focuses on furthering the development of Metaflow as well as building out the managed service around it.

Audience Member [00:29:35]: Yes, I used Metaflow a while back, and it seems like there are some cool new updates for running multi-node gang jobs on Kubernetes. You mentioned that it manages the infrastructure. I'm curious whether you take care of provisioning the GPUs with a cluster autoscaler or something like that, or does this assume I've already purchased GPUs I can run on?

Savin Goyal [00:30:01]: What we assume right now is that you have access to GPUs through a cloud provider. It could be any of the popular ones, or a new-age GPU cloud provider. As long as we have access to that cloud account, we can orchestrate on your behalf, so you don't have to worry about setting up Kubernetes clusters or any of that management. We get limited access to your cloud account so that we can, say, spin up a Kubernetes cluster and a data storage location, and then we do all of that choreography behind the scenes. Yes? So the question is, can you operate this on-prem? Yes, indeed you can.

Savin Goyal [00:30:45]: So you can basically mix and match on prem and cloud. It depends on what kind of functionality you want to use, and there are a few other things that we did not discuss here. But if you are running, let's say, workloads, then we can definitely bring your on-prem fleet together with your cloud fleet. Yeah, as I mentioned, deploying models has many different connotations. What we handle today is batch inference: if you have any batch inferencing needs, you can orchestrate that through our platform. If you have real-time inferencing needs, then we can do a very easy handover to any existing solution you may already have in your organization.

Audience Member [00:31:32]: There are a lot of new inferencing platforms emerging, like Together AI and Fireworks AI, but it seems like they're more focused on providing a UI for the compute layer, model training, and model serving. Would you say the main differentiation is Metaflow being this abstraction layer, with infrastructure as code in Python itself? Or do you see those companies...

Savin Goyal [00:31:59]: I think what those companies are currently focused on (I don't know their future roadmap) is hosting LLMs, and that by itself is a very deep engineering topic. Right. But how do you come up with those models in the first place, or do any kind of customization that you need? And in any organization you're also running a lot of data-intensive workloads. So how do you orchestrate all of that? That's what we are trying to solve today.

Audience Member [00:32:26]: And are you working with companies like Lambda Labs or Run:ai to integrate with those kinds of abstraction layers for GPUs?

Savin Goyal [00:32:33]: For example, we integrate with GPU providers like CoreWeave. So if you have access to GPU capacity from, let's say, your usual cloud vendors or any of these other GPU clouds, then we can definitely run your workloads on top of it.

Audience Member [00:32:49]: I had a very similar question. I was going to ask what the main differentiator is between Metaflow as an ML platform and others, but you kind of touched on that. I would like to know some of the biggest engineering or infrastructure challenges in developing an ML platform, in your opinion.

Savin Goyal [00:33:10]: Yeah, I mean, at the end of the day, what we want to solve for is providing a very simple and straightforward experience to data scientists, so that they can focus on the complexities of data science and not have to worry about the nitty-gritty of infrastructure. That's what we are focused on. And if you look at, let's say, this layer cake diagram, you can imagine there are interesting challenges all across it. A very simple example: GPUs are expensive, so you want to make sure that your GPUs are not idling. If you have to load a whole bunch of data from your cloud storage to your EC2 instances, how do you optimize for throughput? If you're using any of the popular frameworks today, that doesn't happen by default. And that can have a significant impact on how quickly you can experiment, because at the end of the day you also want to be in a flow state. If you are making changes to code that is very data intensive and needs to load a whole bunch of data, you wouldn't want to wait many minutes or hours just for the data to load before it gets processed. So that's one kind of problem. Another one is how you run multitenant workloads on top of your compute layer and still guarantee fairness. There you start getting into the nitty-gritty of workflow orchestration in many ways.
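To make the GPU-idling point concrete, here is a minimal Python sketch of one common remedy: overlapping downloads with compute by keeping several shard requests in flight, so the consumer rarely waits on storage. It is not Metaflow's or Outerbounds' implementation; `fetch_shard` is a hypothetical stand-in for a cloud-storage read, and `max_workers` and `prefetch_depth` are illustrative knobs.

```python
import time
from collections import deque
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Deque, Iterator, List


def fetch_shard(shard_id: int) -> bytes:
    """Hypothetical stand-in for downloading one data shard from cloud storage."""
    time.sleep(0.05)  # simulate network latency
    return f"shard-{shard_id}".encode()


def prefetched_shards(
    shard_ids: List[int],
    fetch: Callable[[int], bytes] = fetch_shard,
    max_workers: int = 8,
    prefetch_depth: int = 16,
) -> Iterator[bytes]:
    """Yield shards in their original order while later downloads run in the background."""
    remaining = iter(shard_ids)
    in_flight: Deque[Future] = deque()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Fill the pipeline before the consumer (e.g. a training loop) asks for data.
        for shard_id in remaining:
            in_flight.append(pool.submit(fetch, shard_id))
            if len(in_flight) >= prefetch_depth:
                break
        while in_flight:
            # Wait only for the oldest request; newer ones keep downloading meanwhile.
            shard = in_flight.popleft().result()
            # Top the pipeline back up with the next shard, if any remain.
            next_id = next(remaining, None)
            if next_id is not None:
                in_flight.append(pool.submit(fetch, next_id))
            yield shard
```

A training loop would iterate `for batch in prefetched_shards(ids): ...`, so downloads for later shards proceed while the current batch is being processed. Threads suit this case because the work is I/O-bound; real data loaders may also use multiple processes or framework-specific loaders.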

Savin Goyal [00:34:47]: And I wouldn't say it's a solved problem; depending on what you are trying to optimize for, there is plenty of headroom, and people are still trying to make progress. There is also an organizational problem that can be quite important at the end of the day, which is reproducibility. When I say reproducibility, of course there's the scientific concept: for you to trust your output, you need reproducibility, so that you can demonstrate it wasn't a fluke and that things can actually be regenerated and recreated from scratch as is. But even in an engineering context, these systems have a myriad of dependencies. They are stochastic in nature, and they can have very interesting failure patterns. In an organization, you can also have cascading dependencies: one team may be responsible for creating embeddings that some other team relies on, and so on. If any one of these workflows fails for whatever reason and you do not have reproducibility baked in, you would have a really hard time even replicating that failure scenario. Then you would end up violating all sorts of SLAs in terms of how quickly you can get that workflow up and running again, so that the teams that depend on you don't run into further delays. That can be a rather difficult problem to solve, because you are working at multiple layers from a reproducibility standpoint.

Savin Goyal [00:36:27]: Of course there's code, there's data, there's your compute infrastructure, and there are all sorts of third-party packages that you rely on, so you have to catalog those as well as make them available in a rerunnable manner. It's not enough to know what the constituents of any generated model were. You also need to be able to get very quickly to a spot where the exact same ingredients can be provided to a data scientist, so that they can actually replicate the exact same issue. So that's another problem that I would say is quite interesting.
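The reproducibility ingredients listed above (code, data, and third-party packages) can at least be pinned down by fingerprinting them together. The following is a minimal Python sketch under that assumption; Metaflow has its own mechanisms for snapshotting code, artifacts, and dependencies, and this is not how it implements them. `build_run_manifest` and `installed_versions` are hypothetical helpers, and a real system would also need to capture compute configuration and keep the inputs retrievable, not just identifiable.

```python
import hashlib
import json
import sys
from importlib import metadata
from typing import Dict, Iterable, Optional


def sha256_hex(payload: bytes) -> str:
    return hashlib.sha256(payload).hexdigest()


def installed_versions(packages: Iterable[str]) -> Dict[str, str]:
    """Record the installed version of each package, marking missing ones explicitly."""
    versions: Dict[str, str] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = "not-installed"
    return versions


def build_run_manifest(
    code: str,
    data: bytes,
    package_versions: Dict[str, str],
    python_version: Optional[str] = None,
) -> Dict[str, object]:
    """Hash every ingredient of a run so two runs can be compared or re-created."""
    manifest: Dict[str, object] = {
        "code_sha256": sha256_hex(code.encode("utf-8")),
        "data_sha256": sha256_hex(data),
        "packages": dict(sorted(package_versions.items())),
        "python": python_version or sys.version.split()[0],
    }
    # One fingerprint over the canonical JSON form of all ingredients:
    # if any input changes, the fingerprint changes too.
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    manifest["fingerprint"] = sha256_hex(canonical)
    return manifest
```

Storing a manifest like this alongside each run's outputs lets a team check whether a failing run and its re-run actually used the same ingredients before debugging further.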

Audience Member [00:37:05]: So there's the orchestration and compute. By any chance, at the Metaflow level or the Outerbounds level, do you override, say, autoscaling behavior or scheduling placement?

Savin Goyal [00:37:19]: Yes. Now, within Metaflow, of course, we can't be super opinionated about how you structure your infrastructure. So Metaflow is relatively unopinionated if, let's say, you're using Kubernetes for your compute tier (we provide a few other options as well). When it comes to Kubernetes, you are completely in control of what kind of autoscaling behavior you need. But within Outerbounds, we bring in our own expertise around what the best autoscaling behavior is. And the best autoscaling behavior is oftentimes a function of what you are really trying to optimize for. If you're trying to optimize for cost, that looks like a very different optimization function than if you are optimizing for machines always being available, so that you don't have to sit around waiting for a GPU instance to spin up and waste precious human cycles. That would be a different objective function.
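The trade-off between optimizing for cost and optimizing for availability can be written as two terms of one objective. Below is a deliberately simplified Python sketch, not Outerbounds' autoscaling logic: it assumes a fixed number of GPU jobs arrive per hour, that each job without a warm node waits one full cold start, and it prices that waiting with a hypothetical `human_hourly_cost`. All names and numbers are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ClusterAssumptions:
    jobs_per_hour: int          # GPU jobs arriving in a typical hour (assumed constant)
    gpu_hourly_price: float     # cost of keeping one GPU node warm for an hour
    cold_start_minutes: float   # how long a job waits when no warm node is free
    human_hourly_cost: float    # value placed on a data scientist's waiting time


def hourly_cost(warm_nodes: int, a: ClusterAssumptions) -> float:
    """Warm-pool spend plus the cost of people waiting on cold starts."""
    warm_pool_spend = warm_nodes * a.gpu_hourly_price
    jobs_waiting = max(0, a.jobs_per_hour - warm_nodes)
    waiting_cost = jobs_waiting * (a.cold_start_minutes / 60) * a.human_hourly_cost
    return warm_pool_spend + waiting_cost


def best_warm_pool(a: ClusterAssumptions, max_nodes: int) -> int:
    """Pick the warm-pool size in [0, max_nodes] that minimizes the hourly objective."""
    if max_nodes < 0:
        raise ValueError("max_nodes must be non-negative")
    return min(range(max_nodes + 1), key=lambda k: hourly_cost(k, a))
```

With `human_hourly_cost` set to zero, the objective is pure infrastructure cost and the best pool size is zero warm nodes. Raising it makes waiting expensive, and once a job's waiting cost exceeds the price of a warm node, the optimum shifts to keeping a node warm for every expected job.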

Audience Member [00:38:21]: So would that be using more software metrics?

Savin Goyal [00:38:25]: So what's the distinction between software metrics?

Audience Member [00:38:29]: And I think you can optimize for hardware efficiency as well, and then you can also optimize for more software metrics, or...

Savin Goyal [00:38:38]: Yeah, for, yeah, I mean token, but at the end of the day it's a mic, you.