Evaluating and Integrating ML Models

Speakers

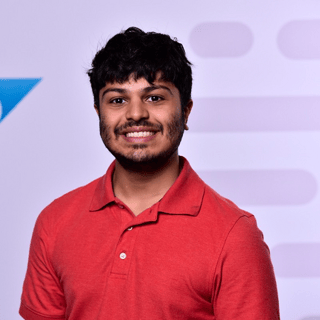

Anish loves turning ML ideas into ML products. He started his career working with multiple Data Science teams within SAP, working with traditional ML, deep learning, and recommendation systems before landing at Weights & Biases. With the art of programming and a little bit of magic, Anish crafts ML projects to help better serve our customers, turning “oh nos” to “a-ha”s!

Morgan is a Growth Director and an ML Engineer at Weights & Biases. He has a background in NLP and previously worked at Facebook on the Safety team where he helped classify and flag potentially high-severity content for removal.

At the moment Demetrios is immersing himself in Machine Learning by interviewing experts from around the world in the weekly MLOps.community meetups. Demetrios is constantly learning and engaging in new activities to get uncomfortable and learn from his mistakes. He tries to bring creativity into every aspect of his life, whether that be analyzing the best paths forward, overcoming obstacles, or building lego houses with his daughter.

SUMMARY

Anish Shah and Morgan McGuire share insights on their journey into ML, the exciting work they're doing at Weights & Biases, and their thoughts on MLOps. They discuss using large language models (LLMs) for documentation translation, pre-written code, and internal support, as well as the challenges of integrating LLMs into products, the need for real use cases, and maintaining credibility.

They also touch on evaluating ML models collaboratively and the importance of continual improvement. They emphasize understanding retrieval in RAG systems and balancing novelty with precision. This episode provides a deep dive into Weights & Biases' work with LLMs and the future of ML evaluation in MLOps. It's a must-listen for anyone interested in LLMs and ML evaluation.

TRANSCRIPT

Demetrios [00:00:00]: Hold up. Before we get into this next episode, I want to tell you about our virtual conference that's coming up on February 15 and February 22. We did it on two Thursdays in a row this year because we wanted to make sure that as many people as possible could come on each day, since the lineup is looking absolutely incredible. Let me name a few of the guests that we've got coming, because it is worth talking about. We've got Jason Liu. We've got Shreya Shankar.

Demetrios [00:00:36]: We've got Dhruv, who is in product for applied AI at Uber. We've got Cameron Wolfe, who's got an incredible podcast, and he's director of AI at Rebuy Engine. We've got Lauren Lochridge, who is working at Google, also doing some product stuff. Oh, why are there so many product people here? Funny you should ask, because we've got a whole AI product owners track along with an engineering track. And then, as we like to do, we've got some hands-on workshops too. Let me just tell you some of these other names, just so you know, because we've got them coming and it is really cool. I haven't named any of the keynotes yet either, by the way. Go and check them out on your own if you want.

Demetrios [00:01:20]: Just go to home.mlops.community and you'll see. But we've got Tunji, who's the lead researcher on the DeepSpeed project at Microsoft. We've got Holden, who is an open-source engineer at Netflix. We've got Kai, who's leading the AI platform at Uber. You may have heard of it. It's called Michelangelo. Oh my gosh. We've got Fazan, who's a product manager at LinkedIn.

Demetrios [00:01:46]: Jerry Liu, who created good old LlamaIndex, is coming. We've got Matt Sharp, friend of the pod, and Shreya Rajpal, the creator and CEO of Guardrails. Oh my gosh, the list goes on. There are 70-plus people who will be with us at this conference. So I hope to see you there. And now, let's get into this podcast.

Morgan McGuire [00:02:12]: I'm Morgan. I lead the growth ML team at Weights & Biases, and I drink my coffee as an Americano with a little bit of cold milk.

Anish Shah [00:02:22]: Hi, I'm Anish. I work as a growth machine learning engineer at Weights & Biases with Morgan. I prefer tea, such as oolong or chamomile.

Demetrios [00:02:34]: Welcome back to the MLOps Community podcast. I am your host, Demetrios, and you are in for a treat.

Demetrios [00:02:43]: Today we've got Morgan and Anish from Weights & Biases joining us to talk all about what they've been up to and how they're doing. I know their exact title is around growth, but I think they're more like DevRel. We talked about so many different pieces, from all the cool products they've been seeing put out to all the different projects that people using Weights & Biases are putting out. And as you will hear in this conversation, if you're using Weights & Biases, make sure to give them due credit; it's on their OKRs. So let's not let these folks down. Come on now, cite them in your papers. At the end of the conversation, we got into such a cool musing around evaluation as a service and hallucination mitigation as a service. And hopefully you've heard by now that I've got a little bit of a song that we dropped for the last AI in Production conference.

Demetrios [00:04:01]: This song is all about them prompt templates, and some of the lyrics are "evaluate me, make the AI, make sure the AI it is..." No. What did I say? I don't even know anymore. But we dropped a song. I've been threatening for ages to actually create something and put it on Spotify. I think it's been a good three years now. Back in the day, I wanted to create a song all about going real time.

Demetrios [00:04:30]: "We're going to real time." But I never got around to it. Today I can happily say that my time is best spent creating a funny song for you all. I hope it's one of those songs that's a banger and you'll listen to it whether or not you're into AI. But wow, if you are a nerd like myself and you can chuckle at lyrics like "my prompts are my special sauce," then this song is for you. I'll play it for you right now so that you can hear it and give me your feedback. And then let's get into the conversation with Anish and Morgan.

Demetrios [00:05:16]: Huge shout-out to Weights & Biases for continuing to sponsor the MLOps Community. It is a blast having the knowledge that they bring to this community.

Demetrios [00:06:03]: All right, let's listen to this song. And of course, if you like it, you know what to do: just stream it on Spotify on repeat. I get zero $0.02 for every stream, so I'm trying to fund my next startup with these. Let's go. Dude, I gotta start with this. Morgan, what's this breakfast radio show that you hosted in college?

Morgan McGuire [00:06:37]: Yeah, good question. So in third year of university, myself and a friend saw that the college radio had an opening for a breakfast radio show once a week, and we were like, we could do that. Let's try that. We just signed up. How hard can it be? He was the talker, and then we pulled in a third friend, so there were three of us. I'm definitely quieter, so I was more on the soundboard, hitting the play button, but yeah, it was a lot of fun.

Morgan McGuire [00:07:13]: We did it for about six weeks. My university does this charity fundraising week where the different societies do different events, and it's a lot of fun. There's a fair bit of drinking involved. So, coming into a breakfast show at half six in the morning after a night before, we had a pretty loose show. Nothing bad, I would say, but I think the editor at that point was kind of sick of us. So that was the end of our radio career. But I still have the recordings. I'm afraid to listen to them, but I might listen to them someday.

Demetrios [00:07:49]: And were you playing music or it was all talk show?

Morgan McGuire [00:07:53]: Yeah, music. As cheesy as we could make it: all the '90s and early-2000s cheesy hits.

Demetrios [00:08:03]: What were some of the bangers you were playing?

Morgan McGuire [00:08:06]: There was definitely a lot of Spice Girls, Boyzone, Britney, all the girl bands, all the boy bands. Gets you going in the morning.

Demetrios [00:08:14]: And this was not in the early 2000s, not when they were cool.

Morgan McGuire [00:08:20]: Yeah, 2007, I'd say. Yeah.

Demetrios [00:08:23]: All right. After their prime.

Morgan McGuire [00:08:26]: Yes, definitely.

Demetrios [00:08:28]: And Anish, man, tell me a little bit about chemistry and what happened. You were studying that and then you got sucked into the world of machine learning.

Anish Shah [00:08:38]: Yeah. So initially, when I started doing chemistry stuff, I loved the topics and I loved PChem as a concept too, so I joined a lab or two. Then I realized that the actual physical process of doing the science, like pipetting things or putting in lasers and other physical hardware, wasn't actually that fun. It wasn't really my type of science. But we used this really awful graphical programming language, I forget the name of it at this point, to analyze all the different experiments we were doing. And I really fell in love with that side of the world: connecting nodes that would then give you insight. For instance, data from three different lines of sensors goes into a specific database, and then you can graphically apply it to some pre-made algorithm that they had a symbol for. And I was like, oh, this is cool. I can actually understand what's going on. I realized this is the side of the world I like a lot more. So I got sucked into a whole bunch of data stuff and programming around that, and then naturally I was like, okay, time to give up chemistry.

Anish Shah [00:09:54]: It's time to do data- and programming-based things. And luckily, it was at the same time that my university started to really dive into more data-intensive classes, like data engineering and machine learning. So all the stars were lining up, and I was like, if I don't do this now, I'm going to miss an opportunity.

Demetrios [00:10:13]: It feels like it was meant to be. And now, for context, you're both working at Weights & Biases as machine learning engineers, and you're working with different customers that Weights & Biases has. Can you break down exactly what the job is? Because you're machine learning engineers, but it's a little bit internal and external facing, right?

Morgan McGuire [00:10:37]: Yeah, that's a good way to put it. The roots of our growth team started with doing events locally in SF. At the time, it was a one-person team; our leader, Lavanya, was running those, but also writing content for the blog and building integration examples. We've still stuck to those core pillars to drive people into Weights & Biases. More recently, over a year and a half ago now, we started a courses program, which has really taken off, and I would say that's our fourth pillar now. That has been super fun. The primary goal is just to deliver high-quality ML education. If it showcases Weights & Biases in the process, all well and good, but we're definitely trying to deliver the education and the value to folks first. Then we also help out internally across the sales side of the business.

Morgan McGuire [00:11:47]: That means jumping on user calls, or any debugging that our support team can't do, although I would say there's less of that now; our support team are legit and have grown a lot in the last year or two.

Anish Shah [00:12:02]: Yeah.

Morgan McGuire [00:12:03]: And then, because we're constantly focused on user growth and understanding where the field is going, we're quite active on Twitter and aware of what's going on there, keeping on top of new models and new architectures. So bringing that knowledge internally is important as well, to let the product team know that, hey, large multimodal models are coming, and we need something for text-to-image or text-to-video, not just LLMs this year. Those kinds of insights are something I see our team doing well. Anish, did I miss anything?

Anish Shah [00:12:40]: Yeah, I think you hit the nail on the head. A big part of what I see myself doing is interacting with the community a lot. As Morgan mentioned, the community builds really cool stuff with or without Weights & Biases. And we, as an MLOps platform, really have to make sure that we're on top of our game to understand, oh, these are actual projects people are building and using in the real world. So let's make sure that we share that knowledge with the world in really useful ways.

Demetrios [00:13:07]: Yeah, I feel like at a different company you would be called DevRel, but at this one you're called something else, even though you're doing kind of the same thing. So it's cool to see that you're getting out there and getting that experience with what people are building in the community. Can you name some of the stuff you've been seeing that you're excited about?

Anish Shah [00:13:30]: I would say the most recent thing that I think is really interesting is from the Allen Institute folks. I believe they released OLMo. I like that project quite a lot because one thing Weights & Biases does well is this whole reproducibility piece, and the core tenets of this framework are to be low carbon emissions, really reproducible, and extremely shareable. They also use Weights & Biases to share all of that out to the world. So it's nice to see frameworks that will actually move the science of a lot of this large language model stuff forward, which I think the open-source models are doing a great job of, and that make sure people aren't repeating work or repeating frameworks such that they all diverge and there's no standardization. So OLMo is definitely something I think is really cool.

Morgan McGuire [00:14:22]: Yeah, I'd add the CleanRL benchmark by Costa Huang, which just came out, I think, yesterday on arXiv. He's at Hugging Face now, but he actually interned at Weights & Biases over a year ago. That's a really nice project, I think, because we see a huge number of researchers use Weights & Biases. Sometimes they cite us, sometimes they don't. We'd love it if more people cited us; we can send you the citation if you're curious. But what was really interesting with...

Demetrios [00:14:59]: Is that an OKR?

Morgan McGuire [00:15:00]: It might be this year.

Demetrios [00:15:03]: That's why. Yes. Everyone out there, cite these folks. Make sure you do that so that Morgan and Anish can crush their OKRs.

Morgan McGuire [00:15:11]: Exactly. Yeah.

Demetrios [00:15:13]: Before we get into this, what is CleanRL? I didn't see that one.

Anish Shah [00:15:16]: Yeah.

Morgan McGuire [00:15:17]: So it's a project Costa has been running for a while. The goal is to have really clean implementations and evaluations of standard RL models and benchmarks. The nice thing with the library is that, similar to AllenAI's project, they're going for extreme transparency and reproducibility. They've also released everything. They're less focused on releasing model weights; that's not the aim of the project. It's more focused on evaluation. But they have it all completely instrumented in Weights & Biases. Their Weights & Biases project is completely open, so you can go and poke around and see how different evaluations are doing.

Morgan McGuire [00:16:10]: I was just skimming the paper yesterday, and one of the things it emphasizes is that they're also making the actual loss curves and things like that available. So not just the final metric, but how the model actually got there. That was a pretty cool project to see, especially given the resurgence of interest in RL with all the PPO and DPO going on with LLMs. It was nice to see it finally come out. It was a big collaboration as well. If you look at the paper, there are maybe 30 or 40 authors. So it felt like a really nice community effort from the RL community as well.

Demetrios [00:16:56]: Yeah, you know that's a big one when the author list takes up a full page by itself. Then you're in for a treat.

Morgan McGuire [00:17:05]: Yeah, exactly.

Demetrios [00:17:08]: So talk to me a little bit about how you all are thinking about LLMs internally at Weights & Biases. Before we hit record, we were chatting about how you're seeing what's out there. You're getting to experience the cutting edge and the cool things that are happening, but you also get to potentially affect the Weights & Biases product, or just make sure that Weights & Biases itself is ahead of the curve when new trends come out. So how are you currently thinking about LLMs internally?

Anish Shah [00:17:49]: Yeah, so I think there's a variety of places where LLMs work really well. For instance, Morgan worked on a quick win using an LLM to help clean up some dirty data. So it's about understanding where LLMs can actually be effective or, at the very least, create a nice launching point for people to access knowledge or start work in a way that just makes their life easier. On our side, things such as creating better translations of certain documentation, or creating early PRs with some pre-written code in the right locations, are places where I think LLMs definitely help out quite a lot. And one project we love to talk about a lot is wandbot, our internal support bot. It interacts with a lot of different public-facing Weights & Biases data sources, like our documentation, so that when anyone comes in and asks about really proprietary Weights & Biases knowledge that, unfortunately, GPT is not trained on yet, it can still answer, using some really complicated RAG pipelines.
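To make the retrieval step concrete, here is a minimal sketch of the retrieve-then-prompt pattern behind a RAG support bot. It is not wandbot's actual pipeline: it assumes a tiny in-memory list of documentation snippets and uses bag-of-words cosine similarity as a stand-in for embedding search. The names `retrieve` and `build_prompt` are hypothetical, and the resulting prompt would be sent to whatever chat model you use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase alphanumeric tokens; a real system would embed text instead.
    return re.findall(r"[a-z0-9_]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank every snippet by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(query: str, contexts: list[str]) -> str:
    # Ground the model in the retrieved snippets and let it say it doesn't know.
    context_block = "\n".join(f"- {c}" for c in contexts)
    return (
        "Answer using the documentation below. If it does not contain the answer, say so.\n\n"
        f"Documentation:\n{context_block}\n\nQuestion: {query}\nAnswer:"
    )


# Toy documentation snippets, for illustration only.
docs = [
    "wandb.init starts a new run and returns a Run object.",
    "wandb.log records a dictionary of metrics for the current run.",
    "Artifacts let you version datasets and models.",
]
query = "How do I log metrics to my run?"
print(build_prompt(query, retrieve(query, docs, k=1)))
```

Swapping `tokenize` and `cosine` for an embedding model and a vector index is the usual next step; the prompt-assembly step stays the same.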

Demetrios [00:19:04]: So if I'm understanding this correctly, it's more about how you can help plug in LLMs, or let users sync their LLM usage with what you're creating, as opposed to putting an LLM under the hood so people now have text-to-SQL or something that they maybe don't really need or won't use much. Unless it's something like what you're talking about with wandbot, where, yeah, that's a perfect use case for an LLM. Is that a correct assumption, or are you also thinking about it as, let's plug an LLM into the product and see how we can do that?

Morgan McGuire [00:19:46]: Yeah.

Anish Shah [00:19:46]: I think it's kind of a mixture of both, and one feels more experimental than the other. From our perspective, the biggest thing is making sure that our large language model usage is actually effective, and there are multiple pieces to building effective LLM-based applications. Part of it is ensuring that the people using it get to interact with it in the way that makes the most sense for them. Sometimes that means the LLM is the driving layer at the very bottom that does the majority of the work. Other times it's as simple as letting the LLM be a single piece in the workflow that the user can interact with and turn off or on, depending on how that workflow is being used, be it docs translation or imputing or cleaning dirty data.

Morgan McGuire [00:20:52]: I think a lot of people are asking themselves, and I definitely am, how many killer product use cases there are for LLMs. There are some with obviously incredible product-market fit, like Copilot and Cursor [inaudible], and a few companies, I think even in the legal space, are doing some amazing work. But when I look at our product and our business, it feels like a lot of the value of LLMs, at least in the shorter term, is in back-office operations: making things a little bit more efficient and productive and allowing people to scale more. That's where all the quicker wins are. We have bounced around ideas about where it would be fun and interesting to add it to the product, and there are some interesting use cases there, but I don't think any of them are revolutionary. I guess I shouldn't speak on behalf of our product team. Maybe they're cooking up something wild.

Morgan McGuire [00:21:59]: But in general, you see a lot of products try to shoehorn an LLM in just to ride the hype. It doesn't work in a lot of cases; people haven't found the right way to really nail it, I find.

Demetrios [00:22:13]: Yeah, and I feel like there's less of that now, because people have had experiences where they're like, oh, we're just going to plug AI into our product and now it's revolutionary. Then, when you actually use it, you realize that it's still a chatbot, it still doesn't understand me half the time or it hallucinates half the time, and it's actually not the best experience, so I would rather have a different user experience. And I like that you all are taking more of the approach of, well, we have all these hammers, but do we need to go around looking for nails? Maybe not.

Morgan McGuire [00:22:55]: Yeah, exactly. The other impetus in our team for building out these tools is just to build a muscle. We have a great machine learning team. Everyone has backgrounds in ML from academia and industry and has been doing it for years. But this is new, right? So I wanted to make sure the team builds that muscle through real use cases, not just toy examples on the latest open-source model. Ultimately, we care about solving a task, be it translating the docs or cleaning this little bit of data or whatever. So you want to use the best models and the best techniques for that, as opposed to doing a fun fine-tune of a Llama 2 model that, you know, is kind of garbage compared to GPT-4 at the end of the day.

Demetrios [00:23:51]: Yeah, it's not actually that.

Morgan McGuire [00:23:53]: Looking forward to Llama 3, for sure.

Demetrios [00:23:57]: Yeah, let's see what happens in a few months or a year. But I do like that it's like, hey, let's look at the value that we can bring, not just, is this going to get a bit of attention on Twitter for a...

Morgan McGuire [00:24:13]: And in terms of our work on the growth side of things, it builds credibility in the long run to be able to point to actual things you've put into production. So, yeah, it works for multiple reasons for us, I think.

Demetrios [00:24:33]: And what about this data cleanup that you're using, that use case? Can you give me more details on what that looks like?

Morgan McGuire [00:24:41]: Yeah, that's just a tiny one. I shipped a small tool, and I know someone on our data team has also shipped something similar. It's super straightforward: we have some user-facing forms where users can enter the country they're in. In the back end, our CRM has a standardized list of countries, so we just need to translate "USA" to "United States of America", or "Antarctica" to "Unknown", because people put in lots of different stuff there. It's complete overkill for GPT-4, but we use it anyway because the volume of requests is pretty manageable, so it's still pretty cheap. And again, going back to reliability and performance: I joke that we were promised AGI and we got country standardization, but it works. It works much better than a big, complicated regex or a person having to manually fix them.

Morgan McGuire [00:25:45]: So we ship it, we do it, we move on.
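The country clean-up boils down to asking a model to pick from a closed list and rejecting anything else. A minimal sketch, assuming a hypothetical `call_llm(prompt) -> str` function that wraps whichever chat model you use (GPT-4 in the episode) and an illustrative subset of canonical values; the real CRM list and prompt are not described in the episode.

```python
from typing import Callable, Sequence

# Illustrative subset of a CRM's canonical country values (assumption).
CANONICAL: tuple[str, ...] = (
    "United States of America",
    "United Kingdom",
    "Ireland",
    "Germany",
    "Unknown",
)


def country_prompt(raw: str, allowed: Sequence[str]) -> str:
    # Ask for exactly one value from the closed list, nothing else.
    options = "\n".join(allowed)
    return (
        "Map the user's country entry to exactly one of the allowed values, "
        "or 'Unknown' if none apply. Reply with the value only.\n\n"
        f"Allowed values:\n{options}\n\nUser entry: {raw}"
    )


def standardize_country(
    raw: str,
    call_llm: Callable[[str], str],  # hypothetical wrapper around a chat model
    allowed: Sequence[str] = CANONICAL,
) -> str:
    cleaned = raw.strip()
    # Cheap path: entries that already match a canonical value skip the LLM call.
    for value in allowed:
        if cleaned.casefold() == value.casefold():
            return value
    answer = call_llm(country_prompt(cleaned, allowed)).strip()
    # Guardrail: any reply outside the closed list falls back to "Unknown".
    return answer if answer in allowed else "Unknown"
```

Keeping the exact-match shortcut and the closed-list check outside the model means the worst case this code allows is an `Unknown`, never a new free-text value in the CRM.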

Demetrios [00:25:47]: Now, there is a topic that I think is top of mind for so many people, and it came up recently when I was talking to your coworker, Alex. It has also been on my mind for months, because we did an evaluation survey in the MLOps Community and got a ton of responses. We're also doing another one right now and getting all kinds of great responses. I wrote a blog post on the first one, and all of that data is open source, so if anybody wants to see it, they can. For the new responses, we're going to get an incredible report made. I want to know how you all, as machine learning engineers out there in the trenches, are thinking about evaluation, because it feels to me like it's still the dark arts. It depends on what your use case is, a lot of times it's really not clear, and you still can't trust an LLM.

Demetrios [00:26:56]: But how are you all thinking about it?

Anish Shah [00:26:59]: Yeah, so I think the hard part is that, as we've all probably heard, a lot of the evaluation data used for standard benchmarks has leaked into the training data itself. So one thing I always think about when I go into evaluation is how much I actually trust the novelty of the underlying data being used for the evaluation. I also think it's about making sure you hit the breadth of different topics that you want to evaluate over. One project I think is great is the LM Evaluation Harness, which lets you test across a bunch of different datasets. In a lot of the projects and models I've seen trained or fine-tuned, the one visualization I think is pretty effective is the radar chart: consistently looking at the same set of datasets as you compare the different models you're training, or as you check the efficacy of fine-tuning against, say, GPT. It's important to pick datasets that are appropriate for the different tasks you're solving for, and then see how your model compares to the other models you want to be better than. It's very easy to go to the Open LLM Leaderboard, find the one dataset that a specific model is not good at, and fine-tune toward it to make it slightly better there. But that doesn't automatically mean it's a better model in general.
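The radar-chart idea amounts to always scoring models on the same fixed set of tasks and reading the per-task deltas rather than a single headline number. A minimal sketch with made-up scores (not real results) for illustrative benchmark names; in practice the numbers would come from a harness run, and the per-task dict is what you would plot on the radar chart. `compare_models` and its `tolerance` parameter are hypothetical.

```python
from statistics import mean


def compare_models(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.005,
) -> dict[str, list[str]]:
    # Only compare tasks both models were scored on, in a stable order.
    tasks = sorted(baseline.keys() & candidate.keys())
    return {
        "improved": [t for t in tasks if candidate[t] > baseline[t] + tolerance],
        "regressed": [t for t in tasks if candidate[t] < baseline[t] - tolerance],
    }


# Made-up numbers purely for illustration.
baseline = {"arc": 0.60, "hellaswag": 0.80, "mmlu": 0.55, "truthfulqa": 0.45, "gsm8k": 0.30}
candidate = {"arc": 0.58, "hellaswag": 0.79, "mmlu": 0.54, "truthfulqa": 0.60, "gsm8k": 0.25}

print(f"mean: {mean(baseline.values()):.3f} -> {mean(candidate.values()):.3f}")
print(compare_models(baseline, candidate))
```

With these toy numbers the average goes up even though four of the five tasks regress, which is exactly the trade-off a single leaderboard number hides.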

Anish Shah [00:28:39]: That's why, for me, the whole radar concept of covering different topics, datasets, and tasks is important for evaluation. You then need to maintain it across all your different experiments and ask yourself whether a dataset is still valid after all these iterations of models exist, and whether the training and fine-tuning data underlying them has, in a way, slightly compromised it, not to be too negative about it.
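One rough way to ask whether a dataset is still valid is an n-gram overlap check between evaluation examples and whatever training or fine-tuning text you can inspect. A minimal sketch, assuming you have that text locally (often impossible for closed models) and that a shared word n-gram is a good-enough contamination signal; exact overlap misses paraphrased leakage, and `n` is a tunable assumption. `contamination_report` is a hypothetical helper.

```python
import re


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    # Lowercased word n-grams; texts shorter than n yield an empty set.
    tokens = re.findall(r"\w+", text.lower())
    return {tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1)}


def contamination_report(
    eval_examples: list[str], training_texts: list[str], n: int = 8
) -> tuple[float, list[str]]:
    # Build one set of every n-gram seen in the training text.
    train_ngrams: set[tuple[str, ...]] = set()
    for text in training_texts:
        train_ngrams |= ngrams(text, n)
    # Flag any eval example that shares at least one n-gram with the training text.
    flagged = [ex for ex in eval_examples if ngrams(ex, n) & train_ngrams]
    rate = len(flagged) / len(eval_examples) if eval_examples else 0.0
    return rate, flagged


# Toy data; n=3 only because the strings are short.
evals = ["What is the capital of France?", "Name a prime number greater than ten."]
train = ["Trivia night: what is the capital of France? Paris."]
rate, flagged = contamination_report(evals, train, n=3)
print(rate, flagged)
```

Re-running a check like this whenever the fine-tuning data changes is one way to keep the "is this dataset still valid?" question from becoming a one-off.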

Morgan McGuire [00:29:11]: Yeah, another thing with a lot of folks looking to use LLMs in practice, especially open-source LLMs, is that I'm not sure how closely they actually look at or understand the different benchmarks out there. Do they even know what the MMLU task, or set of tasks, is? Does it matter that their model can do multiple choice well if it's a text-to-SQL model at the end of the day? And it's hard, right? Especially if you're a software developer coming into the space, it's super intimidating. You're not going to go to the Hugging Face leaderboard, look at the four or five metrics there, go and read those papers, look at a bunch of the examples in the evals, and then pick your model based on that, right? You're probably just going to pick the model that's highest on the leaderboard given your capacity to serve it, or fine-tune and serve it. You could argue that rigorous teams should do all that work, but they're not ML engineers. At the end of the day, they're just trying to add another building block to their product. So that's super hard as well. And then there's the whole gaming of leaderboards and benchmarks on top of that, either intentional or accidental, which is definitely an issue.

Morgan McGuire [00:30:44]: But one thing I noticed that was quite interesting when we were evaluating our support bot, going back to the radar charts Anish mentioned: it's good practice to evaluate your RAG system on a number of different aspects, not only answer correctness or accuracy, but also how well the retriever is doing. When we moved from GPT-4 Turbo, the November preview, to the January preview model, the context precision of the RAG system actually went down. That's a measure of how well the system paid attention to the context it was given when it output the final answer. That metric went down, even though our other metrics, like answer correctness and the ultimate results we care about, went up. It was interesting because there's so much code and knowledge about Weights & Biases online that has all been scooped up. You ask ChatGPT about Weights & Biases and it'll give you a pretty good answer for a lot of questions without plugging into our RAG system. But it was interesting to see that the January model knew when to ignore the context.

Morgan McGuire [00:32:29]: Sometimes we just didn't have enough relevant context. For example, maybe we have a gap in our docs, but we still retrieved a few samples even though they were low quality. The January preview knew to ignore those samples, which hurt it on context precision but ultimately led to a better final answer, because it was like, nah, I know better than this, I'm just going to answer from my internal knowledge instead. So that was a fun little insight we got from doing some of our evaluations.
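To see why ignoring weak chunks lowers context precision even when the answer improves, here is a simplified, rank-weighted version of the metric along the lines of the formula the Ragas documentation describes: average precision@k over the ranks where a retrieved chunk was judged useful. This is a sketch, not Ragas's implementation; Ragas obtains the usefulness verdicts from an LLM judge, whereas here they are passed in as hand-labelled 0/1 values.

```python
def context_precision(relevance: list[int]) -> float:
    """Rank-weighted context precision (simplified sketch).

    relevance[k] is 1 if the chunk retrieved at rank k + 1 was judged useful
    for the answer, else 0.
    """
    total_relevant = sum(relevance)
    if total_relevant == 0:
        return 0.0
    hits = 0
    score = 0.0
    for rank, useful in enumerate(relevance, start=1):
        if useful:
            hits += 1
            score += hits / rank  # precision@rank, counted only at useful ranks
    return score / total_relevant


# Useful chunks at the top vs. a single useful chunk at rank 3.
print(context_precision([1, 1, 0]))  # 1.0
print(context_precision([0, 0, 1]))
```

If the retriever returns three low-quality chunks and the model rightly ignores all of them, every verdict is 0 and the score is 0.0 even when the final answer is correct, which is why the metric is worth reading alongside answer correctness rather than alone.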

Demetrios [00:33:00]: That brings up a fascinating point that I've been thinking about a lot lately, especially when it comes to RAG: how you evaluate the retrieval side. It feels like there was something baked into the January update that says, "I know better than you, so I'm not even going to retrieve." How can you even evaluate that part? You need to be able to evaluate the retrieval side so that you get better answers at the end of the whole RAG pipeline, after the generation, right? I feel like that's one of the foundational pieces, because if it doesn't retrieve the right thing, the output has a higher probability of being wrong.

Morgan McGuire [00:33:58]: Yeah, I think we're in a lucky position. Like I said, there's so much good Weights & Biases content online that's been baked into these models that it's almost like a bonus: the model seems to pay attention when the context is decent and ignore it when it's bad. That's anecdotal; we haven't studied it properly. But yeah, I agree. If this was a system that...

Demetrios [00:34:21]: This is just looking at a few metrics, right? This is you looking at a few metrics on the final output and saying, well, it didn't actually retrieve, but the output was still quality. So it doesn't matter whether it retrieved or not.

Morgan McGuire [00:34:37]: Yeah, exactly. We do need to dive deeper into the individual samples where it did poorly on context precision and get a better feel for what's going on. Short term, it feels like a nice win, but this isn't a sustainable way to improve our system. We can't rely on GPT-5 scooping up all the good stuff next time

Demetrios [00:35:00]: ...around. Or if you're trying to help others who are in this situation but don't have a bunch of information on the internet, it's like, oh yeah, well, we didn't have that problem because we've got all kinds of great material that GPT trained on, right? So how do you lend a hand to those in need?

Morgan McGuire [00:35:20]: Exactly. So we do track, yeah, context precision, context recall, faithfulness, and... Anish, maybe you know the other one? There's faithfulness, and relevancy, I think, is the other one. There are a lot of blog posts out there at this point running through them.

Anish Shah [00:35:47]: They gave it a name, Ragas or something. I've seen that name come around. Yeah, I think the four things you mentioned are the four tenets of Ragas, or whatever.
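Tracking several metrics per run, as on a radar chart, is what makes a regression like the context-precision drop visible while the headline metric improves. A minimal sketch, assuming each run's scores are stored as a plain dict keyed by metric name; the key names (loosely following the Ragas metric names) and the helper functions are hypothetical.

```python
from typing import Dict, List


def metric_deltas(baseline: Dict[str, float], candidate: Dict[str, float]) -> Dict[str, float]:
    """Per-metric change from baseline to candidate (positive means improved).

    Only metrics present in both runs are compared; results are sorted by name.
    """
    shared = baseline.keys() & candidate.keys()
    return {name: round(candidate[name] - baseline[name], 4) for name in sorted(shared)}


def regressions(deltas: Dict[str, float], tolerance: float = 0.0) -> List[str]:
    """Names of metrics that dropped by more than `tolerance`."""
    return [name for name, delta in deltas.items() if delta < -tolerance]
```

Comparing, for example, `{"answer_correctness": ..., "context_precision": ..., "faithfulness": ...}` across two model versions would flag `context_precision` as a regression even when `answer_correctness` goes up, which is the situation Morgan describes.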

Morgan McGuire [00:35:57]: Yeah, we actually use Ragas for some of those evals. Brad on my team kind of runs Wandbot, and I know he's used it. That would be where I would start to improve the system. And then it's like anything in ML: at the end of the day, you just need to be looking at a lot of data, a lot of samples. One thing we did through Wandbot's generations is that we're now on our second evaluation set. For the first one, we took a bunch of queries from the wild, and I clustered them.

Morgan McGuire [00:36:36]: We found representative questions from each cluster, and that gave us about 130 or 140. We ran some evaluations on that. But we looked at the evaluation set again a couple of months later and noticed some of the questions were duplicated or less relevant. So we've actually whittled that down to, I think, 98 now, and it's probably a higher-quality eval. We've moved from 50% to 80% on that eval, and we want to get it above 90% as we scale it out to a broader audience. But once we get over 90%, we want to go back, look at our evaluation set, and make it harder again. I feel like getting to 90, 95, or 99 isn't irrelevant,

Morgan McGuire [00:37:25]: Right? But you want to make sure that you're not just hitting numbers that look pretty on a report, but actually nailing all those hard cases and keeping your evals challenging.
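The 90% target, and the idea of making the eval set harder once it is reached, can be written as a simple gate. This is a sketch: `pass_rate` and `should_harden_eval` are hypothetical helpers, and the 0.9 default just mirrors the number mentioned in the conversation. On the 98-question set mentioned above, 90% requires at least 89 correct answers, since 88/98 is about 89.8%.

```python
from typing import Sequence


def pass_rate(results: Sequence[bool]) -> float:
    """Fraction of eval questions judged correct."""
    if not results:
        raise ValueError("empty evaluation set")
    return sum(results) / len(results)


def should_harden_eval(results: Sequence[bool], threshold: float = 0.9) -> bool:
    """True once the system clears `threshold`: a signal to revisit and toughen the eval set."""
    return pass_rate(results) >= threshold
```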

Anish Shah [00:37:41]: Yeah, one thing I do want to expand on: I really liked the approach taken with Wandbot for the whole evaluation piece, and it's what most people should be doing when they build these applications. You don't necessarily know how your users will actually interact with the LLM-based application you're building. So the most important thing is to gather data from people actually using that application and then condense it with standard user-data mining techniques. On our end, we used clustering to figure out which topics people were asking about (especially for a support bot, which I think is a common use case), chose the question that's most representative of each topic, and then built it into the evaluation framework itself. So I definitely think every company using LLMs should do what they've been doing with a lot of their other data products: make sure they're actually mining the user data for the LLM application and incorporating it into their evaluation framework.
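The speakers don't say which clustering method they used, so the following swaps in a deliberately simple one: single-pass "leader" clustering on word-overlap (Jaccard) similarity, then picking each cluster's medoid as its representative question. A production setup would more likely embed queries with a sentence-embedding model and use something like k-means or HDBSCAN; the function names and the 0.3 threshold are assumptions for illustration.

```python
import re
from typing import List, Set


def tokens(text: str) -> Set[str]:
    """Lowercased word set; a crude stand-in for a real embedding model."""
    return set(re.findall(r"[a-z0-9_]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster_queries(queries: List[str], threshold: float = 0.3) -> List[List[str]]:
    """Single pass: each query joins the first cluster whose leader is similar enough."""
    clusters: List[List[str]] = []
    leaders: List[Set[str]] = []
    for query in queries:
        query_tokens = tokens(query)
        for i, leader in enumerate(leaders):
            if jaccard(query_tokens, leader) >= threshold:
                clusters[i].append(query)
                break
        else:
            # No similar cluster found: this query starts (and leads) a new one
            clusters.append([query])
            leaders.append(query_tokens)
    return clusters


def representative(cluster: List[str]) -> str:
    """Medoid: the query with the highest total similarity to the rest of its cluster."""
    toks = [tokens(q) for q in cluster]

    def total_similarity(i: int) -> float:
        return sum(jaccard(toks[i], toks[j]) for j in range(len(cluster)) if j != i)

    return cluster[max(range(len(cluster)), key=total_similarity)]
```

Running `representative` over every cluster yields one candidate question per topic, which is roughly the shape of the first Wandbot evaluation set described above; the manual review that later removed duplicates is still needed.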

Demetrios [00:38:42]: And when you're mining that data, are you also making decisions on, oh, maybe we should update the docs so that people don't even have to ask this question? Or maybe we should change the product a little bit so that this is more intuitive.

Anish Shah [00:38:57]: Yeah, for sure. I think the evaluation piece isn't even the only place where we see that. Because our docs bot is in our Slack channel, it's very easy to see directly: hey, the answer being returned is either old information that needs to be updated, or it's not retrieving the right thing. That's one reason we cared about the retrieval: is it grabbing the right context? And if it's not grabbing that context, that means it doesn't exist in our docs. So it definitely informs how we should be updating and thinking about a lot of our documentation.
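The observation that a missing retrieval hit points to a documentation gap can be turned into a triage list. A minimal sketch, assuming the retriever returns `(chunk_id, similarity)` pairs per question; `flag_doc_gaps` is a hypothetical helper, and the `min_score` cutoff of 0.5 is a placeholder that would need tuning against real similarity scores.

```python
from typing import Dict, List, Tuple


def flag_doc_gaps(
    retrievals: Dict[str, List[Tuple[str, float]]],
    min_score: float = 0.5,
) -> List[str]:
    """Return questions whose best retrieved chunk scores below `min_score`.

    `retrievals` maps each user question to (chunk_id, similarity) pairs from the
    retriever. A weak best match is only a hint that the docs may not cover the
    topic; a human still needs to confirm the gap.
    """
    gaps = []
    for question, hits in retrievals.items():
        # Questions with no hits at all count as a best score of 0.0
        best = max((score for _, score in hits), default=0.0)
        if best < min_score:
            gaps.append(question)
    return gaps
```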

Morgan McGuire [00:39:35]: Oh, that's cool.

Demetrios [00:39:36]: Yeah, that feels like a supercharger for the docs and the whole DevRel effort.

Morgan McGuire [00:39:44]: Yeah. Demetrios, I want to put you on the spot. There's a funny case I see, and I'd like to pick your brain on the evaluation side. Wandbot occasionally returns an answer, usually when the answer requires some code that maybe isn't immediately obvious from the docs, that isn't wrong but isn't the preferred way to do it. It basically finds workarounds that use the API in four or five lines of code instead of the one method it missed for whatever reason. How would you classify that? Correct? Incorrect? Do we need an okay-ish category?

Demetrios [00:40:30]: Yeah, I would say that's incorrect. Right. Because there's a better way to do it. So you're like teaching people how to be worse engineers in a way.

Morgan McGuire [00:40:39]: Even if it gets them to the results they're looking for?

Demetrios [00:40:42]: Yeah, I guess at the end of the day, that is true. It's like, what are you aiming for? It's that constant trade-off. This is a great question to think about, because it's the trade-off of: do we ignore tech debt and just move fast, figuring it out later if we find product-market fit in six months? Or do we want to build for scale and have everything bulletproof? What phase are you in? Depending on what phase you're in, I think you'll take that answer in a different way.

Morgan McGuire [00:41:24]: Yeah, that's actually a good point, looking at it in terms of our development cycle.
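One way to encode the "okay-ish" answer together with the phase-dependent judgment discussed above is a three-level rubric whose partial credit depends on the development phase. Everything here (the `Grade` labels, the phase names, and the 1.0/0.5/0.0 credits) is a hypothetical illustration, not how Wandbot is actually graded.

```python
from enum import Enum
from typing import Sequence


class Grade(Enum):
    CORRECT = "correct"
    WORKS_BUT_NOT_IDIOMATIC = "okay-ish"  # right result, but not the preferred API usage
    INCORRECT = "incorrect"


# Hypothetical credit for an okay-ish answer: generous while prototyping, none in production.
OKAYISH_CREDIT = {"prototype": 1.0, "scaling": 0.5, "production": 0.0}


def rubric_score(grades: Sequence[Grade], phase: str) -> float:
    """Mean credit over graded answers, with okay-ish credit set by the development phase."""
    if not grades:
        raise ValueError("no graded answers")
    credit = {
        Grade.CORRECT: 1.0,
        Grade.WORKS_BUT_NOT_IDIOMATIC: OKAYISH_CREDIT[phase],
        Grade.INCORRECT: 0.0,
    }
    return sum(credit[g] for g in grades) / len(grades)
```

Keeping the okay-ish label separate, rather than folding it into correct or incorrect at grading time, means the same labeled eval set can be rescored later when the team's phase changes.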

Demetrios [00:41:30]: Yeah. The other piece you touched on, which I think is fascinating to zoom into, is that really diligent teams should be looking not only at which model is best on the leaderboard, but also at what these leaderboards are. What do these data sets even mean? Then you go out, create your own data set, and see how it matches up. And one thing I think people aren't doing enough, or I haven't seen enough of, is sharing data sets, or data sets as a service, or evaluation data sets as a service. Because as soon as a data set is public, people start gaming it. But if you're able to send your fine-tuned model to an evaluation data set as a service and see how it stacks up, that could be interesting, because it's a third-party service and you don't have to worry that it's been gamed as much as an open data set. Maybe you guys have seen something like that. I feel like I've seen one or two people release data sets for specific verticals, like new data sets for financial data, and then their company is like, oh yeah, we're an evaluation company.

Demetrios [00:43:20]: And I've also heard a lot of debate around whether evaluation is a full-fledged product or just a feature inside a product. So these are all things I often wonder about.

Anish Shah [00:43:37]: Off the top of my head, the skeptic in me says it's a hard sell to go and use that service, only because we can't see the data. It's hard to judge the credibility of the underlying data source unless they give me a really deep understanding of what types of data live in that data set, in which case they'd be giving away the secret sauce. So I think it's very hard to ask a lot of machine learning engineers, data people, or even security folks (the people making sure this LLM is ready to be touched by customers) to go ahead and say, oh yeah, I'm going to trust the results of this third-party service that won't tell us anything about its data beyond what its marketing page says. So while I think evaluation as a service is an interesting concept, and we might even see such services coming out here and there, since data is a valuable resource in itself, I just think it's going to be a hard sell, for me at the very least.

Demetrios [00:44:44]: And what about if it's more of an "okay, you submit your model and then we evaluate it together" type of thing? So it's not like you throw the model over the fence and I give you back a score. You throw your model to me, but then you get this dashboard where I've evaluated it in every way. I also know you're going to use it for this specific use case, so I wouldn't recommend it for these parts. Kind of like red teaming, in a way. Red teaming as a service. I think there are a few companies that do that.

Demetrios [00:45:18]: Off the top of my head, I think Surge AI does that, more specifically for the popular models that come out. But that's one thing it feels like could be done. Again, going back to the person who's just grabbing a model: it's a lot more work to say, all right, now I'm going to try to red team this model, and I'm also going to go read all these papers on what all these benchmarks mean and familiarize myself with them. You have to be very diligent. However, if that diligence keeps your model from saying some shit it shouldn't, or doing something it shouldn't when it's customer-facing, is it worth it? Again, it's a constant trade-off; that's really the TL;DR, looking at it like that.

Morgan McGuire [00:46:13]: I think you could solve a lot of the objections, because it's a reputational or credibility thing. If Kaggle did this, or if Hugging Face did this, I think people would have a bit more faith, maybe, in a black box. But agreed, ultimately the "evaluating together" story is much stronger, and if you pair that with a strong capability to generate synthetic data for that eval, specific to the customer, that feels pretty compelling. There's enough to worry about in improving your LLM system, never mind going off and learning how to generate good synthetic data for evaluation. Why not go to an external service, an expert, for that part, maybe?

Demetrios [00:47:02]: And the other piece on this: I was just reading a paper that was like a TL;DR of all the different ways to mitigate hallucinations that have come out so far. It has a whole taxonomy of hallucination mitigation techniques, and it feels like, wow, that's another thing that could be really cool: seeing how these different techniques work for your specific use case. And yeah, you can do it yourself. You can spend the time; there are something like 32 different techniques that they identify. So you could go and say, this one looks cool. But I guess it's more like throwing a dart at the wall and saying, all right, I like this name, let's try that technique and see if it mitigates the hallucinations a little bit more.

Demetrios [00:47:49]: And then who knows if it actually does? Maybe it does, maybe it doesn't, and you really have to put it into practice, so you may find out that it doesn't and you're screwed. There are things like that where it could be cool to have someone else do it for you. They give you a stamp of approval, and they also map it out for you, so it's not just the black box you were talking about, Anish.

Anish Shah [00:48:18]: I definitely think that hallucination piece is very compelling. Like you're alluding to, a lot of techniques will keep arising that either build on previous techniques or stay consistent across models. And I think a lot of the hallucination mitigation tactics will stand the test of time a little better: as new models come out, these techniques will still be useful. Earlier I mentioned the LM Evaluation Harness; I can imagine something like a hallucination mitigation harness, where you test all these techniques against either evaluation data (like evaluation data as a service) or some sort of synthetic data, and see how they line up. So I definitely think it's a super compelling use case. If no one has built it yet, they should go ahead and start building that type of thing.
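A "hallucination mitigation harness" along the lines Anish describes could start as a loop that runs each technique over the same evaluation set and ranks the results. This is a hypothetical sketch: techniques are modeled as plain question-to-answer callables (in practice each would wrap an LLM call configured with one mitigation technique), and the `judge` function (exact match in a toy setting, an LLM or human grader in practice) is an assumption.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str]           # (question, reference answer)
Technique = Callable[[str], str]    # question -> answer produced under one mitigation technique
Judge = Callable[[str, str], bool]  # (answer, reference) -> is the answer acceptable?


def run_harness(
    techniques: Dict[str, Technique],
    examples: List[Example],
    judge: Judge,
) -> Dict[str, float]:
    """Score every technique on the same examples; return scores sorted best-first."""
    if not examples:
        raise ValueError("need at least one evaluation example")
    scores = {}
    for name, answer_fn in techniques.items():
        correct = sum(judge(answer_fn(question), reference) for question, reference in examples)
        scores[name] = correct / len(examples)
    return dict(sorted(scores.items(), key=lambda item: item[1], reverse=True))
```

Because every technique sees the identical example list, score differences reflect the technique rather than the data, up to whatever noise the judge introduces.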

Demetrios [00:49:11]: There we go. That might be the one I just convinced myself to build. But it also feels like, with Weights & Biases and what you all are doing, there are ways to plug things like that in, right? Again, it's not really what you're trying to do, but you make it easy for customers to say, all right, cool, you want to try this hallucination mitigation technique? We've got you covered. It's not in our product, but we make it easy for you.

Morgan McGuire [00:49:49]: Yeah, 100%. We're just about to ship (maybe it'll be out by the time this gets published) an integration with EleutherAI's LM Evaluation Harness, which will let you push all those evaluation metrics into Weights & Biases. Not just into the workspace: it'll also generate a Weights & Biases report specific to that model, to make the results more presentable. Then you'll be able to add more evaluations to that report if you want. And the same goes for hallucinations, toxicity, PII detection, or any other metric you want to track: you can log those metrics into Weights & Biases, and you can add all your data, or a subsample of it, to inspect.

Morgan McGuire [00:50:45]: It could be the best examples, the worst examples. It's really powerful to have a reliable system of record for performance that you can dump whatever you want into, visualize nicely, and share. That's the other thing we see, especially in these cases. Say you're a developer on a team and you're trying to convince your manager or your CTO that you should do something fun with LLMs: you need to be able to convince them that it's performant and also safe. Having a place where you've stored all of this, not random spreadsheets or Jupyter notebooks you're flicking through, but nice, polished reports in Weights & Biases, makes their life a little easier too.
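Logging the best and worst examples for inspection starts with picking them. A small sketch, assuming each evaluated sample is a dict with a numeric `score` field (a hypothetical schema); the selected rows could then be logged, for example, as a `wandb.Table` via `wandb.log`, though the exact logging code depends on your setup.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def best_and_worst(rows: List[Row], key: str = "score", k: int = 3) -> Tuple[List[Row], List[Row]]:
    """Return the k highest-scoring rows (best first) and the k lowest-scoring rows (worst first)."""
    if k <= 0:
        return [], []
    ranked = sorted(rows, key=lambda row: row[key], reverse=True)
    best = ranked[:k]
    worst = ranked[-k:][::-1]  # lowest score first
    return best, worst
```

If there are fewer than `2 * k` rows, the two lists overlap, which is usually acceptable for a quick inspection view.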

Demetrios [00:51:35]: 100%. That's so cool to see. Well, fellas, I've taken up a ton of your time. This is awesome. I love seeing what you all are doing at Weights & Biases. Thanks for coming on here.

Morgan McGuire [00:51:48]: Thanks, Demetrios. Appreciate it.

Anish Shah [00:51:49]: Thanks for having us.