Streamlining Model Deployment // Daniel Lenton // AI in Production Talk

Speakers

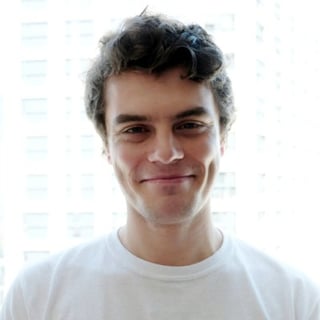

Daniel Lenton is the founder and CEO at Unify, on a mission to accelerate AI development and deployment by making AI deployment faster, cheaper and simpler. He is also a venture partner at Pioneer Fund, investing in top YC companies, with a focus on AI infra companies. Prior to founding Unify, Daniel was a PhD student in the Dyson Robotics Lab, where he conducted research at the intersection of Machine Learning, Computer Vision and Robotics. During this time, he also worked at Amazon on their drone delivery program. Prior to this, he completed his Masters in Mechanical Engineering at Imperial College, with Dean's list recognition.

At the moment Demetrios is immersing himself in Machine Learning by interviewing experts from around the world in the weekly MLOps.community meetups. Demetrios is constantly learning and engaging in new activities to get uncomfortable and learn from his mistakes. He tries to bring creativity into every aspect of his life, whether that be analyzing the best paths forward, overcoming obstacles, or building lego houses with his daughter.

SUMMARY

Ever since the release of ChatGPT, the AI landscape has changed drastically. In 2022, most “AI companies” were building general AI infrastructure. Now, most of these companies have pivoted and begun offering AI-as-a-service (AIaaS) products, mostly through public endpoints. Many new companies have also come onto the AI-as-a-service scene, leading to exponential growth in the market. With an overwhelming number of endpoints, optimisation tools and hardware, building an optimal deployment pipeline is getting increasingly difficult. Users now need to rely on unreliable and non-exhaustive benchmarks to gauge output quality, runtime, accuracy, and latency trade-offs to select the best option for any given application. In this talk, we will propose a way forward, through the use of open governance and open source runtime benchmarks, and we will outline the essential role of dynamic routing in this fast-moving landscape. We will conclude by discussing how Unify’s model hub combines both of these aspects to create a holistic user experience when deploying AI models, making them faster, cheaper and more accurate.

TRANSCRIPT

Streamlining Model Deployment

AI in Production

Slides: https://docs.google.com/presentation/d/1rbQF4M1Z3uSTewyk_YgAxWGLaviHVE_C/edit?usp=drive_link&ouid=103073328804852071493&rtpof=true&sd=true

Demetrios [00:00:05]: And we're gonna keep rocking with Dan. Where you at, bro?

Daniel Lenton [00:00:09]: Hey, how's it going? I'm calling from. Calling from London a little bit.

Demetrios [00:00:16]: It's a little late. Then I'm gonna let you get moving real quick. I see you shared your screen. I will get rid of that and let you start rocking and rolling.

Daniel Lenton [00:00:26]: Good. Well, yeah, really awesome to be here. Thanks a lot for the very kind introduction. Yeah. So my name is Daniel Lenton. I'm the CEO and founder at Unify. We're kind of playing around with the slogans a bit, but for this talk we're going with "all AI in one API", and I'll be talking about how we're trying to effectively unify the LLM endpoint landscape in a product we've released recently. So let me very quickly.

Daniel Lenton [00:00:55]: There we go. It says working. So the first thing to note is that basically what we've seen is that the number of endpoints has exploded a lot recently. So there were a lot of companies that were conventionally doing kind of pure infrastructure. There's obviously Anyscale and Ray, and there is OctoML, now OctoAI, and many, many companies. Together AI do a lot of compute as well. What we've seen recently is that there's a lot of different AI-as-a-service endpoints coming onto the market.

Daniel Lenton [00:01:22]: This can be existing models that are exposed as very efficient, very cost-effective endpoints to build kind of verticalized LLM applications. It can obviously be text-to-image models like Stable Diffusion, et cetera. But there's more and more of these coming onto the scene, not only with their own interesting, unique cost-performance profiles, but also with their own unique specialities. There were recently text-to-SQL models that are competitive with GPT-4, and of course Google releasing Gemini and Gemma onto the scene as well. So there's a lot of interesting stuff coming out all the time that is adding both to the performance profiles and also to the actual quality of these different endpoints. And basically, when you deploy these, there's such a maze. When you think about how you can deploy these, there's so many options, because with the whole gen AI boom, not only are there more endpoints, but there's lots more models. So there's Mixtral, there's Mamba.

Daniel Lenton [00:02:19]: There's all these new things coming out, all these interesting technologies. There's a whole load of serverless GPU options and a whole load of kind of orchestration technology going on. Lots of really interesting stuff, removing cold start times and things like this. There's a load of compression techniques coming out all the time, a load of compilers, there's XLA, TensorRT, OpenAI Triton, Mojo, kind of with its own MLIR dialect, and so on. And of course the hardware landscape as well. Nvidia remains in a very strong position, but there's great hardware from Intel with the Gaudis, there's AMD entering the scene, there's SambaNova Systems, Cerebras, et cetera. So, you know, all of these areas of the stack are seeing a lot of innovation, a lot of stuff going on, and the combinatorial search space across all of these is very vast. And that just means that this endpoint landscape is even more diverse because there's so many options to really squeeze the performance out of these models that are deployed.

Daniel Lenton [00:03:09]: And yeah, getting the best performance is very much a trade-off. So in some applications you want to have low cost, in others you want to have low latency or high accuracy or high throughput or low memory. And these things are all in direct competition. So you can get high throughput by just having a ton of idle devices, but that's then going to cost you a lot of money. You can have very low latency by doing heavy compression, but then you're going to sacrifice accuracy, et cetera. So these things are all in direct competition. And every application has its kind of optimal combination of these things, which is nontrivial to arrive at when you're looking at these solutions that are out there. And the other observation is that the current benchmarks, well, saying they're flawed is maybe slightly strong, but there's certainly issues to be resolved.

Daniel Lenton [00:03:53]: So as many people will know, the actual quality benchmarks in LLMs are sensitive to small differences. There's some really interesting work showing that if you kind of use capitalized A, B, C for the options, or non-capitalized, or one, two, three, it can change the rankings of these models. There's issues potentially with models being trained on the benchmark data, so there's contamination issues. A lot of the benchmarks are very narrowly scoped, which also means that there's the risk that models can somewhat overfit to these benchmarks, even if they've not actually been contaminated. So these are just some of the issues that exist with quality benchmarks. And the other issue, with kind of runtime benchmarks, is that these things are not static at all. So when you look at any of these endpoints, they are constantly changing through time, because of course, these are not static systems, they're dynamic. The performance you get out depends on the load balancing that's going on at that point in time, the amount of user traffic that's going on, of course, software and hardware updates behind the scenes of these black box endpoints, network speeds, geography and so on.

Daniel Lenton [00:04:58]: And these are incredibly dynamic systems, and I think we need to think about them as dynamic systems rather than kind of static leaderboards, particularly when it comes to runtime performance, which is not really an intrinsic property of the weights of the model, it is an intrinsic property of the dynamic system. So what I think is needed, and what we think is needed, is basically open source benchmarks which are target agnostic, so aren't specialized to any particular LLM, with an open governance approach where the data is kind of quite raw. So really focusing on the very bare-bones metrics such as time to first token, inter-token latency, et cetera, in a very user-centric way. So what is it that the end user would actually observe when they go and query these endpoints at any point in time? So this is what we think would be quite a useful addition to the runtime benchmarking landscape. So another thing that needs to be considered is you need to have a uniform tokenizer, because the tokenizer changes how many tokens any given sentence or word maps to. So when you do kind of tokens per second or cost per token, we need to be talking the same language. So you need a standardized tokenizer when you do any of these comparisons. You need diverse inputs, because obviously with things like speculative decoding, if you have very repetitive inputs and so on, then this can actually change performance.
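As a rough illustration of the user-centric metrics named here, the sketch below derives time to first token, inter-token latency and tokens per second from the wall-clock arrival times of streamed tokens. The function name `runtime_metrics` and the sample timestamps are assumptions made for this example, not part of any benchmark described in the talk, and the token count is assumed to come from one shared tokenizer so that numbers stay comparable across endpoints.

```python
from statistics import mean


def runtime_metrics(request_sent: float, token_times: list[float]) -> dict[str, float]:
    """Compute user-observed streaming metrics from timestamps in seconds.

    token_times holds the arrival time of each streamed token, counted with
    one shared tokenizer (an assumption of this sketch).
    """
    if not token_times:
        raise ValueError("no tokens received")
    # Time to first token: delay between sending the request and the first token.
    ttft = token_times[0] - request_sent
    # Inter-token latency: average gap between consecutive tokens.
    gaps = [later - earlier for earlier, later in zip(token_times, token_times[1:])]
    itl = mean(gaps) if gaps else 0.0
    # Throughput as the end user sees it, including the wait for the first token.
    total = token_times[-1] - request_sent
    return {
        "time_to_first_token": ttft,
        "inter_token_latency": itl,
        "tokens_per_second": len(token_times) / total,
    }


# Illustrative timestamps only: request at t=0, five tokens arriving afterwards.
metrics = runtime_metrics(0.0, [0.5, 0.6, 0.7, 0.8, 0.9])
print(metrics)
```

Measuring from the moment the request is sent, rather than from the first token, keeps the numbers close to what an end user would actually observe.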

Daniel Lenton [00:06:24]: You need varied regimes. So in terms of concurrency and in terms of geography and so on, because performance changes with all of these things, and also these metrics that everybody knows about, the time to first token, the inter-token latency, et cetera. So what we've tried to contribute, and hopefully is useful to people in the field, we've created ongoing, basically dynamic runtime dashboards where you can go onto the hub and you can see all of these performance metrics as they evolve through time. And what this really shows is that actually these things are incredibly non-static. If you take a look at this performance evolution across time, and this is going from kind of February 3 to February 17, so this is kind of a week or two, you can see that sometimes one is the best, sometimes another one is the best. And these things are really very dynamic through time, basically, is the main observation we have, which means that really a time series perspective is the correct one to have when you think about the runtime performance of these benchmarks, basically. And what most people do, I guess you could think of it as a static router. The typical approach is to basically work with one provider, work with one endpoint, and then stick with that.

Daniel Lenton [00:07:40]: And then things like latency and throughput vary across time. But that's okay, you're just sticking with one provider and all of your requests go to that single provider. One arguable downside of this is that basically these things change a lot. So sometimes the performance is very high, sometimes the performance is not so high, and it's great when it's high, but when it's not so high you're left waiting a bit longer. So you have a suboptimal overall solution. So what we think would be useful in this space is actually even just thinking about something as simple as runtime performance, without even getting into quality-based routing or anything yet. Just dynamically routing to the highest performance endpoint at any point in time can give significant speed-ups when you're working with these models. So the idea is if we can take the kind of top band and effectively do an argmax over the performance, then with this dynamic routing and single sign-on, you can get performance which is better than any individual provider and actually is kind of the argmax across all of the providers, which is very useful for end users that then just want to make sure at any point in time they're optimizing for their runtime performance, for example.
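The argmax idea can be sketched in a few lines. This is a minimal illustration rather than Unify's router: the function `route`, the placeholder provider names and the numbers are all assumptions made for the example. It picks the provider with the best latest measurement of one metric, taking the maximum for throughput-style metrics and the minimum for latency-style ones.

```python
def route(latest: dict[str, dict[str, float]], metric: str,
          higher_is_better: bool = True) -> str:
    """Return the provider with the best latest value of `metric`."""
    # Only consider providers that actually report this metric.
    candidates = {name: stats[metric] for name, stats in latest.items() if metric in stats}
    if not candidates:
        raise ValueError(f"no provider reports {metric!r}")
    pick = max if higher_is_better else min
    return pick(candidates, key=candidates.get)


# Illustrative snapshot of the latest benchmark results (made-up numbers).
latest = {
    "provider_a": {"tokens_per_second": 60.0, "time_to_first_token": 0.40},
    "provider_b": {"tokens_per_second": 95.0, "time_to_first_token": 0.55},
    "provider_c": {"tokens_per_second": 72.0, "time_to_first_token": 0.25},
}

fastest_stream = route(latest, "tokens_per_second")
fastest_start = route(latest, "time_to_first_token", higher_is_better=False)
print(fastest_stream, fastest_start)
```

Re-running the selection whenever a fresh snapshot arrives is what makes the routing dynamic; a static router would simply fix one provider name.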

Daniel Lenton [00:08:48]: So this is the insight we've had. So just to put this in a concrete format, again, with two concrete examples here, each endpoint fluctuates on any given day, and we've got Llama 2 70B chat here and Mixtral here as well. Again you see the performance varies quite a lot, and what effectively we're doing visually is taking this and just taking the argmax, and that actually improves the performance quite significantly. And with what we've seen so far on Code Llama 34B Instruct, Mixtral and Llama 2 70B chat, we see quite significant improvements when you take the argmax versus the second best individual provider, on the order of up to three times better, sometimes three or four times better, when you consider this unrolled over the space of a week or two. Because again, sometimes there are slight drops in performance. So this is relatively simple, but again, quite big performance gains to be made by a relatively simple approach. And again, basically this can be based on any metric.
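To make the time-series comparison concrete, the sketch below takes the per-timestep best value across providers and compares its average with each provider's own average. The two series are made-up numbers, not the measurements shown in the talk, and `dynamic_series` and `mean_throughput` are names chosen for this example. It also assumes the router always knows the current best provider, which a real system can only approximate from its latest benchmark.

```python
def mean_throughput(values: list[float]) -> float:
    """Average tokens per second over the observation window."""
    return sum(values) / len(values)


def dynamic_series(series: dict[str, list[float]]) -> list[float]:
    """Best throughput available at each timestep across all providers."""
    return [max(step) for step in zip(*series.values())]


# Illustrative tokens-per-second readings at four points in time (made up).
series = {
    "provider_a": [50.0, 80.0, 30.0, 70.0],
    "provider_b": [60.0, 40.0, 90.0, 65.0],
}

best_static = max(mean_throughput(values) for values in series.values())
dynamic = mean_throughput(dynamic_series(series))
print(f"best single provider: {best_static:.2f} tok/s, dynamic routing: {dynamic:.2f} tok/s")
```

Because the per-timestep maximum is never below any single provider's value, the dynamic average can only match or exceed the best static choice; how large the gap is depends entirely on how much the providers fluctuate.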

Daniel Lenton [00:10:00]: So you might want to optimize for time to first token, you might want to optimize for inter-token latency, et cetera. So it really depends what you want to optimize for, but you can choose whatever works best basically. So I will very quickly, with a very quick demo, show you what this looks like in action. So let me quickly share my screen. So basically what we have here, so let me quickly do this and quickly open up this as well. Sorry. So you can imagine that basically let's say we want to optimize for throughput in one case, and we want to optimize for time to first token in the other case. So bringing this up here, basically we can see that this is how you would do it.

Daniel Lenton [00:10:59]: So basically let me quickly do this broadcast all. So what I have is this simple agent class, and this is something that's in our docs, but just a very, very simple agent class that kind of connects to this centralized router. We can then do agent equals Agent and we specify what the provider is. So in this case we're going to do Llama 2 70B chat. So we put that in there and then we do at, and what we can do is actually select a mode which is not one provider. So one thing we can do is we can say at Anyscale, or we can say at Together AI or at Fireworks AI, et cetera. But what we can also do now is basically select a mode. So we can then do kind of at.

Daniel Lenton [00:11:52]: Let's do one that's lowest time to first token. So let's do that one for this. Oh, sorry, hang on, I need to undo this. So let's do broadcasting off. So let's do that again. So this is lowest time to first token. And then this one is actually going to be, let's say, highest tokens per second. Like so. Let's get that there.
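The "model at provider" versus "model at mode" convention described in the demo can be illustrated with a small parser. This is a hypothetical sketch, not the agent class from the Unify docs: the identifier strings, the mode names in `MODES`, and the `Target` and `parse_target` names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mode names; the real identifiers may differ.
MODES = {"lowest-ttft", "highest-tks-per-sec"}


@dataclass(frozen=True)
class Target:
    model: str
    provider: Optional[str]  # set when a fixed provider is requested
    mode: Optional[str]      # set when the router should choose dynamically


def parse_target(spec: str) -> Target:
    """Split 'model@suffix' into a fixed provider or a routing mode."""
    model, separator, suffix = spec.partition("@")
    if not separator or not model or not suffix:
        raise ValueError(f"expected 'model@provider-or-mode', got {spec!r}")
    if suffix in MODES:
        return Target(model=model, provider=None, mode=suffix)
    return Target(model=model, provider=suffix, mode=None)


fixed = parse_target("llama-2-70b-chat@together-ai")
routed = parse_target("llama-2-70b-chat@lowest-ttft")
print(fixed, routed)
```

Keeping the mode in the same slot as the provider name means client code does not change shape when switching from a fixed provider to dynamic routing.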

Daniel Lenton [00:12:19]: So again, we're not specifying one provider, we're actually specifying a mode which will then look at the latest results and act accordingly. So right now we are, let me quickly go back to the hub. So right now I'm calling from London. So what we would do is look at Llama 2 70B chat. The closest is Belgium. So that's probably a reasonable approximation. So then let's see what we expect to be the selected provider in terms of output tokens per second. Right now the highest performing is Together AI.

Daniel Lenton [00:12:49]: So I would expect that this one is likely to be Together AI. The one that's doing best with time to first token right now is OctoAI. So that would likely be the one that's chosen here. So let's now put this in action and see. So I'll quickly do broadcast all again. Let's do agent run. There's two options here you can see in the docs. One of them is to print the cost that's spent and one of them is to print the provider that's being used under the hood.

Daniel Lenton [00:13:13]: So let's run both the agents and then, I don't know, let's say there was one I did that's just like, because it shows the performance quite well. So: count from one to 100 using words, not numbers, using a new line per number. So what we would expect to see, and I mean this is a live demo so let's see. What we'd expect to see is that this one starts quicker, so the left starts quicker because we're trying to do time to first token, but the right one goes through the numbers more quickly, and then we'll get shown at the end, because we've set the logging to true, what the provider is that's being used under the hood, and I hope this works. If it doesn't, then I'll have to record. So they did start similarly actually in this case, but you can see the one on the right goes through way, way faster, and the time to first token is something that's quite noisy actually.

Daniel Lenton [00:14:17]: So yeah, Together AI was the one that was selected on the right because this is the one that has substantially the faster inter-token latency. And then the one on the left was OctoAI because again this is the one that was selected to have the best time to first token. And the benefit here is you can do the same with costs. So like for example, let's say that the costs change every few weeks or so and you don't want to keep up with this. So if you're doing a very input-cost-dominated task such as chat interfaces, where you keep sending the entire chat history as an input, you can just set this mode and just know that at any point in time you're always going to have the cheapest. If you have a very output-dominated task such as content generation, or you want it to make loads of marketing material for you, then you can select the lowest output cost. And again you can rest assured you will always have the cheapest among the providers. So even just very simple rules like this, this is quite a useful abstraction layer to make sure that you're getting the very best out of all these great providers with all these great different endpoints.
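The difference between input-dominated and output-dominated tasks can be shown with a small cost estimate. This sketch is a variation on the single-price modes described above: it estimates the total cost of a request for a given token mix and picks the cheapest provider. The prices, placeholder provider names and the names `Price`, `request_cost` and `cheapest` are assumptions for illustration only, not real provider pricing.

```python
from typing import NamedTuple


class Price(NamedTuple):
    input_per_million: float   # USD per million input tokens
    output_per_million: float  # USD per million output tokens


def request_cost(price: Price, input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in USD of one request with the given token counts."""
    return (price.input_per_million * input_tokens
            + price.output_per_million * output_tokens) / 1_000_000


def cheapest(prices: dict[str, Price], input_tokens: int, output_tokens: int) -> str:
    """Provider with the lowest estimated cost for this token mix."""
    return min(prices, key=lambda name: request_cost(prices[name], input_tokens, output_tokens))


# Made-up prices: provider_b is cheaper on input, provider_a is cheaper on output.
prices = {
    "provider_a": Price(input_per_million=0.90, output_per_million=0.90),
    "provider_b": Price(input_per_million=0.70, output_per_million=1.10),
}

chat_choice = cheapest(prices, input_tokens=8000, output_tokens=200)      # long history, short reply
content_choice = cheapest(prices, input_tokens=200, output_tokens=4000)  # short prompt, long output
print(chat_choice, content_choice)
```

With these numbers the chat-style request goes to the provider with the lower input price and the content-generation request to the one with the lower output price, which matches the intuition behind selecting on input cost or output cost.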

Daniel Lenton [00:15:21]: So that was the end of the very quick demo. I know we're kind of quite tight on time, so I'll fly through the last few slides.

Demetrios [00:15:30]: Yeah.

Daniel Lenton [00:15:33]: Basically. And I'll fly through these. So future directions: we're looking now at doing, as well as this, quality-based routing. So we've shown here simple runtime-based routing based on simple handwritten rules, but actually doing a learned system that can route to the best model for any given prompt. For example, you can imagine "what is the capital of Egypt". This doesn't need to be answered by GPT-4. Llama 7B can answer this totally fine, so we can save a lot of time and money by routing it to that accordingly. Similarly, if it's SQL text, maybe we send it to one of the fine-tuned SQL models that are actually better than GPT-4, et cetera.

Daniel Lenton [00:16:11]: So quality-based per-prompt routing is another area that we're looking into. And then you can train this, for example with human evaluation, much like the way that Chatbot Arena is trained. You can also do this with LLM oracles to help learn the routing function as well, something that we're working on at the moment. And we also see multimodal routing as being an interesting potential future direction, similar to what you get with GPT five and so on, where it can do things with images and audio and so on. Actually, if we see the endpoint landscape expanding and lots of very good niche models appear across modalities, then I think routing across modalities as well to get the very best for every request is a really interesting area for future work as well. And again, basically it's all based on the OpenAI standard. So basically you just send your HTTP request with the exact same content to our URL with your API key, and then we can kind of route it to the most appropriate one behind the scenes. And again, you can find all this in our model hub basically, but I'll try not to go on too long.
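An OpenAI-style chat completion request has a well-known shape: a JSON body with `model` and `messages`, sent by POST with a bearer token. The sketch below only builds such a request and does not send it. The base URL `https://router.example.com/v1`, the placeholder key and the model string are hypothetical stand-ins, since the talk does not spell out the actual endpoint.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical endpoint, key and model identifier, for illustration only.
request = build_chat_request(
    base_url="https://router.example.com/v1",
    api_key="YOUR_API_KEY",
    model="llama-2-70b-chat@lowest-ttft",
    prompt="Count from one to ten using words.",
)
print(request.full_url, request.get_method())
```

Because the request body is unchanged from the OpenAI format, switching an existing client over to a router of this kind would in principle only involve changing the base URL, the key and the model string.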

Daniel Lenton [00:17:16]: So that was just a very quick demo, very quick overview, but yeah, thank you very much for listening and very happy to answer any questions. Thank you very much.

Demetrios [00:17:23]: Dude, very cool. Thank you for showing us that. I have one thing that I want to ask you right away because I've played around with another playground type solution, and I've noticed that depending on the endpoint provider, the model is or the output is higher or lower quality. So what's weird to me is that I'll have the same model selected across a few different endpoint providers and the output will be drastically different.

Daniel Lenton [00:18:03]: Yeah. So this is one thing that we are working on as well. So as you say, the prompt-based routing between providers will be strongest when the model is different entirely. But for things like quantization, there are some endpoint providers that are focusing on improving the speed through things like compression, which of course does change the quality. Now, it's very hard, obviously, with these being random, to know whether that's down to the temperature and the randomness or down to the actual difference in the model. But one thing that is very much needed in our benchmarking and everything is quality-based benchmarking. So we intend to not only plot the performance across time, but actually the quality across time. Of course that's quite hard because there's a lot of noise, but that's something that I think is doable, because then if one provider decides to compress their model, that should also emerge in the time series data as well. So it's very much on the radar and we're thinking about it.

Daniel Lenton [00:18:59]: But it's very hard. Runtime is relatively objective, so there's not too much to dispute, whereas quality is a whole other game altogether. So we're thinking very carefully about how we do that.

Demetrios [00:19:08]: That's the only thing I would caution people against. So I love Together, I love their product and what they're doing. And Jamie, one of the founders of it, I've talked with a few times. But the Mistral model on Together, it's so fast, and really, like, for some reason I can't get it to give me a good answer. And then you go to the Mistral API itself and I'm pretty sure they're doing some special sauce behind the scenes to make their model much better. And so if you're just looking, yeah, yeah, I swear, man, they're making their stuff. Like, if you get it from them, it's really good. And they say it's just like the normal model.

Daniel Lenton [00:19:58]: Well, hopefully this is the kind of thing that would become emergent through the kind of through-time benchmarking. But again, it's so hard to do benchmarking reliably because you need a lot of data to do it. It's something we're thinking carefully about, but I would love it if we could have that kind of presented in this way. And also, I'm very much talking with the founders of these companies, and they're all doing really good work. They're, broadly speaking, very much on board with open benchmarks. Obviously there's LLMPerf from Anyscale. That's a great initiative. There's initiatives from Fireworks as well, they have one, and Martian router have one.

Daniel Lenton [00:20:38]: So I think there is a good joint initiative to try to get objectivity here, which is great to see.

Demetrios [00:20:44]: Yeah, it is super cool. It's super cool to see. I really appreciate what you've built and what you're doing. I will let you go, man. I don't know if I see, some people are just mentioning, this was awesome, great live demo. And others are saying like, yeah, for my company, accuracy of output is more important than money.

Daniel Lenton [00:21:07]: That's good to know. Yeah, we want user feedback, so if there's some feedback in the comments, that's perfect. We'll make sure to get that added.

Demetrios [00:21:13]: That's it. There you go. So if anybody wants to go and check it out and get in touch with Dan, hit him up. That is perfect. So, Dan, we're going to keep on rocking. Thanks so much. Yeah, get some sleep, man. I know it's late where you're at.

Demetrios [00:21:28]: I appreciate you doing this all late. That's very kind of you.

Daniel Lenton [00:21:31]: Thank you very much.