Mem0: Building Production-Ready AI Agents with Scalable Long-Term Memory // May 2025 Reading Group

SPEAKERS

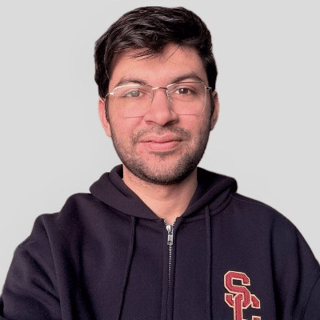

Prateek Chhikara is a Founding AI Engineer at Mem0 and a co-author of the paper.

Arthur Coleman is the CEO of Online Matters. He has previously held three roles, including VP of Product and Analytics at 4INFO.

Hey! I’m Nehil Jain, an Applied AI Consultant in the SF area. I specialize in enhancing business performance with AI/ML applications. With a solid background in AI engineering and experience at QuantumBlack, McKinsey, and Super.com, I transform complex business challenges into practical, scalable AI solutions. I focus on GenAI, MLOps, and modern data platforms. I lead projects that not only scale operations but also reduce costs and improve decision-making. I stay updated with the latest in machine learning and data engineering to develop effective, business-aligned tech solutions. Whether it’s improving customer experiences, streamlining operations, or driving AI innovation, my goal is to deliver tangible, impactful value. Interested in leveraging your data as a key asset? Let’s chat.

David DeStefano has joined Adonis as a Senior Machine Learning Engineer in the United States, previously serving as Lead Engineer for ML/AI Platform & Infrastructure at EvolutionIQ.

SUMMARY

Memory is one of the thorniest challenges in deploying LLMs. Mem0 introduces a scalable long-term memory architecture that dynamically extracts, consolidates, and retrieves key information from conversations. Its graph-based variant, Mem0g, models relationships between conversational elements. Together, they make AI agents more accurate, coherent, and production-ready.

TRANSCRIPT

Arthur Coleman [00:00:00]: Good evening, wherever you are in the world. We are now recording, just so everybody knows. Thank you for starting that, Pinoy. I'm really excited this morning. I read this paper as I prepped last week and learned a tremendous amount, because I also read the prior papers on earlier approaches, and this is a very cool and important development in our industry for agent memory. We are really proud to have Prateek here, the founding AI engineer at Mem0, and I'll tell you a little more about him in a minute. Let me just remind everyone of the guiding principles.

Arthur Coleman [00:00:46]: These are your sessions, okay? They're intended to be interactive. I'm going to keep working on this until we get lots and lots of questions from the audience, because that's the best way to learn, especially when we have an amazing engineer here who built this technology. He owns the end-to-end stack, from embedding-store optimizations and RL-driven update loops to production deployments. If you ever wanted to ask a question of someone who really knows this technology, he is right in front of you at this moment. So this is a no-judgment zone. I mean this sincerely. There's no such thing as a dumb question, as I always like to tell people.

Arthur Coleman [00:01:25]: I'm usually the guy asking the dumb question, so there are absolutely no dumb questions here. Put your questions in the chat; I'll get set up for them in a minute, and I will pick them up and curate them. If you want to ask a question directly or start a discussion, please raise your hand in Zoom and I'll call on people as I see them. So with that, let me talk about our speaker. As I mentioned, he is the founding engineer of Mem0.

Arthur Coleman [00:01:56]: He owns the total end-to-end stack. He's had an illustrious career. Before Mem0, he went to the USC Information Sciences Institute, where he got his master's in, let's see, I have to look at this one, computer vision and scalable AI infrastructure. There we go. During his graduate research he authored papers on multimodal LLMs and reinforcement learning agents, with publications at ICLR and NeurIPS. I would love to get a paper of mine published at NeurIPS.

Arthur Coleman [00:02:30]: By the way, I love that conference. He has also published at ACL, and he received a best paper accolade at RCAP. Before this he was at housing.com and proptiger.com, where he achieved high accuracy in anomaly detection and launched real-time recommendation systems. So he has background in just about every aspect of AI you can think of. With that, I'm going to stop sharing and turn it over. Whoever has the next set of slides, go ahead. Welcome, Prateek. Looking forward to hearing what you have to say.

Prateek Chhikara [00:03:09]: Thank you. So should I start the presentation? Yes, sure, go for it. I just want to say one quick thing while you load it up, Prateek. Last month we did the A-MEM paper. For those of you who didn't join: A-MEM is another technique that tries to solve the same problem, namely how you give memory to long-running conversations in agents. You will see very similar ways of doing evals, and the LOCOMO dataset shows up again, since that's what most people are evaluating against. So this is yet another SOTA paper on how to do memory, and the amazing thing is that we have Prateek here with us, so we can actually ask questions and understand it better than by figuring it out ourselves. Yeah, sure.

Prateek Chhikara [00:04:09]: Hello everyone. In today's session I will present the paper that my team and I worked on. I'm from Mem0, a YC startup. The title of the paper is "Mem0: Building Production-Ready AI Agents with Scalable Long-Term Memory." Let's start with the motivation. Right now we have a wide range of LLMs, from small models like Llama 3B to trillion-parameter LLMs, and these LLMs excel at short-term coherence.

Prateek Chhikara [00:04:55]: If you ask them an open-ended question, they can go to a web page and fetch the answer for you. These LLMs have also learned a lot during pre-training, so you can ask almost anything and they will return a correct response based on their knowledge. However, they forget across sessions because of fixed context windows. For example, on the left side, the user says, "I'm vegetarian and I avoid dairy. Any dinner ideas?" The agent says, "Try these dairy-free veggie recipes." Suppose the session ends, and the next day the user goes back to the same agent and asks, "I want to eat something, what do you recommend?" The agent might say, "How about chicken Alfredo?" This causes user frustration, because the agent has forgotten that the user is vegetarian and wants dairy-free food. If the AI agent had memory, it could personalize and recommend recipes based on its prior interactions with the user. So in the left scenario, there is user frustration.

Prateek Chhikara [00:06:40]: The user has to repeat themselves again and again, mentioning that they want vegetarian recipes, and so on. This is a very small example, but in real-world dialogue systems a conversation can span a few days, weeks, or even months. Full-context approaches don't help much there, because if you try to ingest the whole conversation into the context window, two things happen. First, you will exceed the context window of the LLM. Second, since you're feeding everything into the context, cost and latency go up, because for LLMs both grow with the number of input tokens. For example, in the chart on the right, as you increase the number of tokens fed to the LLM, latency also increases. With just 8,000 tokens, the search latency is already more than 4. Sorry, there's an error here.

Prateek Chhikara [00:07:58]: The unit should be seconds, so that's 4 seconds. If you go beyond 200k tokens, latency can reach several minutes. So we don't want an LLM or memory mechanism whose latency depends on the length of the conversation; whatever the input size, latency should stay roughly constant. So let's move to the problem. Our goal is to enable AI agents to maintain, retrieve, and update information from a conversation that may extend over several months or more. Current LLMs and memory approaches face these challenges. The first one is context overflow.

Prateek Chhikara [00:08:50]: Even though Claude and other models now have context windows of 200k tokens or more, that won't solve the memory problem. It only delays it rather than addressing the fundamental limitation of extended conversations. Second, if you add everything to the context window, attention to the important information in the conversation gets diluted. For example, suppose today I talk about my dietary preferences, what I like to eat; for the next week I discuss my coding preferences and other things; and after a month I ask what I like to eat. If you feed everything into the context, attention degrades because there's a lot of noise, so the answer to "what do I like to eat?" will be affected by that noise and won't be great. Third, some information needs to be updated over time.

Prateek Chhikara [00:10:08]: For example, today I say I like pizza, and a month later I say I don't like pizza anymore. The memory needs state management; otherwise the output will be wrong. Memory is useful across many use cases, and at Mem0 we provide memory to a diverse set of them. I've listed four here. The first is personalized learning: an AI tutor with memory can track a student's progress and see which concepts need revision and which areas the student doesn't need to work on.

Prateek Chhikara [00:11:02]: The second is healthcare support. Personalized healthcare depends not only on your current symptoms but also on your full medical history, including your family history, so memory helps there too. Third, obviously, customer support. If you contact customer support today, give your order number, and ask why you're facing an issue, then once the session closes you have to provide everything again next time. So memory is essential for customer support, and similarly for financial advisory. Now let me explain how we at Mem0 solve this memory problem.

Prateek Chhikara [00:11:56]: That is, how memory can be implemented to solve the long or extended context window problem. This is our main architecture diagram, so let's start here. Suppose we have a new exchange, either between two users or between a user and an agent. We pass these two messages to the extraction phase, which uses three inputs. The first is the summary: a summary of whatever has been discussed so far between the two users or between the user and the agent.

Prateek Chhikara [00:12:35]: The second is the last m messages; here we use m = 10, so we fetch the last 10 messages from the conversation history. The third is the new messages themselves. The summary is generated asynchronously in the background by an LLM we call the summary generator, and the last m messages are also stored in the database. We pass all three to the LLM, which generates new extracted memories from the new exchange. Then we pass those to the update phase.

Prateek Chhikara [00:13:28]: Uses semantic similarity as well as relevancy to fetch similar memories based on the new extracted memories. Suppose we have two new extracted memories from here. And for each memory we fetch similar memories. So suppose we Fetch like S is 5. For each of these two new memories we extract 5. So we will have 10 new memories. So sorry, so we will have 10 memories from the existing DB. And then we ask the LLM, like these are the new memories, these are the existing memories.

Prateek Chhikara [00:14:06]: Perform these action like whether to add new memories, whether to update some existing memories or delete existing memory or perform no operation to the existing memories. So this thing is handled by the LLM. And then we will have the new memories and which we will store it to the db. So in this entire architecture we perform one LLM call here and one here. And also in the summary generator, which is async. So overall there are three LLM calls in this diagram, right? So this is the base memory architecture. So we enhance this architecture with graph memory. So we call it as mem mem0g.

Prateek Chhikara [00:14:59]: Mem0g consists of the original Mem0 architecture plus the graph architecture I'll describe now. In Mem0g we again take an input conversation, the latest two messages, and pass it to an entity extractor. Suppose the input message is "Alice likes pizza." The entity extractor pulls the entities out of the text, here Alice and pizza, and these become our nodes. We pass these nodes, along with the original input text, to the relation generator, which tries to generate relationships between the nodes. That gives us triplets like (Alice, likes, pizza). This is the extraction phase.
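The two-stage graph extraction (entities first, then relations between them) can be expressed as a function that takes the two steps as parameters. In Mem0g both steps are LLM calls; here they are replaced by deliberately naive, hypothetical stand-ins (`toy_entity_extractor`, `toy_relation_generator`) so that the sketch runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Triplet:
    source: str
    relation: str
    destination: str


def extract_triplets(text: str,
                     entity_extractor: Callable[[str], List[str]],
                     relation_generator: Callable[[str, List[str]], List[Triplet]]) -> List[Triplet]:
    """Two-stage extraction: find entities (nodes), then relations (edges) between them."""
    entities = entity_extractor(text)
    if len(entities) < 2:
        return []  # a relation needs at least two nodes
    return relation_generator(text, entities)


# Toy stand-ins for the two LLM calls, for illustration only.
KNOWN_ENTITIES = {"alice", "bob", "pizza", "python"}


def toy_entity_extractor(text: str) -> List[str]:
    return [w for w in text.replace(".", "").split() if w.lower() in KNOWN_ENTITIES]


def toy_relation_generator(text: str, entities: List[str]) -> List[Triplet]:
    words = text.replace(".", "").split()
    triplets = []
    for a, b in zip(entities, entities[1:]):
        i, j = words.index(a), words.index(b)
        relation = "_".join(words[i + 1:j]).lower()  # words between the two entities
        if relation:
            triplets.append(Triplet(a, relation, b))
    return triplets


print(extract_triplets("Alice likes pizza.", toy_entity_extractor, toy_relation_generator))
```

Passing the extractors in as callables keeps the pipeline shape independent of which model (or toy rule) fills each step.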

Prateek Chhikara [00:16:01]: So from the input we get triplets, and then comes the update phase, which also has two steps. The first is the conflict detector, and there are a few important things about it. First, it checks whether the nodes in each triplet already exist in the current graph. If Alice and pizza are the nodes, it checks whether Alice is in the graph and whether pizza is in the graph. If they are not, new nodes are created.

Prateek Chhikara [00:16:42]: If they are present, the ID of the existing node is returned. This ensures we don't end up with duplicate nodes in the graph. So the conflict detector checks whether the two nodes exist and adds them if they don't. In addition, for the two nodes being added, we look up the edges that already exist between them and fetch those.

Prateek Chhikara [00:17:28]: Suppose the existing graph contains (Alice, loves, pizza) and the new triplet is (Alice, likes, pizza). The source and destination nodes are the same; only the relationship differs, "loves" versus "likes," and both convey the same thing. So in the update resolver we use an LLM: we pass it the node pairs with their relationships and ask it to remove redundant information or combine the information between the two nodes. That is the update resolver's job.
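The conflict detector's node de-duplication and the update resolver's edge consolidation can be sketched with a small in-memory graph. Assumptions: nodes are matched by normalized name (a production system might instead match by embedding similarity), and the LLM-based update resolver is replaced by a hypothetical `toy_resolver` that treats a hard-coded set of verbs as synonyms. None of these names are Mem0's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A resolver receives the relations already stored between two nodes plus the new one,
# and returns the consolidated list of relations to keep.
Resolver = Callable[[List[str], str], List[str]]


@dataclass
class GraphStore:
    nodes: Dict[str, int] = field(default_factory=dict)                    # name -> node id
    edges: Dict[Tuple[int, int], List[str]] = field(default_factory=dict)  # (src, dst) -> relations

    def get_or_create_node(self, name: str) -> int:
        """Return the existing node id, or create a node; avoids duplicate nodes."""
        key = name.strip().lower()
        if key not in self.nodes:
            self.nodes[key] = len(self.nodes)
        return self.nodes[key]

    def add_triplet(self, source: str, relation: str, destination: str, resolver: Resolver) -> None:
        src = self.get_or_create_node(source)
        dst = self.get_or_create_node(destination)
        existing = self.edges.get((src, dst), [])  # edges already between this pair
        self.edges[(src, dst)] = resolver(existing, relation)


SYNONYM_GROUPS = [{"likes", "loves", "enjoys"}]


def toy_resolver(existing: List[str], new_relation: str) -> List[str]:
    """Stand-in for the LLM update resolver: drop a relation that repeats an existing one."""
    def same_meaning(a: str, b: str) -> bool:
        return a == b or any(a in g and b in g for g in SYNONYM_GROUPS)
    if any(same_meaning(r, new_relation) for r in existing):
        return existing
    return existing + [new_relation]


graph = GraphStore()
graph.add_triplet("Alice", "loves", "pizza", toy_resolver)
graph.add_triplet("alice", "likes", "Pizza", toy_resolver)  # redundant: same pair, same meaning
print(graph.nodes, graph.edges)
```

Making the resolver a parameter mirrors the split in the talk: the graph bookkeeping is deterministic, while the judgment about which relations are redundant is delegated (to an LLM in Mem0g).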

Prateek Chhikara [00:18:09]: Once we consolidate the information between two nodes, we add them back to the graph. That is how Mem0g works. For the experiments we used the LOCOMO benchmark dataset. It consists of 10 multi-session conversations, each around 26,000 tokens long on average, with about 200 questions per conversation. We selected LOCOMO because it has diverse question types: single-hop, multi-hop, temporal, and open-domain. Other datasets mostly focus on single-hop questions.

Prateek Chhikara [00:18:57]: So we refrained from using those datasets. We also wanted to compare memory approaches across these question types, because a particular memory mechanism could perform well on single-hop questions but very badly on temporal ones. For example, if you pass everything to the context window, single-hop performance will be good for full context, whereas RAG performs poorly on multi-hop questions. That's why we chose LOCOMO and compared models across question types. We evaluated the memory approaches on two kinds of metrics.

Prateek Chhikara [00:19:48]: The first is quality: how good the memory is. We measured it using the questions asked at the end of each conversation, computing the F1 score, the BLEU score, and an LLM-as-a-judge score. Second, since one of our motivations was to see how memory can be used in production-scale environments, we also measured token consumption as well as search and total latency for all the approaches we compared. We selected several baselines, including RAG, full context, OpenAI's memory, and A-MEM, and this is one sample from the LOCOMO dataset. First, let's look at the quality comparison of all the methods: F1, BLEU, and LLM-as-a-judge scores across the four question categories.
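Of the three quality metrics, token-level F1 is the simplest to reproduce. A minimal sketch, assuming SQuAD-style normalization (lowercasing, stripping punctuation and English articles); the exact normalization used in the paper may differ, and BLEU and the LLM-as-a-judge score are not shown.

```python
import re
import string
from collections import Counter
from typing import List


def normalize(text: str) -> List[str]:
    """Lowercase, drop punctuation and English articles, split on whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def token_f1(prediction: str, ground_truth: str) -> float:
    """Harmonic mean of token precision and recall, counting repeated tokens."""
    pred, gold = normalize(prediction), normalize(ground_truth)
    overlap = sum((Counter(pred) & Counter(gold)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


print(token_f1("The user is vegetarian", "vegetarian"))
```

Token F1 rewards lexical overlap only, which is one reason an LLM-as-a-judge score is reported alongside it: a correct answer phrased differently from the reference can still score low.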

Prateek Chhikara [00:21:00]: Here you can see that Mem0 performs better than the other methods, and Mem0g performs better on open-domain and temporal questions, whereas on single-hop and multi-hop questions the base Mem0 variant performs better than Mem0g. This is a comparison of Mem0 in terms of latency and token count, including against RAG. If you increase the chunk size in RAG, total latency increases, and if you increase the number of retrieved chunks, it increases a lot. With 2 chunks of 8,192 tokens each, total latency can reach 10 seconds. For base Mem0, total latency is around 1.5 seconds, and the number of tokens is also lower, around 1,800. This doesn't vary much with the size of the conversation, because at retrieval time we fetch only the top-k relevant memories for a given query or question.

Prateek Chhikara [00:22:41]: So this doesn't vary much. For full context, however, it depends on the length of the conversation. In the LOCOMO dataset the average length is about 26,000 tokens; for a conversation of more than 100k tokens, the token count grows accordingly, which raises both LLM cost (since cost depends on input size) and latency. That's one merit of Mem0: it doesn't depend on the input size. We also compared against OpenAI's memory. Here you can see its score is about 53%, while Mem0 scores more than 66%. Search latency is the time taken to fetch the memories or chunks.

Prateek Chhikara [00:23:45]: Total latency also includes the time the LLM takes to read the retrieved chunks or memories and generate the answer. So those are search and total. Now the key takeaways. The Mem0 architecture offers human-like persistent memory, dynamically extracting and aggregating only the important facts. If Mem0 is used in an agentic framework, the agent won't forget user preferences and can give personalized results. We also have superior performance: Mem0 does better than RAG and beats existing memory solutions, both open-source ones like LangMem and other dedicated memory solutions. And there are dramatic cost and speed gains: we reduce token count by 90% relative to full context, and we reduce latency in the retrieval phase by 91%.

Prateek Chhikara [00:24:57]: Full context takes about 17 seconds, while base Mem0 takes about 1.4 seconds. That's it from my side. We also have an open-source variant of Mem0; you can scan this QR code and try it. There's the open-source variant and a platform variant. The platform variant is managed, and we regularly push updates there. But if you want to play with Mem0, you can check our GitHub repository and start using it. So yeah, that's it from my side.
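The efficiency claims can be sanity-checked with simple arithmetic using the rounded figures quoted in the talk: about 17 s versus 1.4 s for latency, and an average LOCOMO conversation of about 26,000 tokens versus roughly 1,800 retrieved-memory tokens. These inputs are the talk's approximate numbers, not new measurements.

```python
def relative_reduction(baseline: float, new: float) -> float:
    """Fraction by which `new` is smaller than `baseline`."""
    return (baseline - new) / baseline


latency_cut = relative_reduction(17.0, 1.4)    # seconds: full context vs base Mem0
token_cut = relative_reduction(26_000, 1_800)  # tokens: avg LOCOMO conversation vs retrieved memories
print(f"latency reduction: {latency_cut:.1%}")  # latency reduction: 91.8%
print(f"token reduction:   {token_cut:.1%}")    # token reduction:   93.1%
```

The rounded inputs give about 92% and 93%, in the same range as the 91% and 90% reductions quoted; small differences are expected since the inputs are rounded.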

Prateek Chhikara [00:25:40]: If you have any questions, go ahead and ask.

Arthur Coleman [00:25:43]: Thank you, Prateek. Can you give me the screen back?

Prateek Chhikara [00:25:46]: Yes.

Arthur Coleman [00:25:50]: Okay. Oops. Let me blow this up. Actually, no, because I need to work on it while we're talking. Okay, tell me when you can see my screen. All right, let me get this over here. We had loads of questions, so rather than go to the floor right away, I'm going to start with those.

Arthur Coleman [00:26:13]: Most of the questions are right here; let me highlight them. The colors are just for me to track questions, so don't mind them. They're all about memory, so let's talk about memory. How do you handle versioning of memory, especially when the agent's behavior or model evolves?

Prateek Chhikara [00:26:32]: As I mentioned, the base Mem0 variant has an update phase built on a tool-call architecture. Whenever new information conflicts with what's already in the database, that conflict-resolution step handles it. It's based on an LLM, which decides whether a new fact should be added or should replace existing facts or memories in the database. That's where versioning is handled.

Arthur Coleman [00:27:14]: Any follow-up questions from folks on that? I can't see everybody's hands. Nehil, can you see people's hands? I can only see some of the people. If anyone has their hand raised, let me know.

Prateek Chhikara [00:27:28]: Yeah, I can also only see the four of us, but we can continue.

Arthur Coleman [00:27:34]: Okay, let's go to the next question. I have to bounce between windows to see it. What's the cost of keeping contextual memory? I'm not sure exactly what's meant, so whoever asked that question, if you want to follow up, that'd be great. Let me see, that was Trisha Agarwal. Trisha, anything you want to add?

Trisa Agarwal [00:28:00]: I was asking more about the architecture. I specifically didn't understand the conflict part he was explaining, so I couldn't ask more about it at that point. What I was asking is what the architecture costs. I'd expect the cost to be higher with versioning, since you have to save every version. That's why I asked whether he's keeping contextual memory.

Prateek Chhikara [00:28:34]: Okay, to answer one of your questions: with the base Mem0 variant the token count is roughly 2,000, and if you switch to the graph variant it roughly doubles. Also, we measure tokens and latency at retrieval time. When a user sends a query to the agent, we measure how long the agent takes to answer it and how many tokens it uses to do so. We don't compare at add time, because adding can be async; it's up to you whether to put the add step on the critical path of your application. Suppose a conversation is happening between two entities: you can take the new messages and do an async add, whereas the search is what needs to be on the critical path.

Prateek Chhikara [00:29:55]: You need the search to provide a personalized answer, so the output of the search is what's essential, not the add. Does that answer your question?
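The add/search split described above maps naturally onto async code: schedule the add as a background task and await only the search. A minimal sketch with Python's `asyncio`, assuming a hypothetical `InMemoryStore` with naive keyword matching in place of a real memory backend; it is not the mem0 client.

```python
import asyncio
from typing import List, Set


class InMemoryStore:
    """Hypothetical stand-in for a memory backend; not the mem0 client."""

    def __init__(self) -> None:
        self.memories: List[str] = []

    async def add(self, messages: List[str]) -> None:
        await asyncio.sleep(0.05)  # simulate the slow extraction/update LLM calls
        self.memories.extend(messages)

    async def search(self, query: str) -> List[str]:
        words = set(query.lower().split())  # naive keyword match instead of vector search
        return [m for m in self.memories if words & set(m.lower().split())]


async def handle_turn(store: InMemoryStore, new_messages: List[str], query: str,
                      background: Set[asyncio.Task]) -> List[str]:
    # Off the critical path: schedule the write and keep a reference so the task isn't lost.
    task = asyncio.create_task(store.add(new_messages))
    background.add(task)
    task.add_done_callback(background.discard)
    # On the critical path: the answer depends on search results, so await them now.
    return await store.search(query)


async def main():
    store = InMemoryStore()
    store.memories.append("user is vegetarian")
    background: Set[asyncio.Task] = set()
    hits = await handle_turn(store, ["user avoids dairy"], "is the user vegetarian", background)
    await asyncio.gather(*background)  # drain pending writes before exiting
    return hits, store.memories


hits, memories = asyncio.run(main())
print(hits)  # the new write is not yet visible to the search issued in the same turn
```

One consequence of this design: a write scheduled in the same turn may not be visible to that turn's search, which is usually acceptable when the latest messages are already in the model's context.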

Trisa Agarwal [00:30:09]: Yes, so you're saying you keep the more essential part on the critical path. But how exactly are you managing the cost of searching and querying it back?

Prateek Chhikara [00:30:22]: Can you elaborate? What do you mean by managing the cost?

Trisa Agarwal [00:30:29]: I mean, when a user is actually doing a search, and there are a lot of users using your application, how are you minimizing the computation cost?

Prateek Chhikara [00:30:42]: As I said, the base Mem0 architecture uses two LLM calls, one in the extraction phase and one in the update phase, plus one in the summary generator, which is async, so we can set that aside for this question. If you want to reduce latency or cost, you can use a different LLM. For our experiments we used GPT-4o mini; a small model like that reduces cost and response time, but you take a performance hit. GPT-4o mini can do well in the extraction phase, but the update phase requires reasoning between the existing and the new memories.

Prateek Chhikara [00:31:39]: So GPT-4o mini won't perform as well there. In our internal experiments we switched from GPT-4o mini to o4-mini and saw a large performance improvement, because o4-mini is a reasoning model, and reasoning is essential in the update phase, where the LLM has to reason between the newly extracted memories and the existing ones. You can use any LLM with the Mem0 architecture, and performance will depend on how well that particular LLM does, especially in the update phase.
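The trade-off described above, a cheaper model where the task is simple and a reasoning model where it isn't, can be captured as a per-phase model setting. A hypothetical sketch: the `PhaseModels` class is not Mem0's configuration format, and pairing GPT-4o mini for extraction with o4-mini for updates is one reading of the talk rather than a documented recommendation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhaseModels:
    """Hypothetical per-phase model choice; not Mem0's actual configuration format."""
    extraction: str = "gpt-4o-mini"  # cheaper model: pulling facts out of new messages
    update: str = "o4-mini"          # reasoning model: comparing new vs existing memories
    summary: str = "gpt-4o-mini"     # runs asynchronously, off the critical path

    def model_for(self, phase: str) -> str:
        if phase not in ("extraction", "update", "summary"):
            raise ValueError(f"unknown phase: {phase}")
        return getattr(self, phase)


models = PhaseModels()
print(models.model_for("update"))  # o4-mini
```

Keeping the choice per phase means the more expensive reasoning model is paid for only in the step where the talk reports it matters.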

Trisa Agarwal [00:32:21]: Okay, thank you.

Khal [00:32:29]: Arthur is on mute.

David DeStefano [00:32:39]: I think one question ties in well with what Trish was asking.

Arthur Coleman [00:32:44]: Sorry, I had this weird pointer thing and couldn't turn it off. I think you may have partly answered these two follow-on questions, but since they're related I'd like to continue down that path, and then whoever was speaking can ask their question. If I have to fine-tune the quality of memory, what knobs can I turn? And what trade-offs do you encounter, for example between retrieval speed and memory depth? I think you answered that part pretty well, but if there's anything more you want to add, go ahead.

Prateek Chhikara [00:33:26]: At Mem0 we provide memory to several use cases, and we can't expect the prompts we have for our LLM to perform well across all of them. So we have fine-tuned LLMs in-house, each fine-tuned on a specific use case. Suppose there's a healthcare use case: we fine-tune our LLM for that use case, and performance improves. Earlier we tried using an off-the-shelf LLM across all use cases, but we couldn't get as much performance. When we fine-tuned the LLM on a specific use case, we saw improvements in both accuracy and latency.

Trisa Agarwal [00:34:30]: Sorry to interrupt, but where do you get the dataset from for doing the contextual updates for the LLMs?

Prateek Chhikara [00:34:40]: Sure. Suppose there's a healthcare use case: we understand what kind of conversations will happen, and then we use an LLM to generate a synthetic dataset. We prompt bigger LLMs, giving them the use case and some few-shot examples, and ask them to generate similar examples. This way we generate a wide range of samples for that use case. Then we randomly sample about 10% of the dataset and manually evaluate whether the data makes sense and whether the ground-truth memories generated by the LLM make sense. That's how we generate the dataset for fine-tuning.

Prateek Chhikara [00:35:28]: It's very easy to scale up the number of training samples, because you just send the same prompt to the LLM, vary the temperature, and sample as much training data as you need.
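The data-generation loop described above can be sketched as follows, assuming a hypothetical `generate(prompt, temperature)` callable that wraps a larger LLM. The prompt wording and temperature values are illustrative assumptions; the 10% review fraction follows the talk.

```python
import random
from typing import Callable, List, Sequence


def build_generation_prompt(use_case: str, few_shot: Sequence[str]) -> str:
    """Prompt a larger LLM with the use case and few-shot examples."""
    examples = "\n\n".join(few_shot)
    return (f"Use case: {use_case}\n"
            f"Example conversations with their ground-truth memories:\n{examples}\n\n"
            "Write one new, different example in the same format.")


def generate_dataset(generate: Callable[[str, float], str], use_case: str,
                     few_shot: Sequence[str], n: int,
                     temperatures: Sequence[float] = (0.7, 0.9, 1.1)) -> List[str]:
    """Send the same prompt n times, cycling through temperatures for variety."""
    prompt = build_generation_prompt(use_case, few_shot)
    return [generate(prompt, temperatures[i % len(temperatures)]) for i in range(n)]


def sample_for_review(dataset: List[str], fraction: float = 0.1, seed: int = 0) -> List[str]:
    """Randomly select about `fraction` of the samples for manual review."""
    if not dataset:
        return []
    k = max(1, round(len(dataset) * fraction))
    return random.Random(seed).sample(dataset, k)


def fake_llm(prompt: str, temperature: float) -> str:
    """Stand-in for a call to a larger LLM."""
    return f"synthetic example (T={temperature})"


data = generate_dataset(fake_llm, "healthcare", ["<example 1>", "<example 2>"], n=50)
review = sample_for_review(data)
print(len(data), len(review))  # 50 5
```

A fixed seed makes the review sample reproducible, so the same subset can be re-checked after prompt changes.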

Trisa Agarwal [00:35:46]: Thank you.

Arthur Coleman [00:35:48]: All right, so someone was asking a question. I interrupted you. Whoever that was, feel free to go ahead and ask your question. No, okay.

Prateek Chhikara [00:36:03]: Sorry.

Arthur Coleman [00:36:03]: I have a question.

Prateek Chhikara [00:36:04]: On graph representation: I have two questions regarding the memory. One is about the facts you mentioned, and the other is about the graph. Both are very domain-specific. From day zero, when a user starts using the memory feature, what's the developer experience? Are the facts and the graph learned automatically, or does the developer need to put in a lot of effort to define them? Okay, so the LOCOMO dataset and the other datasets we have are mostly companion-style datasets, in which two users talk about their day-to-day life events. In that scenario our vanilla base prompts work pretty well. But in a domain-specific use case like healthcare or an AI tutor, the conversation changes: the agent and the user don't talk about day-to-day life but about a particular niche area. In the AI tutor use case, the student asks the AI tutor which concepts they need to work on and which they're already good at. In that scenario the normal companion use-case prompts won't work. So our platform variant offers custom instructions, where we ask the company several questions. Suppose I want to use Mem0: I sign in, and there are certain questions.

Prateek Chhikara [00:38:05]: The first one is the type of use case: healthcare, finance, education, or personal. Then we ask what kind of memories you want. Some people want very disjoint memories, and some want a summary-style memory of the entire conversation. We also ask what kinds of things you do not want captured in memory. For example, in healthcare you do not want your SSN or other personal information to be captured. From this information we create customized prompts for the user, and we use them internally in our application. So based on the inputs, we control which kinds of memories get captured, matching what the user wants.

Arthur Coleman [00:39:10]: Prateek, can I ask a quick follow-up? How are you determining what is PII? This is really important for me in the ad tech space, for example. That's really cool. That was nowhere in the paper.

Prateek Chhikara [00:39:22]: Yeah, the paper is mostly about the LoCoMo dataset. But if you have PII data, we ask users to provide includes or excludes prompts in natural language on our platform. Based on those, we prompt our LLM not to fetch certain information, or to fetch specific information. For example, take a customer support use case where the customer wants each memory to store the order ID, right? If you use the normal companion use-case prompt, it might skip the order ID. But if the user specifically wants to store the order ID, they can mention it in the includes, and we will extract the order ID and store it in the memory.
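The onboarding answers and includes/excludes lists described above could feed a prompt template along these lines. This is a minimal sketch under assumptions: the CustomInstructions fields and build_extraction_prompt are hypothetical names, and the prompt wording is illustrative rather than the prompt Mem0 actually uses.

```python
from dataclasses import dataclass, field

@dataclass
class CustomInstructions:
    """Hypothetical container for the onboarding answers described in the talk."""
    use_case: str                                       # e.g. "healthcare", "education"
    memory_style: str = "discrete"                      # "discrete" facts or "summary"
    includes: list[str] = field(default_factory=list)   # details that must be kept
    excludes: list[str] = field(default_factory=list)   # details that must never be stored

def build_extraction_prompt(ci: CustomInstructions) -> str:
    """Render the answers as an extraction prompt (wording is illustrative only)."""
    lines = [f"You extract memories from a {ci.use_case} conversation."]
    if ci.memory_style == "summary":
        lines.append("Return one concise summary of the conversation.")
    else:
        lines.append("Return short, independent facts.")
    if ci.includes:
        lines.append("Always capture: " + ", ".join(ci.includes) + ".")
    if ci.excludes:
        lines.append("Never store: " + ", ".join(ci.excludes) + ".")
    return "\n".join(lines)

if __name__ == "__main__":
    support = CustomInstructions(use_case="customer support",
                                 includes=["order ID"],
                                 excludes=["SSN", "payment card numbers"])
    print(build_extraction_prompt(support))
```

Keeping the answers in a structured object rather than free text makes it easy to regenerate the prompt whenever a customer edits their includes or excludes.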

Arthur Coleman [00:40:13]: Interesting.

Prateek Chhikara [00:40:14]: It mostly depends on the use case. We provide the functionality and give the user the liberty to decide what memory means to them, and we fetch only that for them.

Arthur Coleman [00:40:27]: And I'm going to ask you more about that offline. So let me go to the questions. I don't see any hands raised; Anthony took his hand down. I want to ask this one because it came out of the paper we read last week, and I really want to hear your answer, because this is a really interesting quote, if you can see it here. I want to read it in full. While graph databases provide structured organization for memory systems, their reliance on predefined schemas and relationships fundamentally limits their adaptability.

Arthur Coleman [00:40:59]: This limitation manifests clearly in practical scenarios: when an agent learns a novel mathematical solution, current systems can only categorize and link this information within their preset framework, unable to forge innovative connections or develop new organizational patterns as knowledge evolves. What's your answer to that critique? This is core to your technology, and they're basically saying it is a very limited approach: there are limits at which you will run into problems trying to build new parts of the graph dynamically.

Prateek Chhikara [00:41:32]: Yeah. This is the point highlighted in the A-MEM paper; I read their paper as well. They mostly critique traditional graph DB memories, right? Traditional graph memories rely on predefined schemas: which attributes you want to capture and store in the graph, and predefined relationships as well. That limits their adaptability.

Prateek Chhikara [00:42:04]: So new, innovative connections or conflict resolution will not be there in traditional graph databases, right? But in the Mem0 graph variant we addressed all of these issues. First, entity extraction and triplet generation are LLM-driven. We are not dependent on any rule-based methods; we ask the LLM to generate them, and there is no hardwired schema like the one in traditional graph databases. The conflict resolution we have also uses an LLM: it checks whether the new triplets should be added to the graph or not, and whether there is a contradiction between the nodes or between the relationships.
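To make the conflict check concrete, here is a toy resolver that decides whether a new triplet is added, ignored as a duplicate, or replaces a contradicting one. Per the talk, Mem0 makes this judgment with an LLM; here a hard-coded set of single-valued relations (a hypothetical simplification) stands in for it, and outdated triplets are simply deleted.

```python
Triplet = tuple[str, str, str]  # (source, relation, destination)

# Hypothetical simplification: relations where a subject has only one current value.
SINGLE_VALUED = {"lives_in", "works_at"}

def resolve(graph: set[Triplet], new: Triplet) -> str:
    """Rule-based stand-in for an LLM conflict check; mutates graph, returns the operation."""
    if new in graph:
        return "NOOP"  # already known
    src, rel, _ = new
    if rel in SINGLE_VALUED:
        stale = {t for t in graph if t[0] == src and t[1] == rel}
        if stale:
            graph.difference_update(stale)  # remove the contradicted, outdated triplets
            graph.add(new)
            return "UPDATE"
    graph.add(new)
    return "ADD"

if __name__ == "__main__":
    g: set[Triplet] = set()
    for t in [("Alice", "lives_in", "Boston"), ("Alice", "likes", "pizza"),
              ("Alice", "lives_in", "San Francisco")]:
        print(t, resolve(g, t))
```

An LLM can recognize contradictions that no fixed rule anticipates, which is the adaptability argument being made here; the rule only shows where that decision sits in the update loop.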

Prateek Chhikara [00:43:00]: That is handled by the LLM itself, which resolves the conflict. Through that process we always have an updated graph based on the conversation, and unnecessary or outdated information is removed from the graph. Also, the nodes and relationships we create are mostly based on a similarity threshold. For example, two words can be very similar to each other, so instead of having two distinct nodes, you can merge them into one node. This helps maintain the semantic relevance of the triplets. Let me give one example: Alice likes pizza.

Prateek Chhikara [00:43:56]: Alice loves pizza. Instead of having two edges between these two nodes, one for likes and one for loves, you can combine them into one edge. Another example: "I live in San Francisco" and "I live in SF." SF and San Francisco mean the same thing, so we combine the nodes in that scenario. A-MEM highlighted that traditional graph DBs rely on predefined schemas and relationships. So we address that.
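The node and edge merging described above can be sketched with cosine similarity and a threshold. Assumptions: the 4-dimensional TOY_VECTORS are invented so that "SF" sits close to "San Francisco" and "loves" close to "likes"; a real system would use an embedding model, and the 0.9 threshold is arbitrary. MergingGraph is a hypothetical name.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Invented 4-d "embeddings" chosen so that SF ~ San Francisco and loves ~ likes.
TOY_VECTORS = {
    "Alice": [1.0, 0.0, 0.0, 0.0],
    "pizza": [0.0, 1.0, 0.0, 0.0],
    "San Francisco": [0.0, 0.0, 1.0, 0.0],
    "SF": [0.0, 0.05, 0.99, 0.0],
    "likes": [0.0, 0.0, 0.0, 1.0],
    "loves": [0.1, 0.0, 0.0, 0.99],
    "lives_in": [0.6, 0.0, 0.6, 0.5],
}

class MergingGraph:
    """Toy graph that reuses an existing node or relation label when a new one is similar enough."""

    def __init__(self, embed, threshold: float = 0.9):
        self.embed = embed            # callable mapping a label to a vector
        self.threshold = threshold
        self.nodes: list[str] = []
        self.relations: list[str] = []
        self.edges: set[tuple[str, str, str]] = set()

    def _canonical(self, label: str, pool: list[str]) -> str:
        """Return the closest existing label if it clears the threshold, else register label."""
        best, best_score = None, -1.0
        for existing in pool:
            score = cosine(self.embed(label), self.embed(existing))
            if score > best_score:
                best, best_score = existing, score
        if best is not None and best_score >= self.threshold:
            return best
        pool.append(label)
        return label

    def add(self, src: str, rel: str, dst: str) -> tuple[str, str, str]:
        """Insert an edge after mapping each label onto its canonical form."""
        edge = (self._canonical(src, self.nodes),
                self._canonical(rel, self.relations),
                self._canonical(dst, self.nodes))
        self.edges.add(edge)  # a set, so merged duplicates collapse into one edge
        return edge

if __name__ == "__main__":
    g = MergingGraph(TOY_VECTORS.__getitem__)
    print(g.add("Alice", "likes", "pizza"))
    print(g.add("Alice", "loves", "pizza"))              # reuses the "likes" edge
    print(g.add("Alice", "lives_in", "SF"))
    print(g.add("Alice", "lives_in", "San Francisco"))   # maps onto the existing "SF" node
```

Whichever surface form arrives first becomes the canonical label, so the threshold trades off missed merges (too high) against wrongly fused entities (too low).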

Prateek Chhikara [00:44:32]: We handle that using LLMs, and we maintain the updated state in the graph, so the graph is generated on the fly.

Arthur Coleman [00:44:43]: Sorry. Yes, you have a question?

Trisa Agarwal [00:44:46]: Yeah, I have a question. If I want to integrate this with my LLM to make it contextual, how do I do it? I'm building an agent, a customer support agent, and I want a mechanism where I can store memory. What is the easiest way to integrate it with my existing code?

Prateek Chhikara [00:45:13]: Okay. At Mem0 we provide the integration in about three to five lines of code. You just initialize our memory client, just like you do for OpenAI. Then we provide two endpoints: add and search. Whenever a conversation is happening, you take the new exchange of messages and pass it to the add call, and we internally extract the memories and store them. Whenever there is a query that can be answered from previous exchanges, you call search with the query, and memories are returned based on the semantic meaning of your query. We also offer some additional features in search. If you want the returned memories re-ranked, you can pass a rerank parameter, so the most relevant memories come up first.

Prateek Chhikara [00:46:21]: And if you want memory filtering to increase the precision of your memories, you can pass filter_memories=True in the search call. Everything is in our docs. We provide a wide range of functionality for both the add and the search calls.
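The add/search flow described above can be mimicked in-process to see its shape. ToyMemory is a hypothetical stand-in, not the Mem0 SDK: "extraction" keeps user turns verbatim, keyword overlap replaces semantic (embedding) search, and min_score only loosely imitates a precision filter. For the real client and its rerank and filter_memories options, consult the Mem0 documentation.

```python
import re
from dataclasses import dataclass, field

def _tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

@dataclass
class ToyMemory:
    """Hypothetical in-process memory layer with an add/search shape (not the Mem0 SDK)."""
    store: dict[str, list[str]] = field(default_factory=dict)

    def add(self, messages: list[dict], user_id: str) -> list[str]:
        """'Extract' memories from a new exchange: here, just keep the user's turns."""
        facts = [m["content"] for m in messages if m["role"] == "user"]
        self.store.setdefault(user_id, []).extend(facts)
        return facts

    def search(self, query: str, user_id: str, top_k: int = 3,
               min_score: float = 0.0) -> list[str]:
        """Rank a user's memories by query-token overlap, drop weak matches, return top_k."""
        q = _tokens(query)
        scored = [(len(q & _tokens(m)) / max(len(q), 1), m)
                  for m in self.store.get(user_id, [])]
        scored.sort(key=lambda pair: pair[0], reverse=True)  # sort is stable for ties
        return [m for score, m in scored if score >= min_score][:top_k]

if __name__ == "__main__":
    memory = ToyMemory()
    memory.add([{"role": "user", "content": "My order 1234 arrived damaged"},
                {"role": "assistant", "content": "Sorry to hear that!"}], user_id="u1")
    print(memory.search("which order arrived damaged?", user_id="u1"))
```

Scoping every call by user_id keeps one user's memories out of another user's search results, which matters for a multi-tenant support agent.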

Trisa Agarwal [00:46:41]: I just want to know one simple thing: is it paid or free? Is there a free version available to try?

Prateek Chhikara [00:46:48]: Yeah, so we have free version. You can try it.

Trisa Agarwal [00:46:52]: Okay.

David DeStefano [00:46:53]: Yeah.

Arthur Coleman [00:46:54]: I think we just answered the "how many API requests" question, so I can let that go. I want to come down to one here because... Oh, Khal, you have a question. I'm sorry, there's a question from the floor. Khal, go ahead.

Khal [00:47:12]: Sure, thanks. First of all, great presentation. I really love this. I'm curious how you extract nodes from these conversations. As you just said, which is great, you don't have a rule-based approach like traditional graph methods would, but you still extract nodes using an LLM with a prompt, and that prompt carries some preconceived notions about which nodes are worth storing, right? So I'm curious how you choose what kinds of nodes are extracted, and how that is informed by context.

Prateek Chhikara [00:47:56]: Got it. We do not recommend the graph part of Mem0 to all of our users; it varies from use case to use case. In the paper's experiments, too, you can see that graph memory does not outperform the base variant on every question type. It depends on the use case and the question type. Suppose there is a medical support use case. There, the user will mostly talk about their preferences and their medical history, so the graph would not be that helpful.

Prateek Chhikara [00:48:35]: But in a personal AI companion use case, two users are talking, and they talk about their friends, their family, their interests, and a lot of other things. There, graph memory is really helpful, because you can build a social graph from the memories and the conversation. We also have internal prompts that vary from use case to use case, and we also take prompts from the customer. As I mentioned, we have includes, excludes, and the use-case parameter as inputs, where the user defines what kinds of memories they want, or what memory means to them. Based on that, we extract the nodes as well as the natural-language memories.

Khal [00:49:30]: Okay, awesome. So the graph-based approach is more powerful when you have a clearly defined use case rather than an open-ended conversation. One more question, if I can: is it possible to inject memories in batches? Say I have a pre-designed database with a lot of user-specific information that I'd like to form the foundation of this. Is it possible to inject that, or can this only be built up from conversational context?

Prateek Chhikara [00:50:00]: Yeah, we have a parameter, infer=False, with which you just pass the input and we store it as is, without running any inference on it. Suppose you already have some memories or input text that you want stored as-is in the form of memories. You can pass them in the add call with the infer flag set to False, and we will store them as they are.
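A local sketch of the difference between inferred and as-is storage, as it might look when seeding memory from an existing database. The toy_extract heuristic (keep first-person sentences) is a hypothetical placeholder for LLM extraction; the infer keyword mirrors the flag named in the talk, but add_memory and bulk_import are illustrative helpers, not Mem0 functions.

```python
import re

def toy_extract(text: str) -> list[str]:
    """Hypothetical placeholder for LLM extraction: keep first-person sentences only."""
    sentences = [s.strip() for s in re.split(r"[.!?]", text) if s.strip()]
    return [s for s in sentences if s.split()[0].lower() in {"i", "my"}]

def add_memory(store: list[str], text: str, infer: bool = True) -> list[str]:
    """Store extracted facts when infer is True, or the raw text unchanged when False."""
    new = toy_extract(text) if infer else [text]
    store.extend(new)
    return new

def bulk_import(store: list[str], records: list[str]) -> int:
    """Seed memory from pre-existing records, bypassing extraction entirely."""
    for record in records:
        add_memory(store, record, infer=False)
    return len(records)

if __name__ == "__main__":
    memories: list[str] = []
    bulk_import(memories, ["Plan: premium", "Region: EU"])
    add_memory(memories, "Hi there. I am allergic to peanuts.")
    print(memories)
```

Skipping inference for records that are already clean facts avoids an LLM call per record and guarantees they are stored verbatim.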

Khal [00:50:32]: Okay, awesome. Thanks a lot.

Arthur Coleman [00:50:35]: This is a big topic. Any follow-on questions from the floor on this, for your particular implementations?

David DeStefano [00:50:44]: I'm curious how you think about using the graph store to complement the Mem0 piece.

Arthur Coleman [00:50:50]: Like.

David DeStefano [00:50:50]: So if you're storing... For example, could I take the metadata, like the categories you'd set when using Mem0 to retrieve relevant facts or memories, and use the graph output to help? Some of those nodes, as entities, could map to those categories to improve the response to that query. Because it seems like today, when you use it, you're always starting with the base Mem0, and if you apply the graph store you're going to get both responses.

David DeStefano [00:51:26]: It kind of comes as a pair, or just the single one, rather than using them to complement each other. Is that a pattern you've seen people applying?

Prateek Chhikara [00:51:34]: Yeah.

Arthur Coleman [00:51:37]: You've played with this tech, right, David? As a host, you were doing stuff.

David DeStefano [00:51:41]: Yeah, I jumped around the open source as much as I possibly could, and it was super interesting to see. But I also read the paper, so I'm just trying to see how you were thinking about the architecture and the use cases, and how both could be applied either independently or together.

Prateek Chhikara [00:52:00]: Yeah. Whenever you want to generate graph memories, you pass enable_graph=True in the add call. If you do that, we generate both the natural-language memories and the graph memories, right? During search, you have the option to fetch only the semantic memories or the graph memories as well. In that case, too, you pass enable_graph=True.

Prateek Chhikara [00:52:35]: Then we return both the semantic memories and the graph memories, right? Some cases, like companion use cases, have a social network between users: Alice is a friend of Bob, and Bob has these hobbies, right? If there is a question like "What is the relationship between Alice and Bob?", it may not be directly answerable from the semantic memories. The social graph helps in such scenarios, so fetching the graph memories during search would help. Most of our customers use Mem0 as a memory layer: when a query comes in, instead of passing it straight to the LLM, they pass it to Mem0 first and fetch the memories. Then they send their question and the memories to the LLM, and the final response is generated and returned to the end user.

Prateek Chhikara [00:53:41]: If you use only the semantic memories, you might reach, say, X percent accuracy. But if your use case requires social knowledge about the participants in the conversation, passing the graph memories, the graph triplets, into the final LLM call can improve performance to, say, X plus some delta. In that scenario the accuracy will increase.
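The memory-layer pattern described here (retrieve first, then call the LLM with the question plus memories, optionally including graph triplets) can be sketched as below. retrieve_semantic, retrieve_graph, and llm are hypothetical callables supplied by the caller, and the prompt layout is an assumption; the enable_graph argument only echoes the flag mentioned in the talk.

```python
from typing import Callable, Optional

Triplet = tuple[str, str, str]

def build_answer_prompt(question: str, memories: list[str],
                        triplets: Optional[list[Triplet]] = None) -> str:
    """Assemble the final LLM prompt from retrieved context (layout is illustrative)."""
    parts = ["Relevant memories:"]
    if memories:
        parts.extend(f"- {m}" for m in memories)
    else:
        parts.append("- (none)")
    if triplets:
        parts.append("Known relationships:")
        parts.extend(f"- {s} --{r}--> {d}" for s, r, d in triplets)
    parts.append(f"Question: {question}")
    return "\n".join(parts)

def answer(question: str,
           retrieve_semantic: Callable[[str], list[str]],
           retrieve_graph: Callable[[str], list[Triplet]],
           llm: Callable[[str], str],
           enable_graph: bool = False) -> str:
    """Memory-layer flow: retrieve first, optionally add graph triplets, then call the LLM."""
    memories = retrieve_semantic(question)
    triplets = retrieve_graph(question) if enable_graph else None  # graph search costs latency
    return llm(build_answer_prompt(question, memories, triplets))
```

Passing an echo function as llm shows exactly what context reaches the model, which is a quick way to check whether the graph triplets are adding anything for a given question.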

David DeStefano [00:54:14]: Okay.

Prateek Chhikara [00:54:14]: Obviously there will be an increase in latency, because graph search or traversal is expensive. So we recommend that customers use the graph if it helps their use case and latency is not a major factor. Most of our customers want retrieval in under 100 to 200 milliseconds, so in that scenario we ask them to use the base variant. But if they can wait around one second for the response to be generated, we recommend the graph, provided it is helping their use case.

David DeStefano [00:54:56]: Gotcha.

Prateek Chhikara [00:54:57]: Critique.

Arthur Coleman [00:54:58]: Oh, sorry, go ahead, David. I didn't mean to interrupt you.

David DeStefano [00:55:01]: No, you're fine. I was just going to ask one more question about Mem0, and maybe the difference between MemoryClient, which I think is more enterprise focused, and Memory, which is more the open-source focus. In fact generation, the extraction piece for facts, I know your prompt formula takes the summary of the conversation history to date, the most recent message pair (the user plus the assistant message), and the past 10 messages. I think Nehil asked a really good question: which of those things can I tune, or do you suggest not tuning them? For example, with the past 10 messages, you keep a running history of the last 10. Is that something that could be increased or decreased? I'll stop there.
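The extraction context described in this question (running summary, last m messages, and the newest user/assistant pair) can be assembled with a bounded deque. This is a hypothetical sketch: the class name and prompt text are invented, and updating the summary itself, which would need a separate LLM call, is left out. The default m = 10 matches the figure discussed in this exchange.

```python
from collections import deque

class ExtractionContext:
    """Hypothetical helper: running summary + last m messages + newest exchange."""

    def __init__(self, m: int = 10):
        self.summary = ""               # would be refreshed by a separate LLM call (not shown)
        self.recent = deque(maxlen=m)   # oldest messages drop off automatically

    def build_prompt(self, user_msg: str, assistant_msg: str) -> str:
        """Build the extraction prompt, then push the new pair into the rolling window."""
        history = "\n".join(f"{role}: {text}" for role, text in self.recent)
        prompt = (
            f"Conversation summary:\n{self.summary or '(empty)'}\n\n"
            f"Recent messages:\n{history or '(none)'}\n\n"
            f"New exchange:\nuser: {user_msg}\nassistant: {assistant_msg}\n\n"
            "Extract the salient facts from the new exchange."
        )
        self.recent.append(("user", user_msg))
        self.recent.append(("assistant", assistant_msg))
        return prompt
```

Making m a constructor argument is what turns "the past 10 messages" into a tunable knob; a larger window gives the extractor more context at the cost of a longer prompt per call.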

Arthur Coleman [00:55:53]: Prateek, before you answer David, I should have done this sooner. Everybody, I want to find out how many people have actually used Mem0. Please just raise your hand on screen, like you have a question, and I'm going to count, because it would be very interesting for Prateek to know how many people on this call have actually touched the technology. Sorry, Prateek, go ahead and answer David's question.

Prateek Chhikara [00:56:15]: Yeah. In the OSS variant we do not provide much liberty; you have to go into the code itself and modify it. We have not released any endpoint through which you can customize the prompt, so you have to clone the repo or create a fork and make the modifications. There are certain hyperparameters you mentioned, like the number of past messages used and how detailed the summary should be. Internally, on the platform version, we did some ablation studies varying these hyperparameters and the summary quality. We have an internal eval setup, and from those internal experiments we came up with m = 10 and the summary prompt as well, right? And for the LoCoMo experiments, we did not modify our existing pipeline.

Prateek Chhikara [00:57:21]: We used the same Mem0 pipeline that we use for all of our customers, without trimming or modifying it to perform well on this LoCoMo use case.

Arthur Coleman [00:57:35]: I'm going to interrupt us because we are at time. We have had so many questions; I'm so thrilled, folks. The last thing: nobody raised their hand except Nehil and David. So folks, we'd love to see you play with it. And Prateek, thank you so much for coming and talking. Your paper was eminently readable. I might add: that graph discussion should go into an updated version, because I think it's important. Other than that, we'll pick up next month with the next topic.

Arthur Coleman [00:58:05]: Everybody, thank you for coming. This session is recorded if you want to review it. Have a great day and a great week. Take care.

David DeStefano [00:58:13]: Thanks, Prateek.

Prateek Chhikara [00:58:14]: Thank you everyone. Thank you. Thank you guys. Bye.