Blueprints for the Agentic Era: Inside the Revolution Transforming Enterprise AI

Tags: AI Agents, Open Source, API

Real-world insights from CrewAI, LlamaIndex, and Lambda on bringing agents to production

May 13, 2025

Robert Schwentker

At Microsoft Reactor in San Francisco, nearly 200 builders and thinkers gathered for a master class on AI agents. They came not to marvel at flashy demos but to see the blueprint of a quiet revolution already rewriting the rules of enterprise computing. This wasn't a typical AI showcase of theoretical promises. It was a frontline report from João (Joe) Moura of CrewAI, Jerry Liu of LlamaIndex, and Lambda's engineering team on how agents are moving from technical curiosity to mission-critical infrastructure.

Beyond Buzzwords: The Quiet Production Revolution

"60 million agent executions in March alone," announced João Moura, who recently relocated to the Bay Area from Brazil with his wife, five-month-old child, and two dogs. The CEO of CrewAI stood before a packed room, dispelling any notion that agents remain hypothetical constructs. "Honestly, we talk about agents as this thing that is just starting, that's gonna happen at some point. But what you're seeing is companies running this pretty consistently and getting a lot of value."

Companies including PwC, Havas, and numerous others under NDAs are already deploying production-grade systems. When an audience member probed which industries were leading adoption, Moura's answer defied conventional wisdom:

"It's very much spread across many different horizontals. I was expecting everyone would do customer support or everyone would do marketing – things that don't necessarily require a very high bar of precision. But what we're seeing is everyone's trying a little bit of everything."

This horizontal expansion spans financial services, healthcare, telecommunications, and even consumer packaged goods. When an attendee from the CPG sector asked about potential use cases, Moura responded: "If you're selling some product in Whole Foods, you've got invoices that could be optimized. You have distributor processes, logistics processes – everything could use agents for some sort of optimization."

The Enterprise Maturity Curve: Faster Than Anyone Predicted

Moura outlined a multi-level enterprise adoption trajectory that is moving faster than most predicted. Among the stages he described:

- Discovery Stage: Companies running experiments with frameworks and LLMs

- Agentic Native: Organizations planning deployments of hundreds or thousands of agents

- Enterprise Suite: Building infrastructure for management, security, and governance

"We're talking about how 2024, we thought, would be all about experimentation and pilots. But you're seeing most companies getting to production already. I think 2025 is going to be level three to level five – that's moving a little faster than what most would expect."

The strategic inflection point came into focus when Moura addressed scaling challenges:

"You realize that no framework is gonna save you. Even if you use CrewAI – let's say you use the best framework out there – that's going to be a thousand projects like the one you just ran in GitHub. Is that how you're going to go into this new agentic way?"

This realization pushed CrewAI beyond open-source tools toward enterprise infrastructure centered on interoperability, which Moura called "the inconvenient truth for building."

"I want to use any data management I want. I want to use any LLM I want. I want to use any orchestration framework, any memory, any authentication, any connector, any agentic apps," he emphasized, comparing the current moment to early cloud adoption where vendor lock-in created long-term business constraints.

From Document Processing to Dynamic Decision-Making

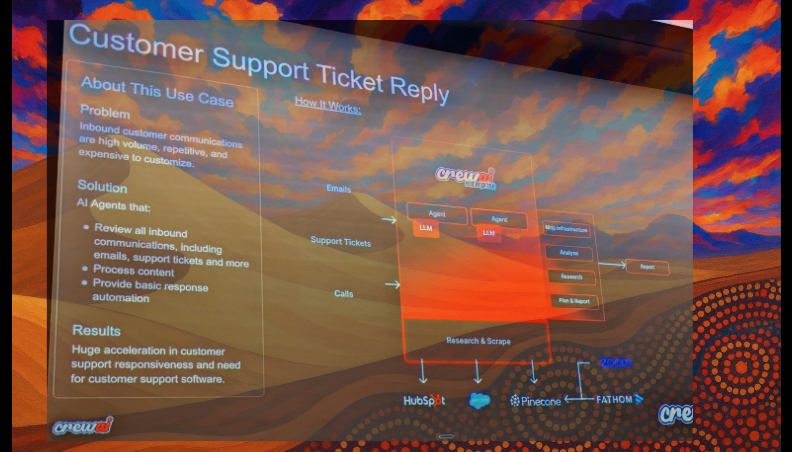

The practical applications showcased weren't incremental improvements but workflow transformations. Moura demonstrated an agentic OCR system that transcends traditional document processing:

"Many companies pay huge amounts for industries to do OCR, to convert paper into digital information. It stops there. But now with agents, you can go way beyond. You extract better information using multimodal, then classify it by querying databases using historical data, then route it, prioritize it, and even take action. How many hours might this save for a company in banking, health, or insurance?"
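The extract, classify, route, prioritize, and act chain Moura described can be sketched as a plain pipeline. This is a minimal stdlib sketch, not CrewAI code: `extract` is a stub standing in for a multimodal model, and the document types, routing table, and priority thresholds are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical routing table: document type -> owning team.
ROUTES = {"invoice": "accounts_payable", "claim": "claims_review"}

@dataclass
class Document:
    raw_text: str
    fields: dict = field(default_factory=dict)
    doc_type: str = "unknown"
    queue: str = "manual_triage"
    priority: int = 3  # 1 = most urgent

def extract(doc: Document) -> Document:
    # Stub for a multimodal extraction model: parse "key: value" lines.
    for line in doc.raw_text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            doc.fields[key.strip().lower()] = value.strip()
    return doc

def classify(doc: Document, history: dict) -> Document:
    # Prefer the historical label for a known vendor; fall back to a "type" field.
    vendor = doc.fields.get("vendor", "")
    doc.doc_type = history.get(vendor, doc.fields.get("type", "unknown"))
    return doc

def route(doc: Document) -> Document:
    doc.queue = ROUTES.get(doc.doc_type, "manual_triage")
    return doc

def prioritize(doc: Document) -> Document:
    # Hypothetical rule: larger amounts get more urgent priority.
    amount = float(doc.fields.get("amount", "0"))
    doc.priority = 1 if amount >= 10_000 else 2 if amount >= 1_000 else 3
    return doc

def act(doc: Document) -> str:
    # A real system would call a downstream API here; this only describes the action.
    return f"send {doc.doc_type} to {doc.queue} with priority {doc.priority}"

def process(raw_text: str, history: dict) -> str:
    doc = classify(extract(Document(raw_text)), history)
    return act(prioritize(route(doc)))
```

For example, `process("Vendor: Acme\nAmount: 12500", {"Acme": "invoice"})` returns `"send invoice to accounts_payable with priority 1"`. Keeping each stage a separate function is what lets an agent replace any one of them without touching the rest.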

Live on stage, Moura showcased a crew of agents analyzing Netflix's catalog data through Databricks. When prompted about the oldest film available, specialized agents:

- Query the database independently

- Extract and validate findings

- Analyze related data patterns

- Generate comprehensive insights

The film in question – "Professor," a Hindi production from 1962 – wasn't the point. The methodology was. As Moura explained:

"Imagine someone says, 'I need a report comparing products by genre since 2014.' That person might not know SQL or dataframes. They could be chatting with a crew, and the crew could be doing this data work."
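Behind that chat, the crew's data work reduces to queries like the two below. This is a minimal sketch that uses Python's built-in `sqlite3` in place of Databricks; the `titles` table, its columns, and every row are hypothetical placeholders, not the real Netflix catalog.

```python
import sqlite3

def build_demo_db() -> sqlite3.Connection:
    # Hypothetical schema with invented toy rows, for illustration only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE titles (title TEXT, release_year INTEGER, genre TEXT)")
    conn.executemany(
        "INSERT INTO titles VALUES (?, ?, ?)",
        [
            ("Film A", 1962, "Drama"),
            ("Film B", 2014, "Drama"),
            ("Film C", 2016, "Comedy"),
            ("Film D", 2019, "Drama"),
        ],
    )
    return conn

def oldest_title(conn: sqlite3.Connection):
    # "What is the oldest film available?"
    return conn.execute(
        "SELECT title, release_year FROM titles ORDER BY release_year ASC LIMIT 1"
    ).fetchone()

def genre_counts_since(conn: sqlite3.Connection, year: int):
    # "Compare products by genre since <year>" (inclusive of that year).
    return conn.execute(
        "SELECT genre, COUNT(*) FROM titles WHERE release_year >= ? "
        "GROUP BY genre ORDER BY genre",
        (year,),
    ).fetchall()

conn = build_demo_db()
```

With these toy rows, `oldest_title(conn)` returns `("Film A", 1962)` and `genre_counts_since(conn, 2014)` returns `[("Comedy", 1), ("Drama", 2)]`. The user never sees the SQL; the crew writes, runs, and checks it.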

The Architecture of Production: Crafting Multi-Agent Systems

Nick Harvey from Lambda guided attendees through building a multi-agent system for business intelligence reporting – transforming what typically consumes "10 hours out of my month" into an automated workflow.

The workshop's central revelation wasn't technical complexity but strategic simplicity. Harvey emphasized the "80/20 rule" of agent development:

"Spend 80 percent of your time defining tasks and 20 percent defining the agent. You can spend hours architecting the perfect agent, but if you phone in the task, just like a human, the most perfect agent will still fail."

When an audience member asked how to balance specificity against conciseness in task definitions, Harvey's answer cut against standard prompting advice:

"First time ever, I'm going to tell you – don't be concise. Be very descriptive. Be very, very specific on what you want. Just as if an intern starts tomorrow and you wanted them to be perfect on a zero-shot attempt, you would take the time to literally say 'Step one, do this. Step two, do this. Output this data in a percentage format.'"
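Harvey's advice translates into task text that reads like onboarding notes for that intern. CrewAI's `Task` takes a `description` and an `expected_output`; the stdlib sketch below only assembles those two strings, and the `TaskSpec` class, goal, steps, and wording are hypothetical examples rather than material from the workshop.

```python
from dataclasses import dataclass

@dataclass
class TaskSpec:
    # Hypothetical container mirroring the two text fields a CrewAI Task takes.
    description: str
    expected_output: str

def build_task(goal: str, steps: list[str], output_format: str) -> TaskSpec:
    # Spell out every step explicitly instead of relying on a one-line summary.
    numbered = "\n".join(f"Step {i}: {step}" for i, step in enumerate(steps, start=1))
    return TaskSpec(description=f"{goal}\n\n{numbered}", expected_output=output_format)

task = build_task(
    goal="Summarize last month's sales performance.",
    steps=[
        "Load the monthly sales CSV.",
        "Compute revenue per region.",
        "Compare each region with the previous month.",
    ],
    output_format="A table of regions with month-over-month change as a percentage.",
)
```

The verbose `description` is the "step one, do this" part; `expected_output` pins down the format, such as the percentage Harvey mentioned.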

The workshop demonstrated a multi-crew architecture with specialized agents for sales, marketing, and customer support operations – each accessing specific data sources but contributing to a unified business intelligence report.

Perhaps most valuable was Harvey's emphasis on state management between agents – the hidden complexity in production systems:

"If you're working towards production, you want to get pretty explicit with your state manager. If you're expecting it to return an integer, make sure to define that, because if the agent doesn't get it right, it'll try again automatically."
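Being explicit about state types means a malformed value is rejected at the boundary instead of silently propagating to the next agent. CrewAI Flows support structured state through Pydantic models; the sketch below uses a stdlib dataclass with a hand-written check instead, and the `ReportState` fields are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ReportState:
    # Hypothetical fields for the BI report workflow.
    region: str
    open_tickets: int  # must be an integer count, not "about 40"

    def __post_init__(self):
        # bool is a subclass of int, so exclude it explicitly.
        if not isinstance(self.open_tickets, int) or isinstance(self.open_tickets, bool):
            raise TypeError(f"open_tickets must be int, got {self.open_tickets!r}")
        if self.open_tickets < 0:
            raise ValueError("open_tickets cannot be negative")

def parse_agent_output(region: str, raw: str) -> ReportState:
    # Agents return text; convert strictly so "forty" or "40.5" fail loudly.
    # A raised error is the signal an orchestrator can use to retry the step.
    return ReportState(region=region, open_tickets=int(raw.strip()))
```

`parse_agent_output("EMEA", " 40 ")` yields a state with `open_tickets == 40`, while `"forty"` raises `ValueError` and can trigger a retry.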

Document Intelligence: LlamaIndex Tackles the Unstructured Frontier

Jerry Liu, CEO of LlamaIndex, shifted focus to one of AI's most underestimated challenges: complex documents with tables, charts, irregular layouts, and mixed formats – the lifeblood of enterprise data.

"A lot of documents can be classified as complex – there's tables, charts, images, irregular layouts, headers, footers, different languages, different columns," Liu explained. "Instead of just thinking about the RAG stack, think about what functions you want to expose to an AI agent."

Liu's approach transcended traditional retrieval-augmented generation:

- Document understanding using multimodal models that "destroyed OCR"

- Normalized extraction to structure information consistently

- Tool creation to enable agents to interact with the extracted content

- Workflow orchestration through deterministic or reactive approaches
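Normalized extraction means every document lands in the same schema, whatever its layout or labels. A minimal sketch, assuming a parser has already produced raw key/value pairs; the schema, field aliases, and unit handling are hypothetical and not LlamaIndex's extraction API.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical aliases: different reports label the same metric differently.
ALIASES = {
    "revenue": ["revenue", "total revenues", "net sales"],
    "net_income": ["net income", "net income attributable to common stockholders"],
}
SCALE = {"": 1, "thousands": 1_000, "millions": 1_000_000, "billions": 1_000_000_000}

@dataclass
class FinancialMetrics:
    company: str
    revenue: Optional[float]
    net_income: Optional[float]

def to_number(value: str, unit: str) -> float:
    # Strip currency symbols and thousands separators, then apply the stated unit.
    cleaned = value.replace("$", "").replace(",", "").strip()
    return float(cleaned) * SCALE[unit]

def normalize(company: str, raw: dict[str, str], unit: str = "") -> FinancialMetrics:
    lowered = {k.strip().lower(): v for k, v in raw.items()}
    found = {}
    for field_name, names in ALIASES.items():
        for name in names:
            if name in lowered:
                found[field_name] = to_number(lowered[name], unit)
                break  # first matching alias wins
    return FinancialMetrics(company, found.get("revenue"), found.get("net_income"))
```

Once every filing maps to `FinancialMetrics`, the tools an agent calls can compare companies without caring how each report phrased its line items.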

The technical demonstration processed earnings reports from Tesla and Ford – extracting financial metrics, generating financial models, cross-referencing them, and producing a research memo through an event-driven workflow:

"Every single step in an agent workflow is defined as a set of async functions. They can listen to some initial event and then emit some output event. By defining inputs and outputs, you can have an implicit graph of the workflow you want to build."
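Liu's description (async steps that consume one event type and emit another) is enough to derive the graph. Below is a minimal stdlib sketch of that idea. It is not LlamaIndex's Workflow API, which has its own `@step` decorator and `Event` classes; the registry, event classes, and step bodies here are hypothetical stubs.

```python
import asyncio
from dataclasses import dataclass

@dataclass
class StartEvent:
    tickers: list

@dataclass
class MetricsExtracted:
    metrics: dict

@dataclass
class MemoWritten:
    memo: str

# Registry: input event type -> async step. The graph is implied by these types.
STEPS = {}

def step(event_type):
    def register(fn):
        STEPS[event_type] = fn
        return fn
    return register

@step(StartEvent)
async def extract_metrics(ev: StartEvent) -> MetricsExtracted:
    # Stub: a real step would parse each filing here.
    return MetricsExtracted({t: f"metrics for {t}" for t in ev.tickers})

@step(MetricsExtracted)
async def write_memo(ev: MetricsExtracted) -> MemoWritten:
    return MemoWritten("; ".join(sorted(ev.metrics.values())))

async def run(event):
    # Keep dispatching until no step listens for the emitted event type.
    while type(event) in STEPS:
        event = await STEPS[type(event)](event)
    return event

result = asyncio.run(run(StartEvent(["TSLA", "F"])))
```

Nobody wires `extract_metrics` to `write_memo` directly; the `MetricsExtracted` type connects them, and adding a step only requires declaring the event it listens for.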

Hallucination Defense: The Production Reliability Challenge

Rahul Duggal from Lambda confronted what many consider the final barrier to enterprise deployment: hallucination. Rather than theoretical solutions, he demonstrated Lambda's production-tested approach:

- Methodology documentation: Requiring agents to explain their calculations

- Planning stage: Forcing step-by-step reasoning before execution

- Observability tools: Implementing MLflow for execution tracing

- Asynchronous execution: Running independent agents in parallel

"One of the biggest problems is we have tens of thousands of CSV rows, and we're expecting that agent to reliably produce an accurate result, knowing hallucinations are fundamental to large language models," Duggal explained. "Before the crew executes, we tell the agent to write down step-by-step what it needs to do. By making the LLM explicitly write instruction-by-instruction plans, you drastically improve accuracy."
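The planning stage can be enforced mechanically: ask for a numbered plan, refuse to proceed if none comes back, then execute against that plan. A minimal sketch with a stubbed model call; `fake_llm`, the prompt wording, and the plan format are hypothetical stand-ins, not Lambda's actual prompts.

```python
import re

PLAN_PROMPT = (
    "Before answering, write a numbered plan, one instruction per line, "
    "describing exactly how you will compute the result.\n\nTask: {task}"
)

def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call.
    return "1. Load the CSV.\n2. Filter rows where region == 'EMEA'.\n3. Sum the revenue column."

def get_plan(task: str, llm=fake_llm) -> list[str]:
    reply = llm(PLAN_PROMPT.format(task=task))
    steps = re.findall(r"^\s*\d+\.\s+(.+)$", reply, flags=re.MULTILINE)
    if not steps:
        raise ValueError("model did not return a numbered plan; refusing to execute")
    return steps

def execution_prompt(task: str, steps: list[str]) -> str:
    # Feed the plan back so execution and the written methodology stay aligned.
    plan = "\n".join(f"{i}. {s}" for i, s in enumerate(steps, start=1))
    return f"Task: {task}\nFollow this plan exactly and show your methodology:\n{plan}"
```

Gating execution on a parseable plan also gives reviewers the methodology record that Duggal's first bullet calls for.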

An audience member posed the central architectural question: "Where do you draw the line between adding actual software to process data versus letting the LLM handle it? If you programmatically process all the data first, you get fewer hallucinations and way faster response times."

Duggal acknowledged the strategic tension: "If you want to eliminate all hallucination, you should write traditional software that does this symbolically, and then pass those responses to the LLM. The only hesitation is if there's insights or data that you don't know about that you'd want the LLM to discover. You probably want some combination of both approaches."
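One version of that "combination of both" is to compute the figures deterministically in code and hand the LLM only the verified aggregates to interpret. A minimal stdlib sketch; the CSV columns and prompt text are hypothetical.

```python
import csv
import io
from collections import defaultdict

def aggregate_revenue(csv_text: str) -> dict[str, float]:
    # Exact arithmetic in code: no model is asked to add up thousands of rows.
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["region"]] += float(row["revenue"])
    return dict(totals)

def build_prompt(totals: dict[str, float]) -> str:
    # The LLM receives finished numbers and is asked only for interpretation.
    lines = "\n".join(f"- {region}: {value:,.2f}" for region, value in sorted(totals.items()))
    return (
        "These totals were computed exactly by code. Do not recompute them; "
        "explain notable patterns and suggest follow-up questions.\n" + lines
    )
```

The symbolic half removes arithmetic hallucination, and the model still has room to surface the insights nobody thought to code for.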

Another attendee asked how to benchmark accuracy. Duggal described the approach Lambda used for its customer-facing assistant:

"We took our scraped pages and had GPT generate an eval dataset with appropriate answers, questions, and citations. We did manual review for the first 100, then scaled with synthetic data. From a user perspective, we add thumbs up/down buttons and track the results."
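The loop Duggal described has two halves: an offline question set with expected answers and citations, and an online tally of thumbs up/down. A minimal sketch of both; the record fields and the citation-match scoring rule are hypothetical simplifications, and generating the questions with GPT is left out.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class EvalItem:
    question: str
    expected_answer: str
    citation: str           # page the answer should cite
    reviewed: bool = False  # True once a human has checked it (e.g. the first 100)

def score(items, answer_fn) -> float:
    # answer_fn(question) -> (answer, citation); score = share of correct citations.
    if not items:
        return 0.0
    hits = sum(1 for item in items if answer_fn(item.question)[1] == item.citation)
    return hits / len(items)

class FeedbackLog:
    def __init__(self):
        self.votes = Counter()

    def record(self, thumbs_up: bool) -> None:
        self.votes["up" if thumbs_up else "down"] += 1

    def approval_rate(self):
        total = self.votes["up"] + self.votes["down"]
        return self.votes["up"] / total if total else None
```

Citation matching is only one possible metric; answer correctness would typically need a separate grader or human review.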

The Economics of Scale: Production Without the Premium

Perhaps the most surprising revelation came when Harvey discussed the economic viability of these systems:

"Depending on the model you use on the Lambda inference API – we are the lowest cost inference API available, I've verified it – it costs about 30-35 cents to run this whole workflow. I've pushed about 26 million tokens through our API, and it cost me about five bucks."

If those figures hold, cost stops being the main barrier to adoption, making agent systems practical even for large-scale implementations.
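Harvey's figures imply a blended rate that is easy to check. This back-of-the-envelope sketch uses only the numbers he quoted (about $5 for about 26 million tokens, and 30-35 cents per workflow run). It assumes a single blended per-token price, which real pricing, varying by model and by input versus output tokens, will not match exactly.

```python
def cost_per_million(total_cost_usd: float, total_tokens: int) -> float:
    # Blended price per million tokens.
    return total_cost_usd / total_tokens * 1_000_000

def tokens_for_budget(budget_usd: float, rate_per_million: float) -> float:
    # How many tokens a given spend buys at that blended rate.
    return budget_usd / rate_per_million * 1_000_000

rate = cost_per_million(5.0, 26_000_000)  # about $0.19 per million tokens
low, high = (tokens_for_budget(c, rate) for c in (0.30, 0.35))  # about 1.56M to 1.82M tokens per run
```

So a single workflow run, at the same blended rate, would correspond to roughly 1.6 to 1.8 million tokens.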

Beyond Basics: The Art of Agent Orchestration

The workshop explored advanced patterns that separate proof-of-concepts from production systems:

- Hierarchical workflows: Using manager LLMs to delegate tasks dynamically

- Asynchronous execution: Running independent agents in parallel

- Human-in-the-loop: Configuring fallbacks after specified retry attempts
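Retry-then-escalate is simple to express. A minimal sketch, assuming an agent call that raises `ValueError` on invalid output; `run_with_fallback`, `max_retries`, and the `ask_human` callback are hypothetical names, not a CrewAI setting.

```python
from typing import Callable, TypeVar

T = TypeVar("T")

def run_with_fallback(
    attempt: Callable[[], T],
    ask_human: Callable[[str], T],
    max_retries: int = 3,
) -> T:
    # Try the automated path up to max_retries times, then hand off to a person.
    last_error = None
    for _ in range(max_retries):
        try:
            return attempt()
        except ValueError as err:  # e.g. output failed validation
            last_error = err
    return ask_human(f"Agent failed {max_retries} times: {last_error}")
```

The human sees the last error, so the escalation arrives with context instead of as a bare failure.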

Harvey explained hierarchical workflows: "You don't have to assign an agent to every workflow. If you switch to a hierarchical workflow, it'll introduce a manager LLM, and then based on the task, the manager agent will figure out which agent to delegate to."
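In a hierarchical setup, the manager picks the agent rather than the task author. CrewAI exposes this as a hierarchical process (`Process.hierarchical`) with a manager LLM. The sketch below shows only the delegation shape: a keyword score stands in for the manager LLM's judgment, and the agent names and keywords are hypothetical.

```python
# Hypothetical agents and the topics each one owns.
AGENTS = {
    "sales_analyst": {"revenue", "pipeline", "deal", "quota"},
    "marketing_analyst": {"campaign", "leads", "ctr", "spend"},
    "support_analyst": {"ticket", "churn", "csat", "escalation"},
}

def delegate(task: str) -> str:
    # Stand-in for the manager LLM: pick the agent whose keywords best match the task.
    words = set(task.lower().replace(",", " ").replace(".", " ").split())
    scores = {name: len(words & keywords) for name, keywords in AGENTS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "manager_handles_directly"
```

`delegate("Summarize open ticket escalation trends")` routes to `support_analyst`. A real manager LLM replaces the keyword match with reasoning over each agent's role description.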

Duggal demonstrated asynchronous execution: "Those three crews – sales operations, marketing, and customer success – have nothing to do with each other. This function allows you to run these crews asynchronously. All three crews were running async, which allows for significant speedup."
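Because the three crews share no inputs, they can be awaited concurrently. A minimal `asyncio` sketch: `asyncio.sleep` stands in for each crew's LLM and data calls, and `run_crew` and `build_report` are hypothetical names. CrewAI also provides async kickoff variants, which this sketch does not use.

```python
import asyncio
import time

async def run_crew(name: str, seconds: float) -> str:
    await asyncio.sleep(seconds)  # placeholder for the crew's real work
    return f"{name} section"

async def build_report() -> list[str]:
    # gather runs the independent crews concurrently and preserves argument order.
    return await asyncio.gather(
        run_crew("sales operations", 0.2),
        run_crew("marketing", 0.2),
        run_crew("customer success", 0.2),
    )

start = time.perf_counter()
sections = asyncio.run(build_report())
elapsed = time.perf_counter() - start  # roughly 0.2 s rather than 0.6 s sequentially
```

Total latency tracks the slowest crew rather than the sum of all three, which is where the speedup comes from.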

When questioned about security and prompt injection, Jerry Liu emphasized dual safeguards: robust authentication layers and human validation for consequential actions. "Any action-taking stuff, I think generally people still want some sort of human review validation."

Strategic Questions for the Agentic Era

The workshop surfaced critical questions for organizations charting their agent strategy:

- What workflows waste the most internal time and talent?

- Which processes need agents versus traditional software?

- Where does agent state need clarity or human oversight?

- What assumptions must observability confirm or disprove?

- How will scaling change failure patterns?

- What is your strategy for managing costs as implementations scale?

- How will you handle the transition from experimentation to enterprise deployment?

The Blueprint Emerges

It is rare to witness a moment where multiple primitives shift together: reasoning, orchestration, memory, interface. This event, featuring the CEOs of CrewAI and LlamaIndex side by side with Lambda guiding real-time builds, gave nearly 200 attendees more than technical demonstrations.

It offered blueprints for the agentic era.

As attendees filed out after three hours, the sentiment was captured by one developer's remark: "This is going to save so many hours of my life."

The AI agent revolution isn't arriving someday. It's already transforming enterprises, one workflow at a time.

This event was hosted by the San Francisco MLOps Community at Microsoft Reactor. If you're exploring AI agents, check out the open-source frameworks from CrewAI and LlamaIndex, and consider Lambda's inference API for cost-effective deployment.

Originally posted at:
