Drug Discovery with Deep Learning at Recursion

Drug discovery is time consuming, difficult and expensive

November 26, 2022

Drug discovery is time consuming, difficult and expensive. It also has a high failure rate. This post explains some of the unique challenges that Recursion faces in operationalizing deep learning to build maps of human cellular biology used to develop biological insights and accelerate drug discovery.

Problem context

Recursion is a biotechnology company with the mission to decode biology to radically improve lives. An important aspect of this mission is to accelerate drug discovery. Drug discovery is notoriously time-consuming and difficult: it costs billions of dollars, and 90% of drugs that enter clinical trials fail before making it to market.

This post describes how Recursion’s machine learning (ML) specialists (engineers, scientists, directors) have developed a sophisticated approach to operationalizing deep learning – model training, inference, and serving of biologically sound embeddings – to build maps of biology and find new potential treatments.

Challenges of drug discovery

One of Recursion’s major focus areas is building models and methods that accelerate target-agnostic drug discovery. Instead of building specific models for each disease we want to find treatments for, we build models that generalize across diseases. Recursion has three drug candidates in Phase 2 clinical trials and our models have enabled the advancement of dozens more candidates in earlier stages. We believe that if we can continue improving on our approaches, then we will be able to help millions of patients get the treatments they need.

Two of the big challenges in any application of drug discovery are the lack of adequately labeled data (e.g., which molecular compounds treat a given disease) and the complexity of the connection between the input data and that label. The process of getting a drug candidate from the lab to the market takes, on average, 14 years. Even getting preliminary data about whether a drug is effective in vitro can take years and cost more than is practical for building training datasets.

Our focus on building target-agnostic models, including for diseases currently without treatments, means that we need to develop models that generalize well. While we started with manually engineered features, we now use representation learning methods to design models that can genuinely generalize beyond known biological relationships.

Our ML Scientists use techniques that include deep learning, transfer learning, and domain adaptation to design such models. We need unique MLOps capabilities to support our ML teams in improving these models and data pipelines that leverage the petabytes of data produced by our laboratories.

Recursion’s data

Recursion’s labs run huge numbers of high-content, high-throughput screening assays. One of the datasets we’ve assembled with these robotic labs is a constantly growing collection of over 19 PB of cell images.

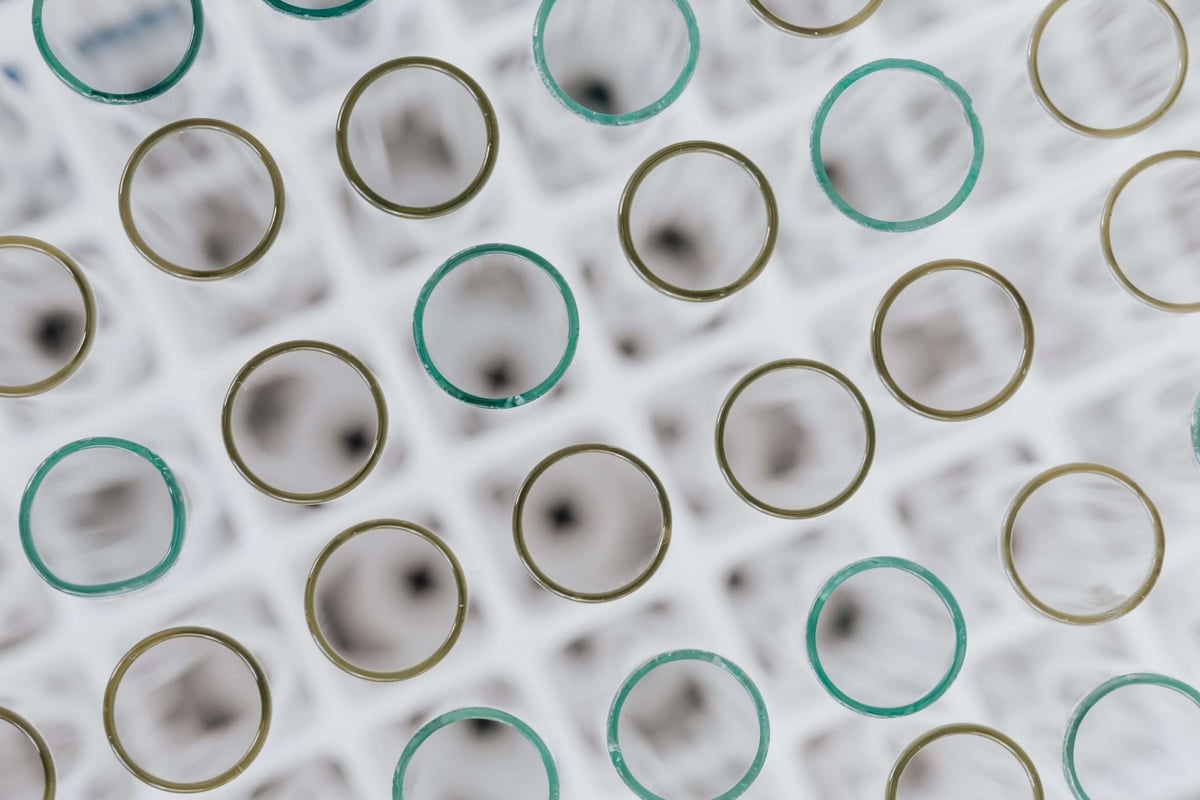

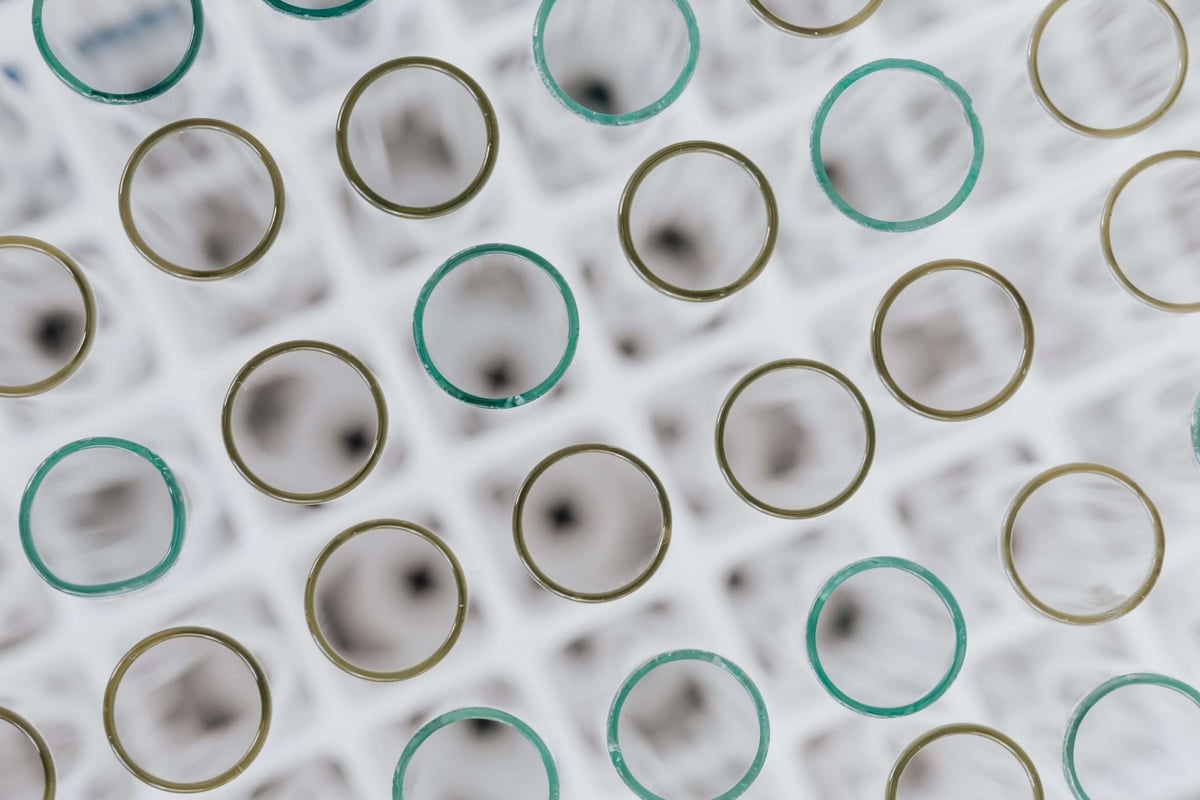

This microscopy data was generated using an in-house version of a high-content assay called Cell Painting, which uses fluorescent dyes to capture the morphology of different parts of cells. The high-resolution microscopy images that we take from these assays show the impact of performing perturbations on cells – altering their biological function via techniques like siRNA- or CRISPR-based genetic knockdown or exposure to small molecules.

Images of two different genetic conditions (rows) in HUVEC cells across four experimental batches (columns) in dataset RxRx1. We’ve released ~1 TB of our data to the public across four RxRx datasets.

Modeling paradigm

Recursion first used this image data with a tool called CellProfiler to extract biological features. While CellProfiler offers machine learning based features, it also includes a large number of manually-engineered features extracted with traditional computer vision techniques. These computer vision techniques allow CellProfiler to work in a general-purpose way across different cell types and disease targets.

In an effort to increase our confidence in our models, we invested in deep learning (DL). We found that DL models specially trained on our datasets extract unique features better suited to our downstream tasks. Our DL models use weakly supervised learning to classify which perturbation was applied to the cells based on the input image. For us, this classification task results in high quality representations when trained on the right data. The classification objective is purely a proxy task – the output of each model that is used in downstream applications is a hidden layer that we treat as an embedding of the input image.
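
To make the "hidden layer as embedding" idea concrete, here is a minimal sketch in plain Python. It is not Recursion's architecture: the class name `ToyPerturbationClassifier`, the layer sizes, and the random weights standing in for trained ones are all assumptions made for illustration. The point is only that the classification head serves the proxy task, while the hidden activations are what gets reused downstream.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Random weights standing in for trained ones (n_out rows of n_in values)."""
    scale = 1.0 / math.sqrt(n_in)
    return [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]


def linear(weights, x):
    """Matrix-vector product: one output value per weight row."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


class ToyPerturbationClassifier:
    """Hypothetical two-layer network: input -> hidden (embedding) -> class scores."""

    def __init__(self, n_features, embedding_dim, n_perturbations, seed=0):
        rng = random.Random(seed)
        self.hidden = make_layer(n_features, embedding_dim, rng)
        self.head = make_layer(embedding_dim, n_perturbations, rng)

    def embed(self, x):
        """Hidden-layer activations (ReLU); this is what downstream tasks consume."""
        return [max(0.0, v) for v in linear(self.hidden, x)]

    def classify(self, x):
        """Proxy task: probability of each perturbation class."""
        return softmax(linear(self.head, self.embed(x)))


model = ToyPerturbationClassifier(n_features=8, embedding_dim=4, n_perturbations=3)
image_features = [0.1 * i for i in range(8)]  # stand-in for a preprocessed image

embedding = model.embed(image_features)         # kept for downstream use
probabilities = model.classify(image_features)  # only needed during training
```

In a real system the classification head would be used only to compute the training loss, and inference jobs would call the equivalent of `embed` alone.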

Transfer Learning

Our modeling paradigm is a form of transfer learning. Models trained via narrow, well-defined tasks like perturbation classification produce embeddings used in downstream applications that help our biologists discover drugs.

This transfer learning approach is analogous to the approach taken in natural language processing (NLP) for training word embeddings and language models. Word embeddings, such as Word2vec, are often trained to predict the surrounding context of a word (specifically, pointwise mutual information statistics). The resultant embeddings provide dense vector representations of words that are much more generally useful for NLP tasks beyond context prediction.

NLP practitioners design many evaluation tasks and protocols in order to assess and improve word embedding algorithms. One common task is using the word embeddings for analogy completion; e.g., “Paris” is to “France” as “Berlin” is to ___? Such tasks are necessary because accuracy at the training task (e.g., context prediction) does not necessarily translate to good performance in downstream applications (e.g., analogy completion).
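
As a small illustration of analogy completion, the sketch below answers the question with vector arithmetic and cosine similarity. The four-dimensional vectors are hand-made for this example (they are not trained embeddings), and `complete_analogy` is a hypothetical helper name.

```python
import math

# Hand-made 4-d vectors: [is_capital, french, german, spanish].
toy_vectors = {
    "Paris":   [1.0, 1.0, 0.0, 0.0],
    "France":  [0.0, 1.0, 0.0, 0.0],
    "Berlin":  [1.0, 0.0, 1.0, 0.0],
    "Germany": [0.0, 0.0, 1.0, 0.0],
    "Madrid":  [1.0, 0.0, 0.0, 1.0],
    "Spain":   [0.0, 0.0, 0.0, 1.0],
}


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def complete_analogy(a, b, c, vectors):
    """Answer 'a is to b as c is to ?' with the nearest neighbour of b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    # The three query words are excluded so the answer is a new word.
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))


answer = complete_analogy("Paris", "France", "Berlin", toy_vectors)
print(answer)  # Germany
```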

We’ve developed and continue to grow a suite of benchmarks that similarly aim to measure how well-suited our embeddings are for downstream applications. These benchmarks quantify, for example, similarities between the embeddings of genes which are known to have the same effect on cells.
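
One simple way such a benchmark could be scored is sketched below, under stated assumptions: the gene names, the two-dimensional embeddings, and the `relationship_score` metric are invented for illustration and are not Recursion's actual benchmarks. The score is the mean cosine similarity of gene pairs known to be related minus the mean over all other pairs, so a higher value means the embeddings separate known relationships more cleanly.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def relationship_score(embeddings, known_pairs):
    """Mean similarity of known-related pairs minus mean similarity of the rest."""
    known = {frozenset(pair) for pair in known_pairs}
    related, unrelated = [], []
    for a, b in combinations(sorted(embeddings), 2):
        sim = cosine(embeddings[a], embeddings[b])
        (related if frozenset((a, b)) in known else unrelated).append(sim)
    return sum(related) / len(related) - sum(unrelated) / len(unrelated)


# Hypothetical ground truth: A/B share an effect on cells, as do C/D.
known_pairs = [("GENE_A", "GENE_B"), ("GENE_C", "GENE_D")]

# Two made-up embedding sets, as if produced by two different models.
embeddings_v1 = {
    "GENE_A": [1.0, 0.0], "GENE_B": [0.9, 0.1],
    "GENE_C": [0.0, 1.0], "GENE_D": [0.1, 0.9],
}
embeddings_v2 = {
    "GENE_A": [1.0, 0.0], "GENE_B": [0.0, 1.0],
    "GENE_C": [1.0, 0.1], "GENE_D": [0.1, 1.0],
}

score_v1 = relationship_score(embeddings_v1, known_pairs)
score_v2 = relationship_score(embeddings_v2, known_pairs)
print(f"v1: {score_v1:.3f}  v2: {score_v2:.3f}")
```

Here the first embedding set places related genes close together and scores higher, which is the kind of quantifiable comparison between embedding sets that a benchmark suite makes possible.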

Recursion’s internal processes ensure that these benchmarks are robust, scalable, and connected to business objectives. This enables quantifiable comparisons between different sets of embeddings. Such comparisons help our ML teams determine how to refine their models and provide data for making empirically-grounded decisions regarding model selection for production settings.

MLOps

The scale of Recursion’s data and the complexity of building representation models of biology yield many interesting MLOps challenges. For the rest of the post, we’ll highlight two:

- The challenges that ML scientists and engineers face when training DL model variations (varying model backbones, loss functions, and hyperparameters) on massive amounts of data;

- The challenges of running inference at scale to produce embeddings and enable robust benchmarking of the resultant embeddings.
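
To give a sense of how quickly model variations multiply, the sketch below expands a small search space into one configuration per training job. The backbone names, loss names, and learning rates are placeholder values chosen for illustration; the post does not say which ones Recursion uses.

```python
from itertools import product

# Hypothetical search space; every value here is a placeholder.
search_space = {
    "backbone": ["resnet50", "densenet161"],
    "loss": ["cross_entropy", "arcface"],
    "learning_rate": [1e-3, 1e-4],
}


def expand_grid(space):
    """Return one config dict per combination of the listed options."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]


configs = expand_grid(search_space)
print(len(configs))  # 8 (2 backbones x 2 losses x 2 learning rates)
```

Each added option multiplies the number of training jobs, which is why job submission and tracking need to be automated.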

Challenge 1: Model training

In 2021, Recursion purchased BioHive-1, at the time the 84th fastest publicly benchmarked supercomputer in the world (it now ranks #106). This on-prem cluster, based on the SuperPOD reference architecture, is equipped with hundreds of powerful NVIDIA GPUs and nearly 40,000 CPU cores.

The ML Platform team at Recursion enables ML engineers and scientists to train DL models on this machine easily and at scale. To this end, we’ve adopted Determined AI as part of our tech stack.

We coupled Determined with our own custom software for submitting jobs and managing training configurations and datasets. Our software is maintained with GitOps and DevOps best practices, using a great tool called Codefresh for continuous integration / continuous delivery (CI/CD). This coupling with Determined enables our users to easily train many DL model variants simultaneously and monitor their progress during training. We also leverage MLflow as an experiment tracker and model registry to checkpoint our trained models.

Our training loop can be summarized as follows:

Our CI/CD pipeline automatically builds a Docker container that fully defines the training environment and registers it in Google Container Registry (GCR). This container contains the dependencies necessary to submit training jobs distributed across BioHive-1’s GPU nodes.

Once a DL model training job finishes successfully, we save its artifacts to MLflow, backed by Google Cloud Storage (GCS). Users and automated systems can easily retrieve these model artifacts for use in downstream inference jobs or for fine-tuning.

This workflow addresses challenges such as environment configuration, dataset management, and distributing load across the underlying hardware. Such abstraction enables ML scientists to focus on the intricacies of refining and prototyping deep learning models without getting bogged down by DevOps challenges.

Challenge 2: Model inference and benchmarking

After training a DL model, we need to benchmark it against previously trained models. Given that we’re using the DL model for the purpose of transfer learning, benchmarking requires us to perform inference with the DL model to produce embeddings of the images in our benchmarking datasets.

Our model evaluation benchmarks require running hundreds of millions of images through the DL model and evaluating the biological soundness of the resulting embeddings. Our ML scientists may train hundreds of DL models in a month, so operationalizing this workflow is crucial.

We run DL model inference workloads on preemptible VM instances equipped with GPUs in Google Kubernetes Engine in addition to on-prem. This design allows our ML scientist users to submit inference jobs without needing to worry about configuring resource scheduling, parallelization, and allocation.
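
Preemptible instances can be reclaimed at any time, so inference work is typically split into small units that can be retried independently. The sketch below shows that pattern in plain Python under loud assumptions: preemption is simulated deterministically, the "embedding" is a placeholder value, and `make_shards`, `embed_shard`, and `run_inference` are hypothetical names rather than part of any Recursion or Google Cloud API.

```python
class Preempted(Exception):
    """Signals that a (simulated) preemptible worker was reclaimed mid-shard."""


def make_shards(image_ids, shard_size):
    """Split the work into fixed-size shards that can be retried independently."""
    return [image_ids[i:i + shard_size] for i in range(0, len(image_ids), shard_size)]


def embed_shard(shard_index, shard, attempt):
    # Simulation only: odd-numbered shards lose their worker on the first attempt.
    if attempt == 0 and shard_index % 2 == 1:
        raise Preempted(f"shard {shard_index} preempted")
    # Placeholder "embedding": a real job would run the model on each image here.
    return {image_id: [float(len(image_id))] for image_id in shard}


def run_inference(image_ids, shard_size=4, max_attempts=3):
    results, preemptions = {}, 0
    for shard_index, shard in enumerate(make_shards(image_ids, shard_size)):
        for attempt in range(max_attempts):
            try:
                results.update(embed_shard(shard_index, shard, attempt))
                break  # shard done; move on to the next one
            except Preempted:
                preemptions += 1  # only this shard is retried, not the whole job
        else:
            raise RuntimeError(f"shard {shard_index} failed {max_attempts} times")
    return results, preemptions


image_ids = [f"img_{i:03d}" for i in range(10)]
embeddings, preemptions = run_inference(image_ids)
print(len(embeddings), preemptions)  # 10 1
```

Keeping shards small bounds the work lost to any single preemption, at the cost of more scheduling overhead.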

After inference is performed with a DL model, we can evaluate the quality of its embeddings. While the embeddings produced by the models are substantially smaller than the original images, some of these benchmarks involve robust non-parametric statistical tests and other computationally intensive tasks. We support a framework that allows data scientists, ML practitioners, and computational biologists to author metrics, configure the datasets used to benchmark with these metrics, and run them at scale.
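
As one example of a non-parametric test that fits this setting, the sketch below runs a one-sided permutation test on two groups of similarity scores. The numbers are made up for illustration, and the post does not specify which tests Recursion uses; `permutation_test` is a hypothetical helper.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=2000, seed=0):
    """One-sided p-value for the hypothesis mean(group_a) > mean(group_b)."""
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign group labels at random
        if mean(pooled[:n_a]) - mean(pooled[n_a:]) >= observed:
            hits += 1
    # The +1 terms count the observed labelling as one of the permutations.
    return (hits + 1) / (n_permutations + 1)


# Made-up cosine similarities between embeddings of gene pairs.
related_sims = [0.82, 0.77, 0.91, 0.85, 0.79, 0.88]
unrelated_sims = [0.12, 0.30, 0.05, 0.22, 0.18, 0.27, 0.09, 0.15]

p_value = permutation_test(related_sims, unrelated_sims)
print(f"p = {p_value:.4f}")
```

The test makes no distributional assumptions, but its cost grows with the number of permutations and the size of the groups, which is one reason benchmarks of this kind can be computationally intensive even though the embeddings themselves are small.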

Enabling velocity both in model development and model evaluation is critical for our success. Operationalizing machine learning at Recursion requires support for standardized, dynamic validation datasets, especially given that (a) we are constantly running new biological experiments to gather more data (including from different domains like new types of cells), and (b) research in computational biology and deep learning methods moves at a rapid pace.

Our benchmark suite produces a final report that summarizes the results and quantifies the biological integrity of the DL model’s image embeddings. ML scientists and decision makers use the report to determine whether a DL model should be selected for production. It also helps our researchers understand how their choices impact model performance, making it easier to come up with new hypotheses to test.

Conclusion

MLOps is a demanding and relatively new domain, and it demands even more in the context of professional computational biology. Biotechnology is a cutting-edge field, and continuously applying state-of-the-art deep learning methods to petabytes of image data, while staying up to date on the best technology stacks and infrastructure to support this at scale, is no small task.

In this post, we’ve conveyed some of the unique challenges surrounding operationalizing deep learning to enable drug discovery at Recursion. We hope that our experiences, lessons, and solutions provide a source of inspiration for other ML professionals and companies.

Authors’ Bio: Kian Kenyon-Dean (Senior Machine Learning Engineer), Jake Schmidt (Machine Learning Engineer), John Urbanik (Staff Machine Learning Engineer), Ayla Khan (Senior Software Engineer), Jess Leung (Associate Director, Machine Learning Engineering), and Berton Earnshaw (Machine Learning Fellow) work at Recursion, where they are solving problems in the pharmaceutical space (decoding biology) in style, with AI/ML.