Governance for AI Agents with Data Developer Platforms

# Governance for AI Agents

# Data Developer Platforms

# DDP

Facets of Governance for AI Agents, Current Challenges/Risks and Data/AI Architectures that Address them.

December 10, 2024

Brij Mohan Singh

Travis Thompson

Ritwika Chowdhury

Imagine having an AI that not only executes a set of functions but strategises, thinks ahead, responds to your users, and humanizes the entire user journey.

When AutoGPT debuted, we saw this shift from reactive tools to proactive decision-making systems. It was a bold step forward, tackling complex tasks like market analysis, website creation, and logistics planning. It could even generate subagents: specialized AI units designed to complete specific parts of a broader goal.

Initially requiring user consent for major decisions, AutoGPT sparked conversations about how far such autonomy should go. The prospect of fully independent agents raises crucial questions about trust, accountability, and alignment with human goals.

Today, we have a diverse array of autonomous AI agents, but this introduces a paradox!

On the one hand, these AI agents could revolutionize productivity by automating tedious tasks and mediating or replacing uncomfortable human interactions, such as negotiating a complaint or managing a business transaction. On the other hand, delegating significant decision-making and operational tasks to AI agents raises pressing questions about governance.

🎁 Editor’s Recommendation: Before diving in, if you haven’t already checked it out, tune in to our latest episode of Analytics Heroes with Abhinav Singh, where he spills the beans on everything from Cloud Complications and Data Stack Shortcomings to Unified Platforms and Data Products!

Impact of Increasing Use of AI Agents on Governance

Let’s start from the foothill of the mountain!

Just the idea that agentic AI enables creativity and autonomous decisions is itself a risk. Such an approach allows the agents to penetrate key systems and perform actions across different platforms, sites, devices, and networks, often directed by human-created prompts with negligible intervention during execution.

Unlike traditional AI models, agents don’t just offer insights—they ACT. And those actions, be it executing phishing instructions or mismanaging sensitive tasks, can have direct consequences for individuals and orgs, sometimes even without their active knowledge.

Moreover, the risks compound when agents collaborate or rely on each other, creating a cascading effect from a single system failure. As these agents grow more capable, the trade-offs between efficiency and risk become sharper. To navigate these challenges, frameworks like the economic theory of principal-agent problems and the common law of agency provide a lens to balance these trade-offs effectively.

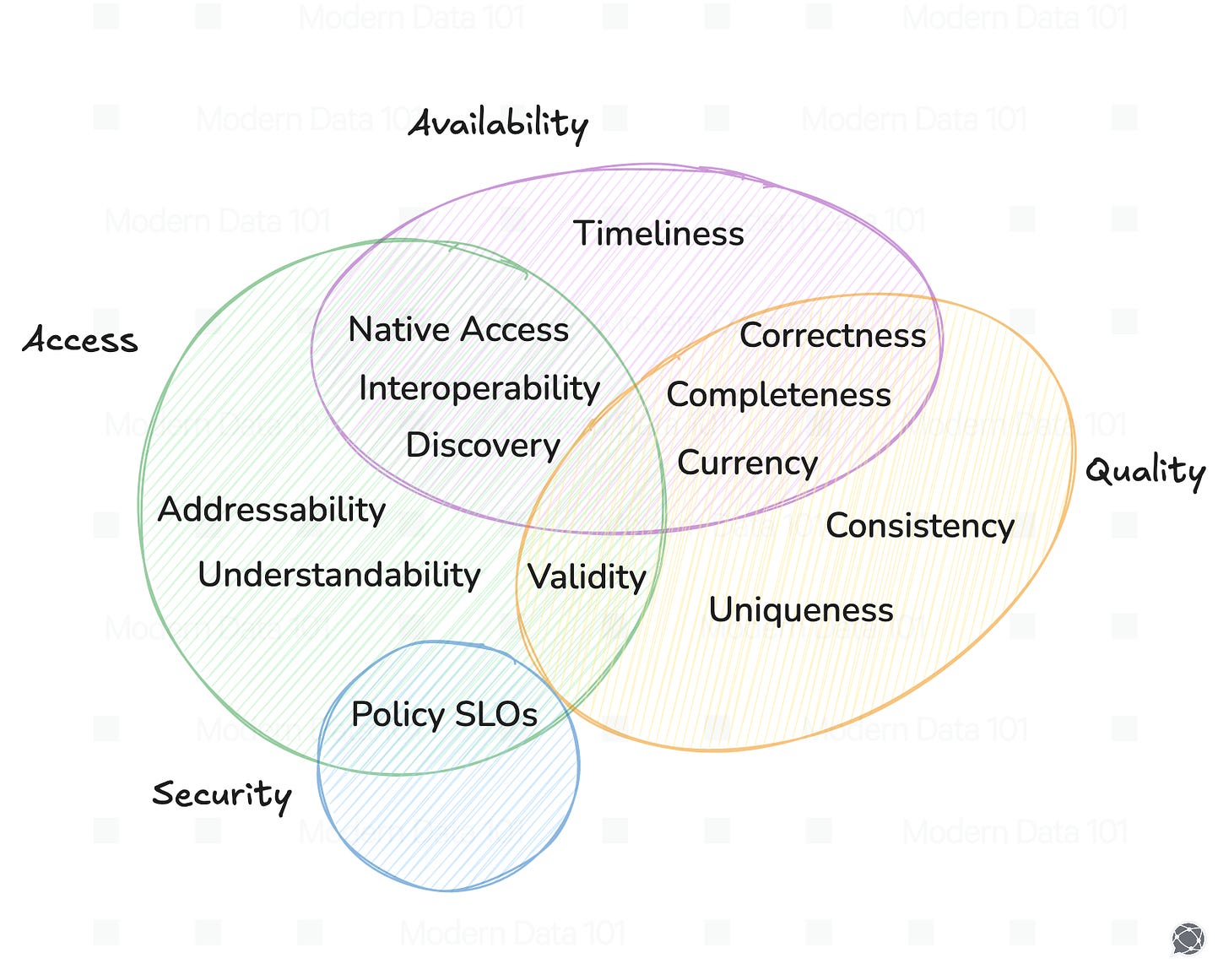

The Many Dimensions of Governance

Governance is a multifaceted concept integral to building a wholesome system that promotes ethical decision-making, robust compliance measures, security frameworks, and proactive accountability—all converging to ensure sustainability, trust, and alignment with organizational and societal goals.

The Multiple Facets of Governance | Source: MD101 Archives

There can be an extensive classification of governance risks that AI agents bring in. However, in several cases, the risks they bring don’t always fit neatly into categories—they often overlap and create new challenges.

To tackle these risks, the key is understanding the root causes to help address the problem at its source, making solutions more purpose-driven and long-lasting.

💡What you might want to look for?

Often, the nature of these impacts can be way scarier than you suspect. Sometimes, these AI agents can have what we call delayed and diffused impacts, meaning their effects might show up much later and be hard to spot because they’re spread across many cases.

For instance, imagine a banking org deploying an AI agent to automate the loan approval process, and this agent denies loans to applicants from underserved communities because of biased data. The exclusion remains hidden until audits expose it years later (a delayed impact). Similar agents could restrict entire demographics from accessing credit, stifling economic growth (a diffused impact).

The agent might favour the bank’s own loan products, distorting market competition and limiting customer choices, exhibiting a self-preferencing behaviour.

If AI agents replace human interactions, they risk eroding trust, as customers may feel disconnected, dealing with impersonal systems that lack empathy.

Policy Confusion & Risks With Agentic Replacement of Humans

As we have seen, these AI agents often run behind an LLM interface, so they inherit the governance challenges around LLMs too. Without advanced agents, the typical flow is that users ask natural language questions through the LLM interface, the interface returns a semantic query, and the user runs this query to obtain the required output.

By outputting the query instead of the data, the responsibility for executing the query shifts to the human user. Here, when the user runs the query on a separate platform (e.g., a workbench), their individual access policies naturally apply, ensuring compliance with governance rules and preventing unauthorized access.

However, introducing agents often attempts to replace this human intervention. If we try to pinpoint one major angle for viewing the risks, it is the potential of these agents to largely remove humans from the loop.

The LLM interface operates as a machine user and directly queries a dataset and provides output, bypassing granular access controls tied to individual users. Allowing the LLM to both query and return data output risks circumventing user-specific permissions.

The impact:

- ambiguity in enforcing data policies (since the interface may inadvertently access data that certain human users are not authorized to see)

- unauthorised access, granted unintentionally by the LLM (if the human querying through the interface lacks access to certain datasets)
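One way to keep user-specific permissions in force when the agent runs the query itself is to execute on behalf of the requesting human rather than under the agent's own machine identity. The following is a minimal Python sketch of that idea. The `User` record, the in-memory `DATASETS` store, and `run_as_user` are hypothetical names invented for illustration, not part of any real platform API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    allowed_datasets: frozenset  # datasets this human may read


class AccessDenied(Exception):
    """Raised when the requesting user may not read a dataset."""


# Hypothetical in-memory stand-in for a warehouse: dataset name -> rows.
DATASETS = {
    "public_rates": [{"product": "savings", "rate": 0.03}],
    "customer_pii": [{"customer": "c-001", "ssn": "xxx-xx-1234"}],
}


def run_as_user(user: User, dataset: str) -> list:
    """Run the agent's query with the human requester's permissions,
    not with the broader permissions of the agent's service account."""
    if dataset not in user.allowed_datasets:
        raise AccessDenied(f"{user.name} may not read {dataset}")
    return DATASETS[dataset]
```

With this shape, an analyst whose allow-list contains only `public_rates` gets rows back for that dataset, while the same request against `customer_pii` raises `AccessDenied` even though the agent itself could technically reach the data.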

Security Risks Due to Faltering Code and Access Management

Unlike traditional AI, which focuses on inputs, processing, and outputs, AI agents interact with broader systems and processes. This creates vulnerabilities across entire event chains that may go unnoticed by human operators.

Risks coming from data exposure and exfiltration

Data exposure and exfiltration can occur at any point along the chain of agent-driven events. Additionally, agents can consume system resources excessively, potentially leading to denial-of-service conditions or financial burdens.

Imagine an AI agent tasked with optimizing credit card transaction approvals during peak shopping hours. If the agent begins querying the core banking system frequently without upper limits, it could overwhelm the system's capacity and cause delays or downtime.

This could result in a "denial-of-service" where legitimate transactions fail, frustrating customers and breaking trust or impacting experience. Additionally, there may be spikes in operational costs from excessive computational load.
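The "without upper limits" part of this scenario is what a call budget addresses. Below is a minimal Python sketch of a sliding-window cap on agent calls. `QueryBudget` and its parameters are hypothetical names chosen for illustration, and a production system would more likely enforce such limits at a gateway or in the platform itself.

```python
import time


class QueryBudget:
    """Sliding-window cap on how many calls an agent may make."""

    def __init__(self, max_calls: int, window_seconds: float, clock=time.monotonic):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self.calls = []     # timestamps of calls inside the current window

    def allow(self) -> bool:
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        self.calls = [t for t in self.calls if now - t < self.window_seconds]
        if len(self.calls) >= self.max_calls:
            return False  # over budget: the agent should back off or queue
        self.calls.append(now)
        return True
```

The agent would call `allow()` before each query to the core banking system and skip or delay the query whenever it returns `False`, which bounds both the load on the downstream system and the compute bill.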

Risks arising due to coding errors

Hard-coding credentials in AI agents, especially in low-code or no-code environments, makes it easy for attackers to access sensitive data or exploit the system.

There’s a risk of malicious code being hidden inside documents read by AI agents, which can lead to code execution when the agent processes the document.

This attack vector isn’t new, as this is a classic SQL injection or database stored procedure attack. There are emerging mitigation techniques that leverage data loss prevention-type patterns to limit or exclude data types from being learned. ~ Dan Meacham, VP, Cyber Security and Operations, CSO / CISO at Legendary Entertainment

Safety Risks Associated With Ambiguous Scope of Responsibilities

Agents are deployed to execute a specific task or a set of tasks, which requires defining the scope of responsibilities for the agent.

This introduces a set of new challenges around scope creep, misalignments in roles and security risks around access controls. The ambiguity of responsibilities or even a lack of clearly scoped agent responsibilities can lead to overreach.

Risks in a single-agent system

For instance, a single agent that handles both account balance inquiries and managerial reporting risks exposing sensitive data unless clear role-based boundaries are in place.

When deployed for both account holders and managers, permission distinctions are critical. Weak access controls could lead to unauthorized responses to sensitive queries (e.g., "top ten high-balance accounts").

An account holder querying the same agent framework as a manager could unintentionally access sensitive organizational data, increasing the risk of unintended exposure.

🔑 Defining clearly scoped use cases and creating the right modularity even while building agents is front and centre!
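Modularity of this kind can be sketched as separate agents per audience, each holding only the actions it is meant to perform. The Python below is an illustrative sketch under that assumption. `ScopedAgent`, the two tool functions, and the sample `ACCOUNTS` data are all made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict

ACCOUNTS = {"alice": 120.0, "bob": 9500.0, "carol": 40.0}  # made-up data


def own_balance(user: str) -> float:
    return ACCOUNTS[user]


def top_balances(n: int) -> list:
    # Account names ordered from highest to lowest balance.
    return sorted(ACCOUNTS, key=ACCOUNTS.get, reverse=True)[:n]


@dataclass
class ScopedAgent:
    name: str
    tools: Dict[str, Callable]  # the only actions this agent can take

    def call(self, tool: str, *args):
        if tool not in self.tools:
            raise PermissionError(f"{self.name} has no tool named {tool!r}")
        return self.tools[tool](*args)


customer_agent = ScopedAgent("customer_agent", {"own_balance": own_balance})
manager_agent = ScopedAgent(
    "manager_agent", {"own_balance": own_balance, "top_balances": top_balances}
)
```

Because `customer_agent` never receives the `top_balances` tool, a "top ten high-balance accounts" request routed to it fails with `PermissionError` regardless of how the prompt is phrased. The boundary lives in how the agent is assembled rather than in the model's judgment.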

📝 Related Reads More on building modularity for AI Agents and Data Supply

Risks associated with multi-agent systems

The risks often compound when agents collaborate or rely on each other, creating a cascading effect from a single system failure. As these agents grow more capable, the trade-offs between efficiency and risk become sharper.

Deploying multiple AI agents at scale introduces risks not seen in isolated systems. Agents can unintentionally interact, creating destabilizing feedback loops. Content recommendation algorithms, like those on social media, are a familiar example of such loops.

They optimize for engagement metrics, such as clicks and time spent, often creating ‘echo chambers’ or ‘filter bubbles.’ This reinforces user biases, limits diverse exposure, and can amplify misinformation or polarize opinions, impacting user experience and society.

Agents sharing foundational models inherit vulnerabilities, magnifying failures across systems. Furthermore, complex agent behaviour can be unpredictable, and competitive pressure may drive the development of agents with anti-social tendencies.

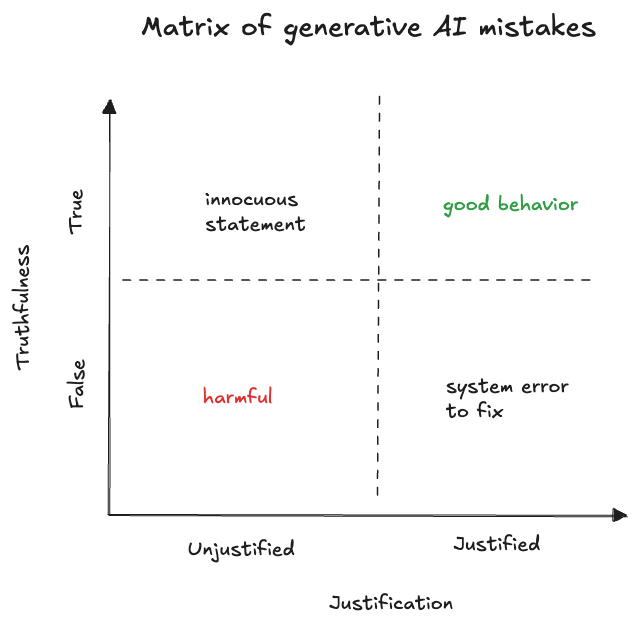

The Risks Associated With the Quality of Responses

Security, safety, and privacy are not the only risks that data governance covers. Governance comprises the holistic means of managing data assets for their usability and reliability, besides security.

Since these agents largely sit on top of language models or LLM interfaces, they are vulnerable to similar risks, such as hallucinations, biases, lack of context, and leakage of sensitive personal data.

Human-agent misalignment is a common scenario in which agents generate responses inconsistent with user expectations due to gaps between training-time objectives and real-world human values.

Beyond the typical LLM’s scope of risks, these AI agents suffer from sycophancy, where they tend to overly accommodate user inputs, even when they are misleading or incorrect. This happens because models often prioritize aligning with user-provided feedback during training rather than maintaining factual accuracy.

In tasks like Principal-Agent scenarios, where the agent represents a user (the principal), differences between the agent's learned objectives and the user’s actual goals can lead to errors or ethical conflicts, particularly in situations with incomplete information.

As humans interact with AI agents, they usually communicate using prompts—essentially the instructions or questions we give the AI. The biggest risk here is something called adversarial attacks. These are sneaky, deliberate attempts by bad actors to trick the AI by feeding it misleading or carefully crafted prompts.

Though this might sound like a security threat (which, of course, it is), such attacks affect the quality of responses, too. The AI might get confused and give wrong or biased answers.

The Umbrella Risk: A Lack of Visibility and Control Over Agents

Visibility entails the detailed knowledge of who, when, how, and where the AI agents are used. In other words, the entire lineage of AI actions, data movement, user interactions, and impact on the far right of the data chain. A lack of visibility in AI agents limits our understanding of how, why, and where these agents are used, as well as their potential impact.

As we work towards a solution to these overarching risks, the aspired state for AI agents involves end-to-end visibility that offers organisations:

- complete control

- heightened governance

- accountability

The key question here is: when organisations leverage these agents, what is the ideal and fundamental framework or approach they should adopt for building them?

The key is to ensure that wherever the agents are being deployed, the organisations have a great deal of control over them. In a world where AI agents operate autonomously, governance risks can feel like navigating a fog. But what if there’s a design principle that cuts through the uncertainty?

Data Developer Platform: A Design Principle To Enable Governance With End-to-End Visibility

The data landscape is moving towards unified infrastructure quite fast. And, at this juncture, leveraging a data developer platform (a rising community-driven unified infrastructure standard) provides the building blocks for governance and monitoring features.

To attain the ideal scenario of ensuring governance, we believe in merging the secure practices of software development and data engineering through key functionalities of a data developer platform (DDP) that surfaces the required tools for self-service across multiple layers of data citizens.

Modular approach of a DDP + Secure software dev practices = Self-Served Decentralised Governance for AI Agents Across Domains

An effective DDP enables developers to streamline the process of deploying the AI Agents while addressing their governance risks.

So imagine having an agentic system named Batman (comprising custom agents and a multi-agentic workflow) that is integrated and deployed through a DDP. Now, when users log into the DDP, Batman inherits all governance policy models, roles, and permissions enforced by the platform for the specific domain or use case Batman hails from. This ensures that Batman operates within the same governance framework applied across the platform as any other module.

How Does DDP Enable Governance for Agents?

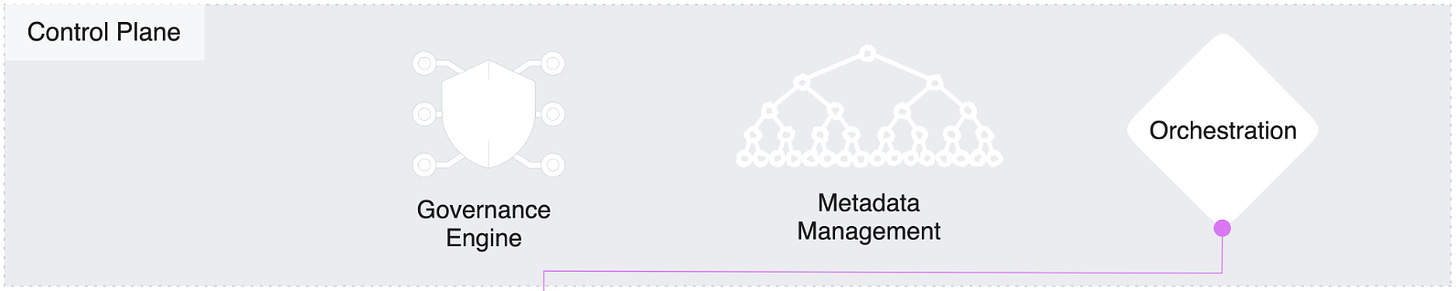

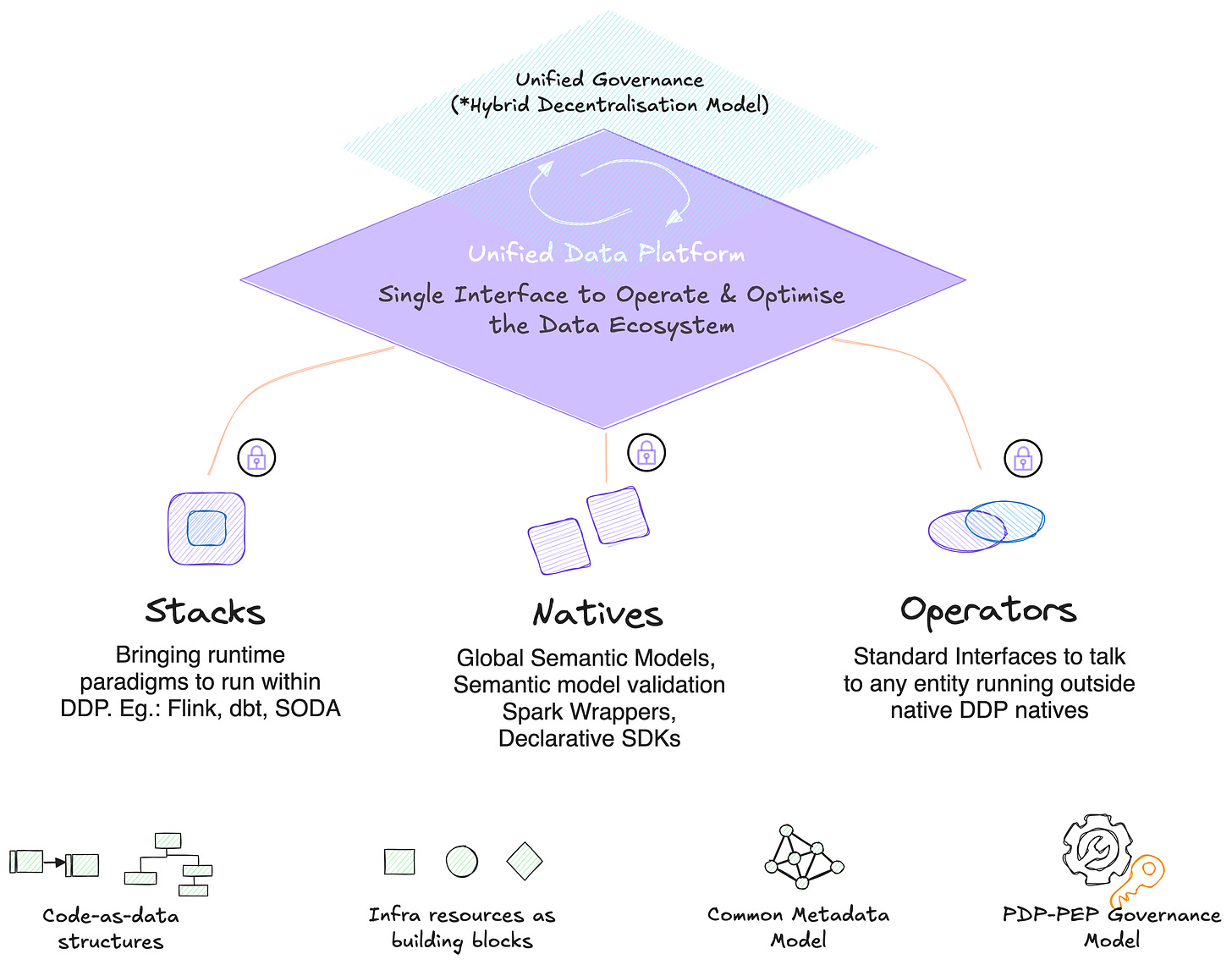

A DDP comprises a central control plane that acts like a command centre, establishing your rules, policies, and standards to maintain order and coherence across the entire platform. Think of it as a governance model (much like a metadata model). This control plane enables the governance of the entire data ecosystem through end-to-end unified management.

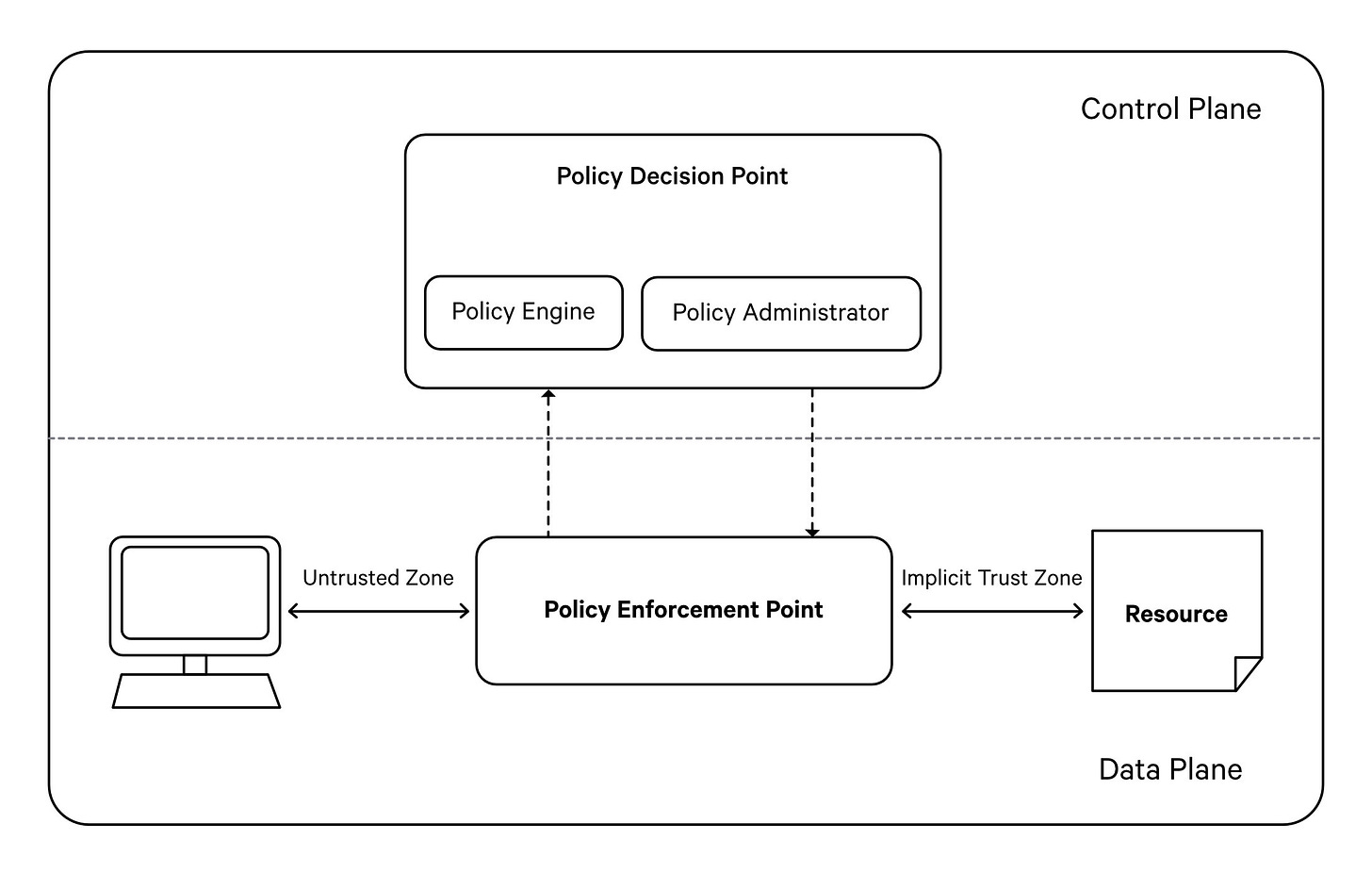

What about decentralised governance?

Consider the PDP-PEP governance model: a hybrid decentralisation approach that inherits the best of both worlds while stabilising the demerits of each pure-breed model (centralisation, decentralisation).

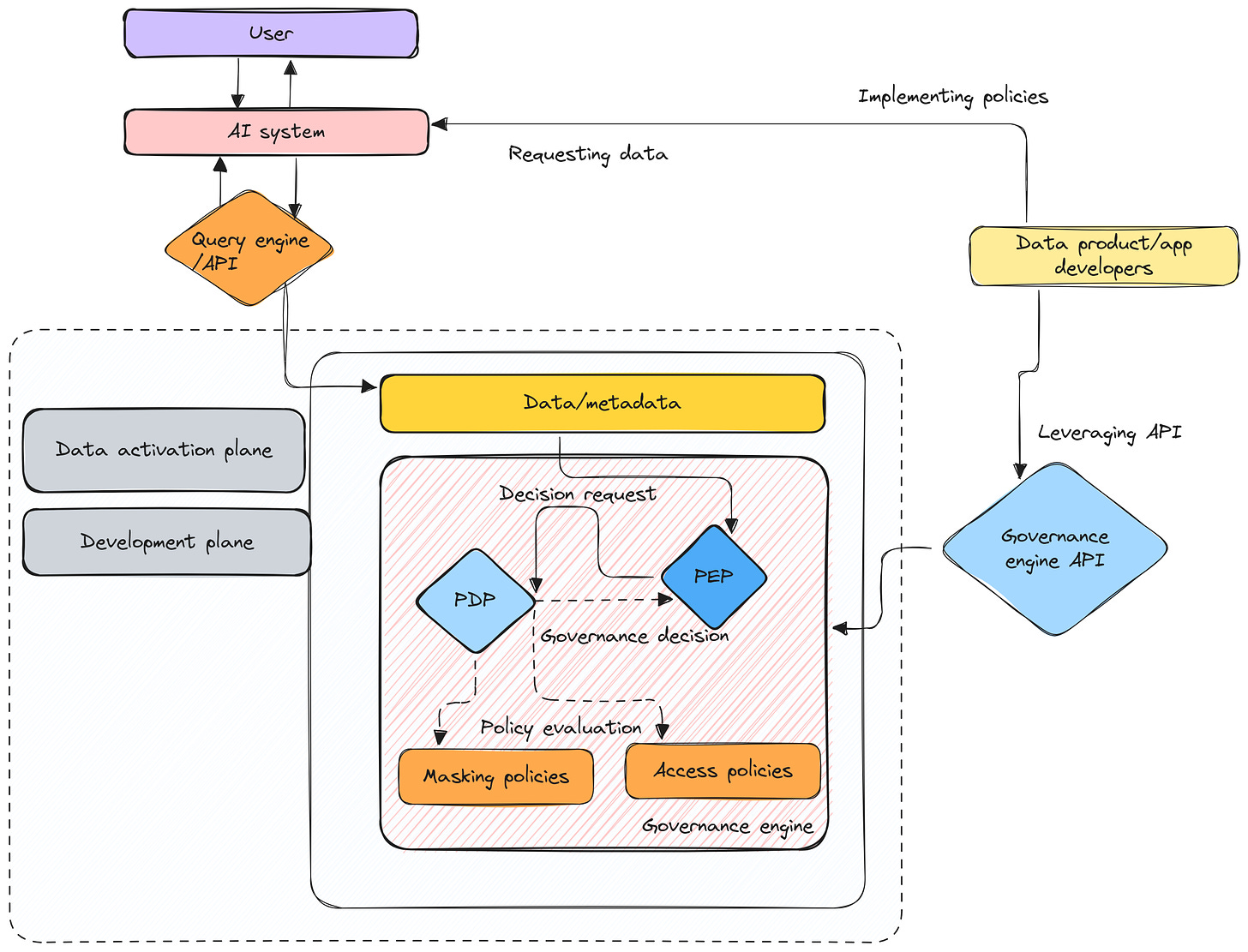

The data developer platform’s native governance engine implements ABAC by acting as a PDP or a Policy Decision Point and transmitting decisions to PEPs or Policy Execution Points.

- PDP, or Policy Decision Point, is where the system decides the course of action with respect to a policy. It is the central and only point of authority for any and all governance across the data ecosystem. A data ecosystem cannot have more than one PDP.

- PEP, or Policy Execution Point (called a Policy Enforcement Point in the XACML standard), is where the policy is implemented. A PEP can exist both internally as well as externally in the context of the data platform. There can be multiple PEPs operating in the data ecosystem based on whichever app, engine, resource, or interface wants to implement certain policies.

A POV of a Modular Data Stack Modelled after the DDP Standard | Source: Authors X MD101

PEPs exist across domains, data products, and use cases, enabling a localised point of authority, while the PDP sits in the central control plane, enabling end-to-end visibility as well as a superior layer of global authority (handling clashes, context, and reusability of modular policy models).
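The split between one deciding authority and many local enforcers can be illustrated with a small Python sketch. This is not how any particular DDP implements its governance engine. The class names, the rule format (plain predicates over request attributes), and the sample rules are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    subject: dict   # attributes of the caller, e.g. {"role": "loan_officer"}
    action: str
    resource: dict  # attributes of the data, e.g. {"type": "credit_profile"}


class PolicyDecisionPoint:
    """Single central authority: evaluates attribute-based rules."""

    def __init__(self, rules):
        self.rules = rules  # list of predicates: Request -> bool

    def decide(self, request: Request) -> bool:
        # Deny by default; allow only if at least one rule matches.
        return any(rule(request) for rule in self.rules)


class PolicyExecutionPoint:
    """Local enforcer: asks the PDP, then runs or blocks the operation."""

    def __init__(self, pdp: PolicyDecisionPoint):
        self.pdp = pdp

    def execute(self, request: Request, operation):
        if not self.pdp.decide(request):
            raise PermissionError("denied by policy")
        return operation()


# Hypothetical rules: anyone may read account summaries,
# only loan officers may read credit profiles.
rules = [
    lambda r: r.action == "read" and r.resource["type"] == "account_summary",
    lambda r: (r.action == "read"
               and r.resource["type"] == "credit_profile"
               and r.subject.get("role") == "loan_officer"),
]
pdp = PolicyDecisionPoint(rules)
chat_pep = PolicyExecutionPoint(pdp)       # enforcement inside an agent
workbench_pep = PolicyExecutionPoint(pdp)  # enforcement inside a query tool
```

Both enforcement points consult the same `pdp`, so a rule changed centrally takes effect everywhere at once, while each app or agent still blocks the call locally.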

Catering to Governance Needs With Role-Based Access Policies

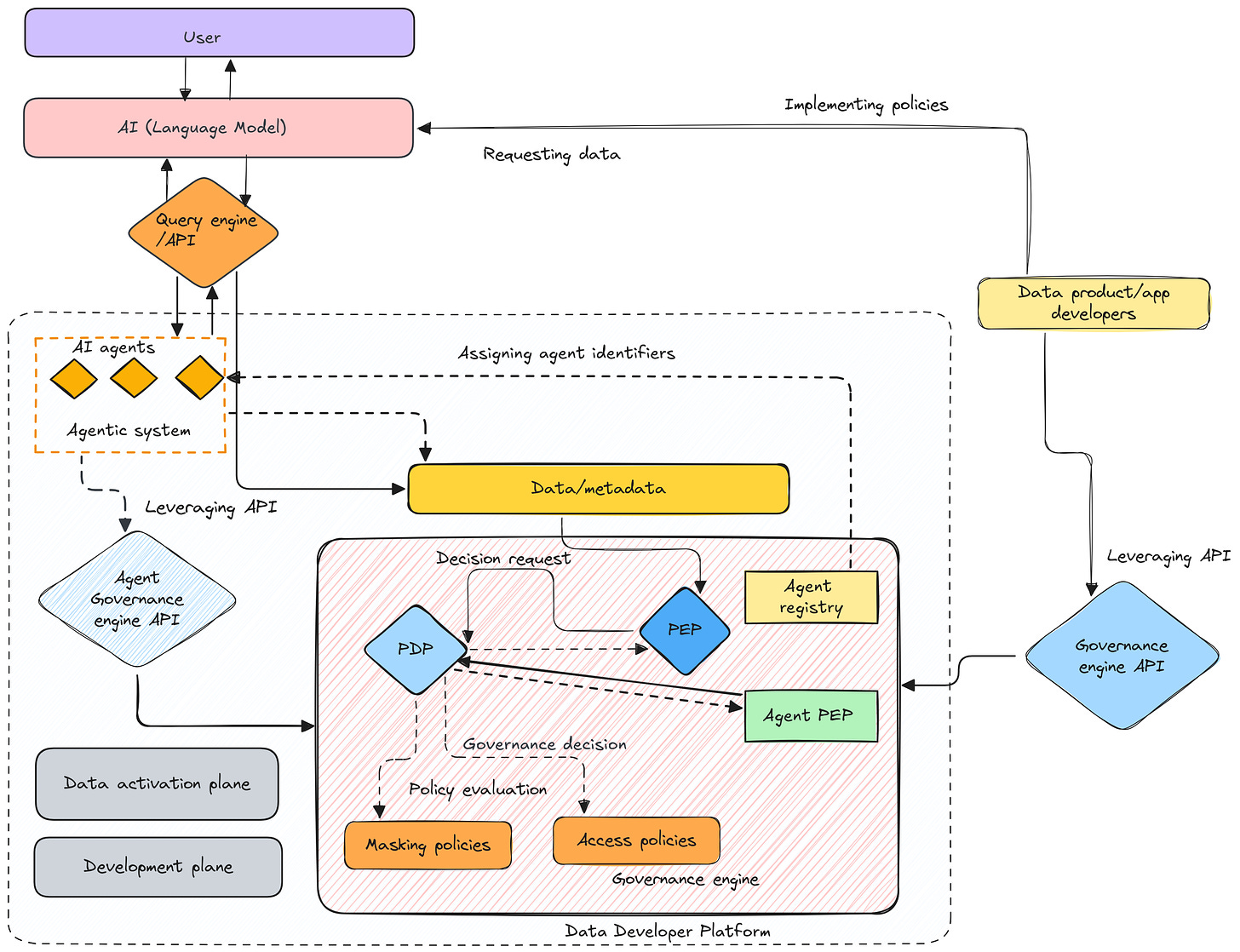

The governance engine in a DDP has tight policies for access to define who can access data and the permissible actions, aligning interactions with organizational and compliance requirements. The governance engine acts as the PDP, providing APIs that developers use to integrate governance directly into their applications. Batman relies on PEPs to implement these policies during execution, ensuring adherence to access and security rules consistently across workflows.

By using Policy Decision Points (PDP) for centralized governance and Policy Execution Points (PEP) for enforcement, the platform ensures distributed, consistent, yet unified policy implementation.

How a Data Developer Platform (DDP) Enables Governance for Agents | Source: Authors

A Real-World Platform Implementation of the DDP Standard (Governance-Specific) | Source: DataOS.info

A DDP can enforce agent-level policies using platform-specific controls, such as identifying whether the action came from a human user, a bot, or an external service to reduce ambiguity and prevent unauthorized agents from accessing sensitive data.

In a similar banking scenario, a customer service bot can access basic account details, while loan officers access credit profiles.

Clubbing the agent-identifier approach with the DDP

Agent identifiers help mark whether and which agent/agents are involved in the given interaction, thereby helping ensure transparency in the workflows. Within a DDP this could translate into tracking who accessed the platform, what code was executed, and ensuring all activity is logged for auditability—critical for enforcing data access policies and ensuring accountability.
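As a rough illustration, an audit entry can carry both the type of actor and the identifiers of any agents involved, so that later queries can reconstruct what a given agent touched. The Python sketch below uses an in-memory list as a stand-in for the platform's audit store. The field names and helper functions are hypothetical.

```python
from datetime import datetime, timezone

AUDIT_LOG = []  # stand-in for the platform's audit store


def record(actor_type: str, actor_id: str, action: str, agent_ids=()):
    """Append one audit entry, tagging any agents involved."""
    entry = {
        "at": datetime.now(timezone.utc).isoformat(),
        "actor_type": actor_type,  # "human", "agent", or "service"
        "actor_id": actor_id,
        "agent_ids": list(agent_ids),
        "action": action,
    }
    AUDIT_LOG.append(entry)
    return entry


def actions_involving(agent_id: str) -> list:
    """All logged actions in which the given agent took part."""
    return [e for e in AUDIT_LOG if agent_id in e["agent_ids"]]
```

A human request that Batman fulfils would be recorded with `actor_type="human"` and `agent_ids=["batman"]`, which keeps the human accountable party and the acting agent visible in the same entry.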

The idea of expanding the scope of DDP-facilitated governance to enable the agentic systems to access and enforce governance policies without integration into the core environment | Source: Authors

Securely Storing Credentials With Secret and Access Management Resources of a DDP

To avoid hard-coded credentials, a DDP securely stores the API keys, access tokens, and other secrets in centralized, encrypted systems. The org can leverage the same framework to manage every additional access key and credential required by the agents.

As discussed, governance of AI agents extends to the LLM space as well, so it is critical to manage LLM credentials securely. For example, if Batman requires OpenAI or other LLM APIs, these sensitive keys aren’t hardcoded but stored securely in a dedicated system vault. This approach prevents credential leaks and aligns with best coding practices.

Hence, the policies govern token usage and rotation, ensuring AI agents can securely access LLMs and external APIs while maintaining compliance with organizational standards. When a new customer or user is onboarded to DDP, they receive credentials such as usernames, passwords, and tokens. These credentials are managed securely, ensuring consistency and compliance across all integrated modules like Batman.
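On the agent side, the practical effect is that code asks for a secret by name at runtime instead of embedding its value. The Python sketch below assumes the platform injects secrets as environment variables, which is only one of several possible delivery mechanisms. `get_secret`, `redact`, and the `LLM_API_KEY` name are illustrative, not part of any DDP or vendor API.

```python
import os


class MissingSecret(RuntimeError):
    """Raised when a required secret has not been provisioned."""


def get_secret(name: str, env=os.environ) -> str:
    """Fetch a secret injected at runtime instead of hard-coding it."""
    value = env.get(name)
    if not value:
        raise MissingSecret(f"secret {name!r} is not configured")
    return value


def redact(value: str) -> str:
    """Safe form for logs: mask all but the last four characters."""
    return "*" * max(len(value) - 4, 0) + value[-4:]
```

An agent would call `get_secret("LLM_API_KEY")` when it builds its LLM client and log only `redact(...)` of the result. Rotating the key then becomes a platform operation that requires no change to the agent's code.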

Ensuring Unified Observability and Monitoring

An attempt to improve visibility for AI agents

Simply creating an agent, deploying it to customers, and collecting occasional feedback isn't sufficient. There needs to be a system that continuously observes the conversations—tracking inputs, outputs, and the quality of responses. This system ensures we can assess whether the agent's performance is improving, stagnating, or degrading over time.

Observability mechanisms of a DDP can track AI agent activity and policy adherence in real-time. Metrics from endpoints monitor API calls and data usage, ensuring compliance and flagging anomalies.

Developer action required: simply instantiating an already available DDP monitor resource and tagging it with the Batman bundle.

For example, model iterations or queries made by agents are logged and audited to identify compliance gaps or misuse. Again, anomaly alerts highlight unusual activity, like multiple failed login attempts, to prevent unauthorized access.

The capability of a DDP to enable metadata-driven monitoring (e.g., logging each query or runtime operation) ensures every agent or developer's action is trackable. This addresses agent misuse concerns by ensuring a clear trail of accountability.
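The failed-login alert mentioned above reduces to counting events per actor inside a time window. The Python sketch below shows that logic in isolation. It is not the DDP monitor resource itself, and `FailedLoginMonitor`, its threshold, and its window are hypothetical choices for illustration.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flags an actor once failures within a time window reach a threshold."""

    def __init__(self, threshold: int, window_seconds: float):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self.failures = defaultdict(deque)  # actor -> failure timestamps

    def observe(self, actor: str, timestamp: float, success: bool) -> bool:
        """Record one login event; return True when it should raise an alert."""
        if success:
            return False
        events = self.failures[actor]
        events.append(timestamp)
        # Forget failures that are older than the window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) >= self.threshold
```

The same pattern extends to other agent metrics, such as queries per minute or denied policy decisions, by swapping what counts as an event.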

📝 Editor’s Note: A more detailed reference of monitor as a resource (real-world implementation of DDP’s observability model)

The Monitor Resource is an integral part of DataOS’ Observability system, designed to trigger incidents based on specific events or metrics. By leveraging the Monitor Resource alongside the Pager Resource for sending alerts, DataOS users can achieve comprehensive observability and proactive incident management, ensuring high system reliability and performance. ~ DataOS.info

Final Note: It’s How You Bundle Your Agent That Matters!

As SaaS providers, it’s not just about building great software; it’s about how you encapsulate it and offer it to your consumers. Think of it as gift-giving—security layers, governance policies, and easily operable customer interfaces are the ribbon and bow that make it irresistible. If it’s not bundled right, your customers won’t see the value, no matter how great the gift inside is!

Once you've built a software application like an AI agent, the question arises: How do you secure it? How do you safely expose it to customers in their environments?

This requires layers of security—permission wrappers, filters, and robust policies—elements that aren't central to building agents but are essential for offering them securely.

A DDP simplifies this process. By providing out-of-the-box governance, security, and flexibility to experiment with multiple LLMs, DDPs remove the operational complexities, allowing developers to focus purely on developing logic and agent capabilities. It simplifies decisions like fine-tuning models or switching LLMs, ensuring simple one-hop integration and secure deployment while aligning with org, domain, or use case standards.

Originally posted at:
