Using Federated Queries to Query Data from AWS RDS to Redshift

💡 Federated queries can be used to connect our transactional databases to data warehouses for analytics sake

October 31, 2022

💡 Federated queries can be used to connect our transactional databases to data warehouses for analytics sake. This blog shows how to run federated queries from Postgres database to Amazon Redshift.

Introduction

To first understand federated queries, we need to first understand Online Transactional Processing (OLTP) and Online Analytical Processing(OLAP).

Let’s take an example. Imagine you have an e-commerce application where people can make orders online and the orders will be delivered to them. Anytime a user makes an order, it goes to the orders table of the company database. This type of database is responsible for transactional-oriented applications. It captures, stores and processes transactional data of the organization in real-time. This type of database is responsible for the efficient running of an organization. This database is called Online Transactional Processing. Examples of OLTP systems are AWS RDS database, Postgres SQL databases, and traditional RDBMS systems.

However, if you use this database for analysis, it will slow down the operations of the organization. OLTP is not the best option when it comes to analysis and performing complex joins on tables. For these types of operations, we use Online Analytical Processing (OLAP).

OLAP is more efficient for complex queries, complex joins, and general analytics. An example of an OLAP system is a data warehouse. Examples of data warehouses are AWS Redshift, Snowflake, Big query e.t.c.

Basically, federated queries are ways to send a query or a statement to an external database and get the result in the form of a temporal view or table. In this example, you have a Postgresql OLTP database. Based on the explanation above, this type of database is not a good choice for analysis because complex select statements and complex joins slow down your application. Data warehouses are perfect when it comes to running analytical queries. For this reason, you can perform federated queries by connecting some kind of data warehouse or data lake to your remote Postgresql database. You can then run your analytical queries on the data warehouse.

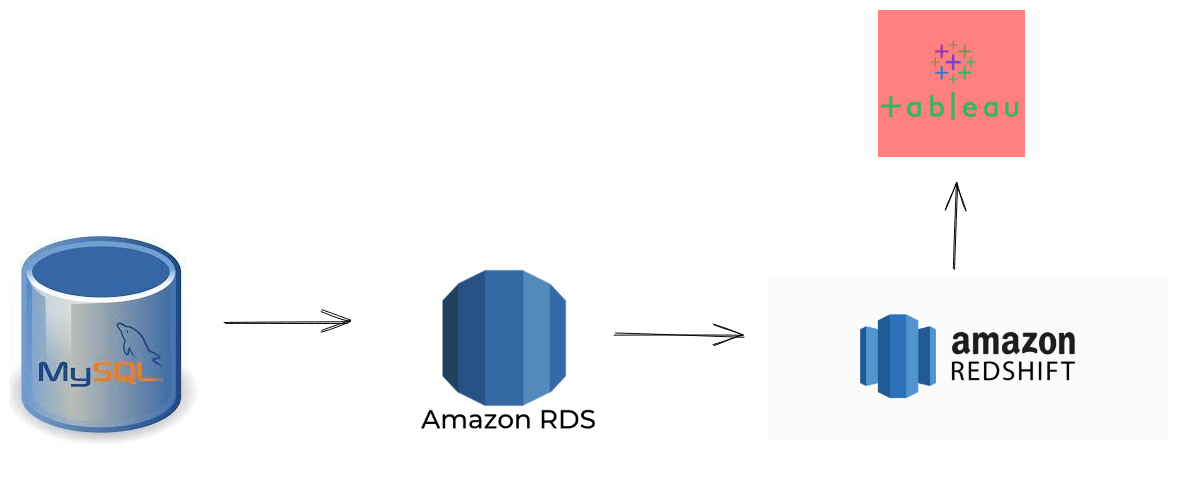

WHAT ARE WE GOING TO BUILD

We have a remote SQL database that contains tables. We are going to connect an AWS RDS database to the remote SQL database. We are then going to use federated queries to connect our RDS to Amazon Redshift.

From Redshift, we can now connect our data to Tableau. However, the connection to Tableau would not be done in this tutorial. Watch out for the new series!

PREREQUISITES

For this to work, you need to have the following:

- An AWS account

- A database in your local server: You can have Mysql or Postgres. In this tutorial, we will be making use of Mysql.

CREATING A SECURITY GROUP

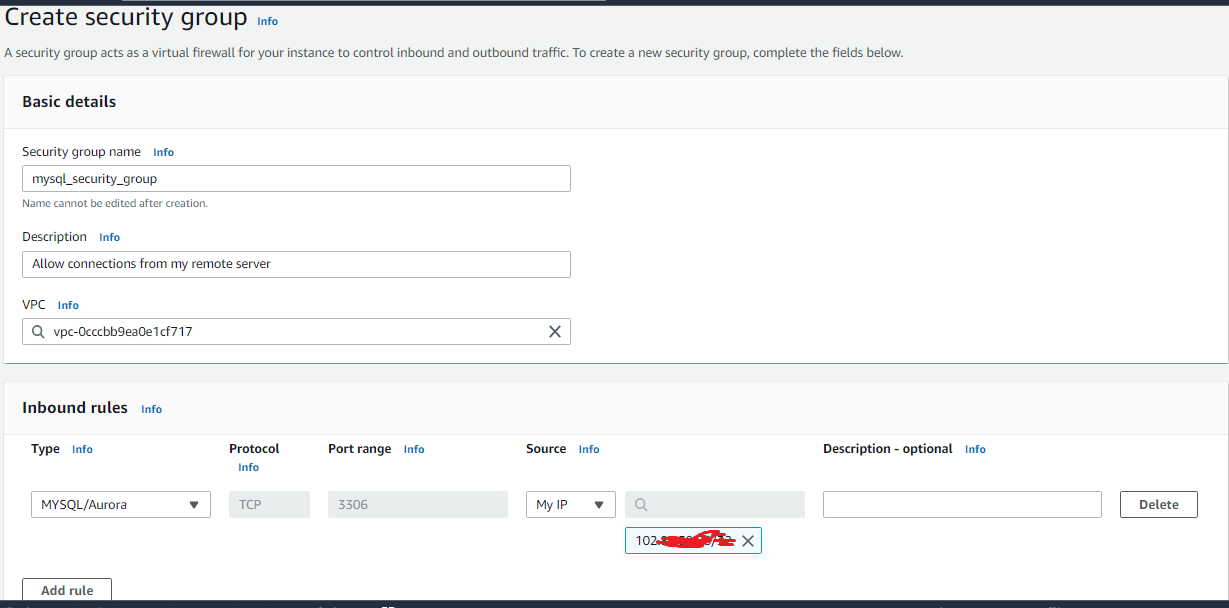

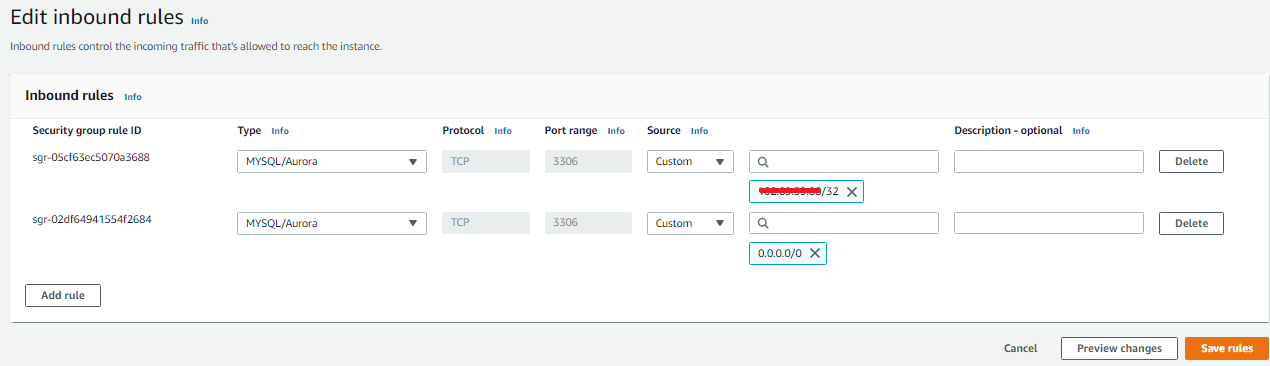

First, we create a security group. This security group will allow us to specify who we want to connect to our network. In this tutorial, we want our Mysql database in our remote server to connect to our network.

To create a security group, we go to the AWS console. We then search EC2 on the search bar. On the left pane, there is a section called Network and Security.

We then click on Security Groups. On the top right corner, we click on Create Security Group.

Click to zoom

In this case, we created a security group with the name mysql_security_group. We also created an inbound rule to allow connections from my IP address.

CREATING THE RDS DATABASE INSTANCE

After creating the security group, we want to create an RDS instance for our remote database. This RDS instance will enable us to connect our remote database to AWS database server.

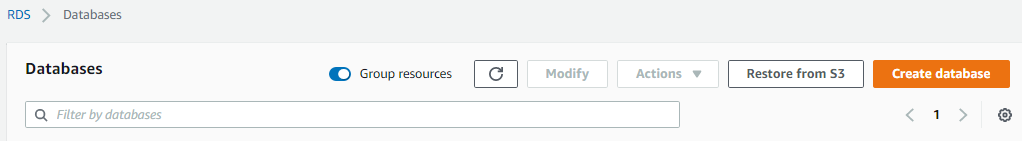

To create an RDS database instance, we go to AWS console and search on RDS. We then click on Create database

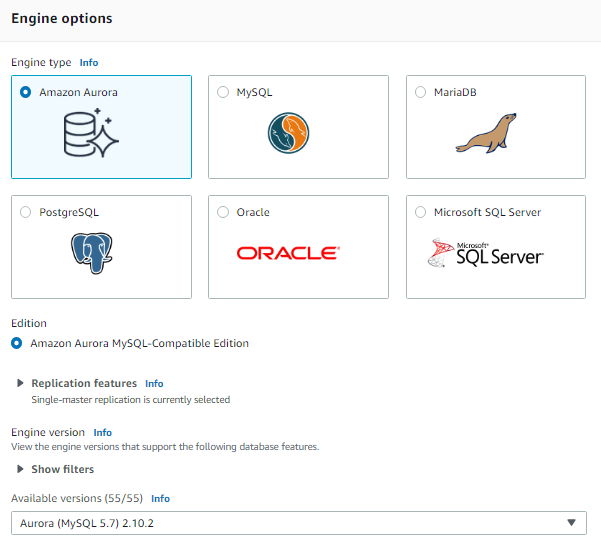

After selecting create database, we then choose the database engine we want to connect to.

Here, we want to connect to MySql database engine. After specifying the database engine, we then specify the admin credentials of the database as shown in the image below.

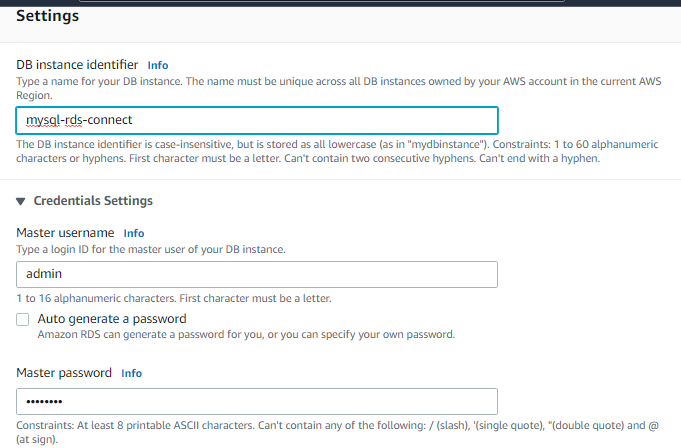

Here, we specified the database identifier as mysql-rds-connect. We also specified the credentials such as the master username and master password.

We then head over to Connectivity.

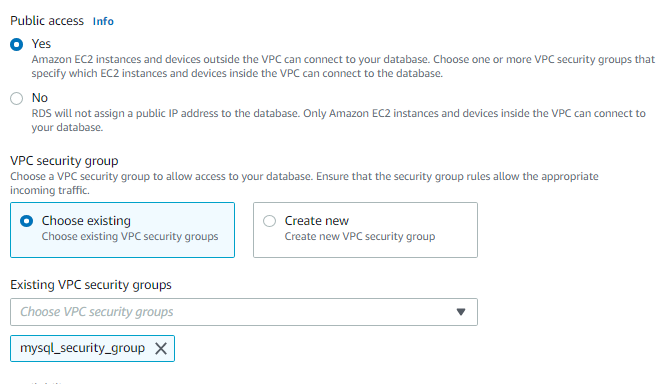

Here, we enabled public access and specified our VPC security group. Without enabling public access, our remote server would not be able to communicate effectively with the RDS and Redshift cluster. We then click on create database.

This will take a while to configure. After about five minutes, our database will be successfully created.

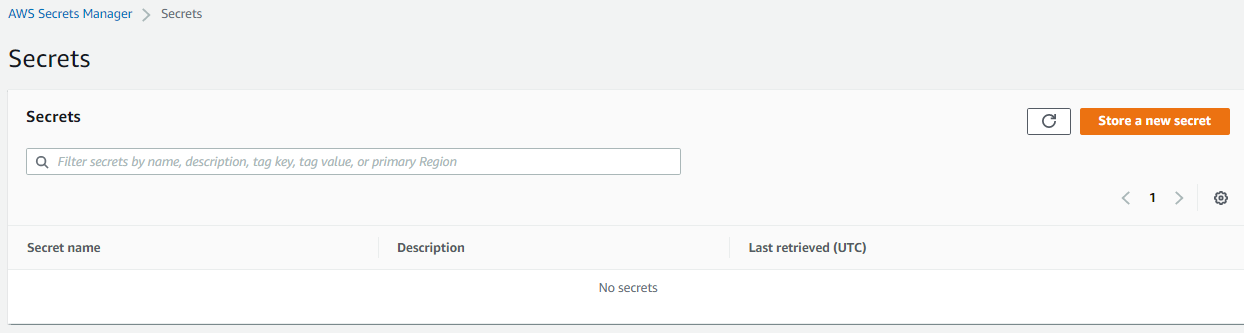

CREATING A SECRETS MANAGER

After creating a RDS, we want to create a secrets manager. For this, we go to AWS console. We then search for Secrets Manager. This Secrets Manager allows us to securely store private and sensitive information such as API credentials, passwords, database information and so many other kinds of sensitive information.

Creating a secret… Click to zoom!

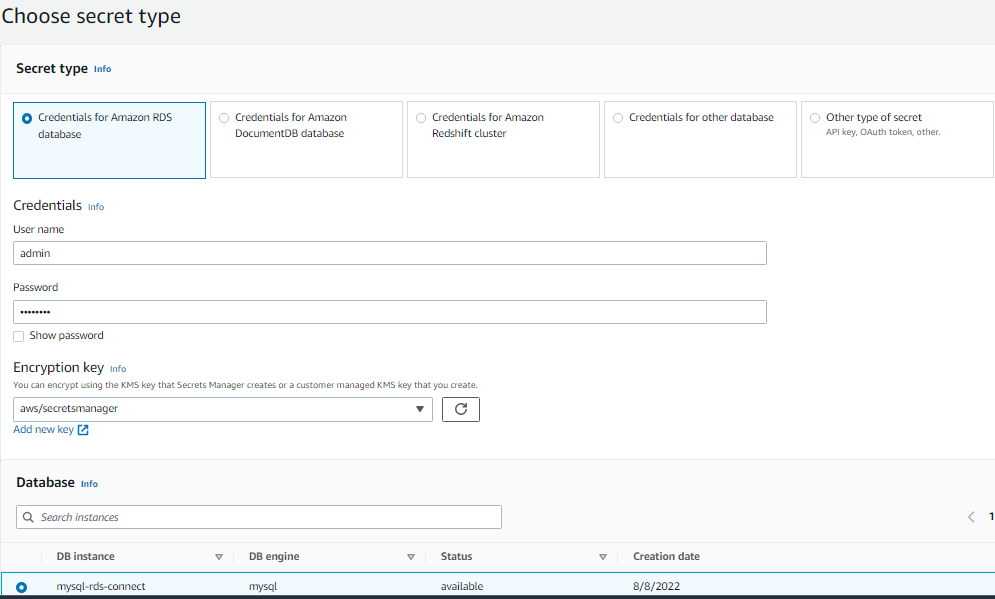

We then click on Store a new secret. After clicking, we have to specify the RDS database we created earlier and specify the login credentials for the database.

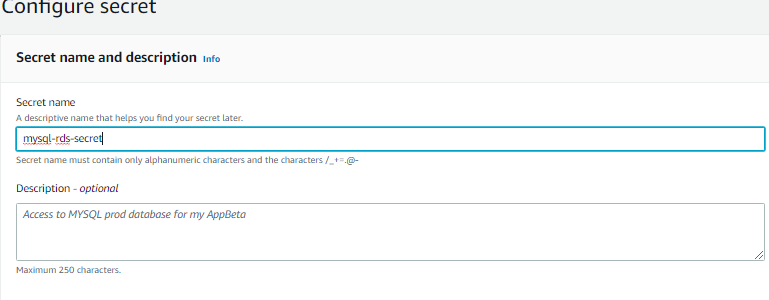

After specifying the credentials, we give our secret a name.

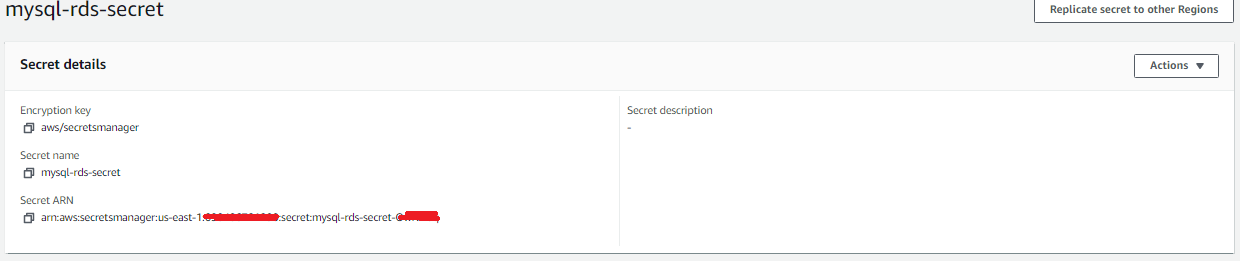

In this case, we gave our secret a name mysql-rds-secret. We then create this secret and store it.

After creating our secret, we will be given a Secret Arn. This secret arn will be used to connect to an IAM role which we will use when querying our data to the Redshift cluster.

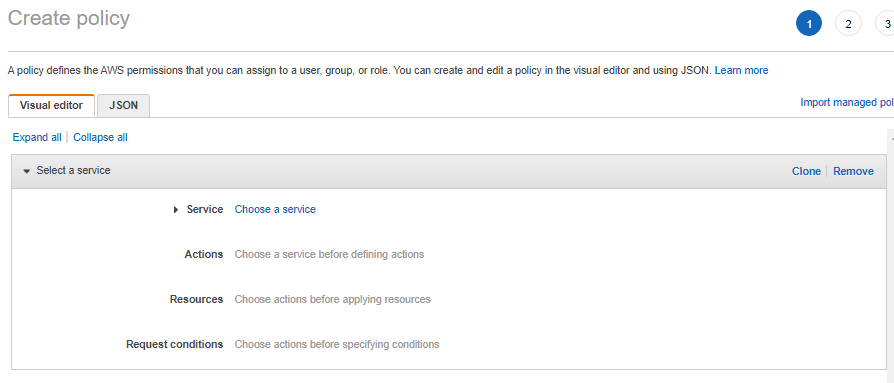

CREATING AN IAM POLICY

Here, we will create an IAM POLICY that has permission to read and write to our secrets manager. To create an IAM policy, we go to IAM Management Console and click on policies. We then create a policy.

We then click on the JSON tab.

{ "Version": "2012-10-17", "Statement": [ { "Sid": "AccessSecret", "Effect": "Allow", "Action": [ "secretsmanager:GetResourcePolicy", "secretsmanager:GetSecretValue", "secretsmanager:DescribeSecret", "secretsmanager:ListSecretVersionIds" ], "Resource": "<your secret arn>" }, { "Sid": "VisualEditor1", "Effect": "Allow", "Action": [ "secretsmanager:GetRandomPassword", "secretsmanager:ListSecrets" ], "Resource": "*" } ]}

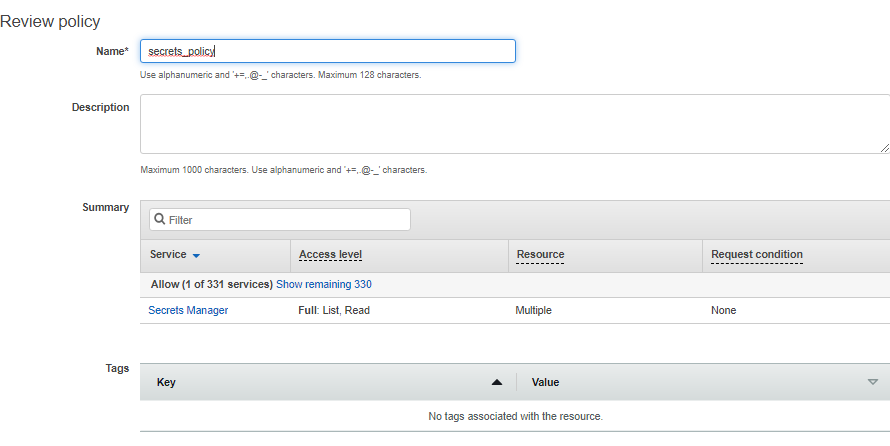

We replace “ <your secret arn> “ with our secret arn which we created earlier. We then give our policy a name and create it.

Here, we created the secrets policy with the name secrets_policy.

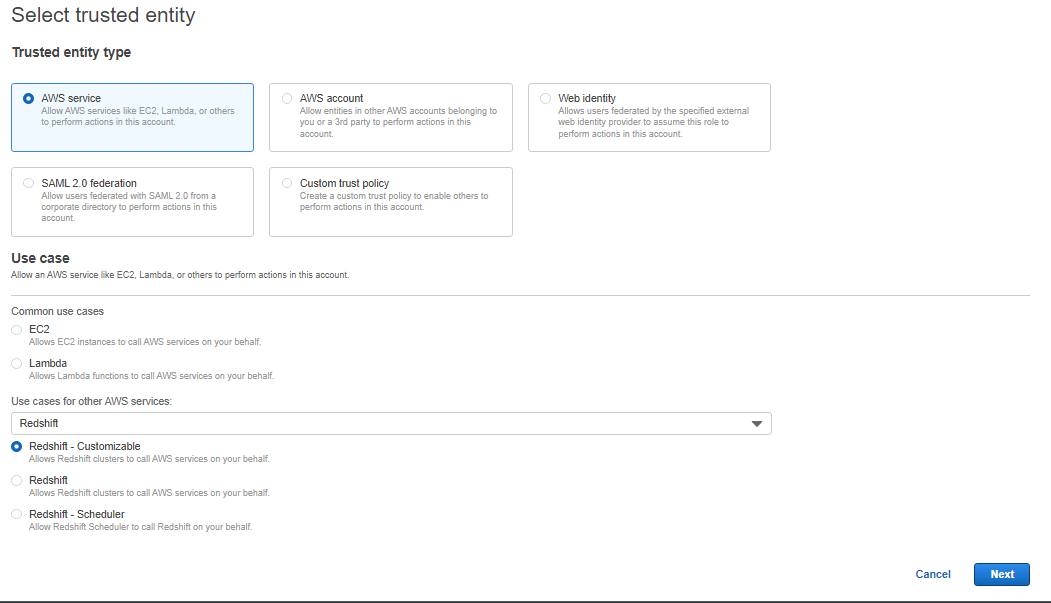

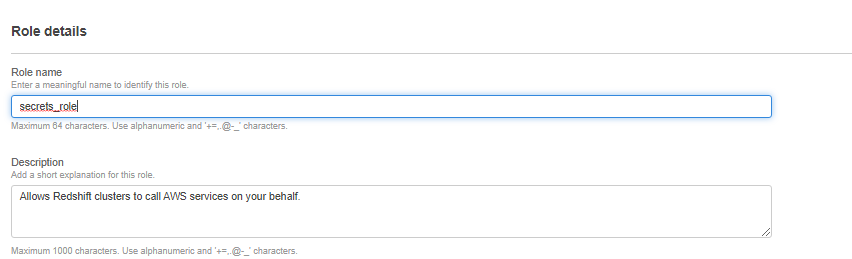

CREATE AN IAM ROLE

Here, we will attach the secrets_policy to an IAM role. We go to IAM Management console and click on roles. We then click on create role. We then select Redshift customizable as our AWS service. We do this because we will use it when we create our Redshift cluster to communicate to our RDS.

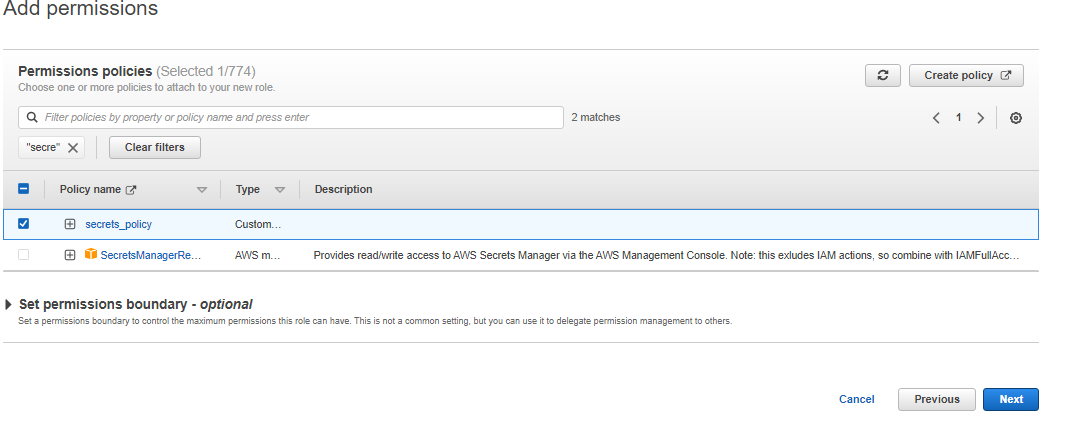

We then attach the permissions we created in the secrets_policy we created earlier.

We then select the name of our role.

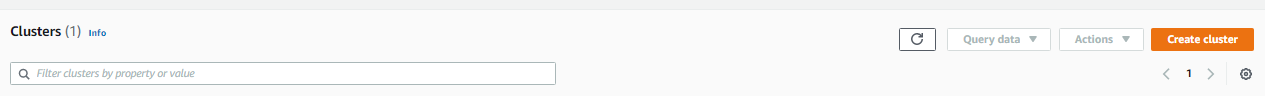

CREATING A REDSHIFT CLUSTER

First, we want to create a Redshift cluster. We will need this cluster to run federated queries from our RDS database to Redshift.

We go to AWS console and search for Redshift. We then go to clusters section and create cluster.

We named our cluster redshift-cluster-1.

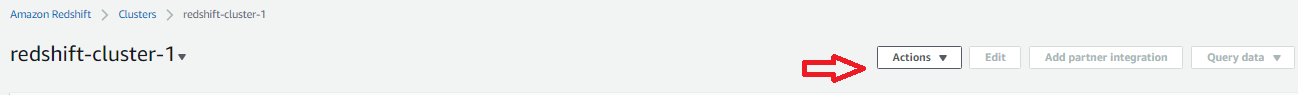

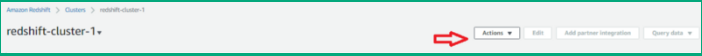

After creating our cluster, we want to enable public access to enable our remote database server to communicate with Redshift. For this, we go to the cluster we created and click on actions.

We then enable modify publicly accessible setting.

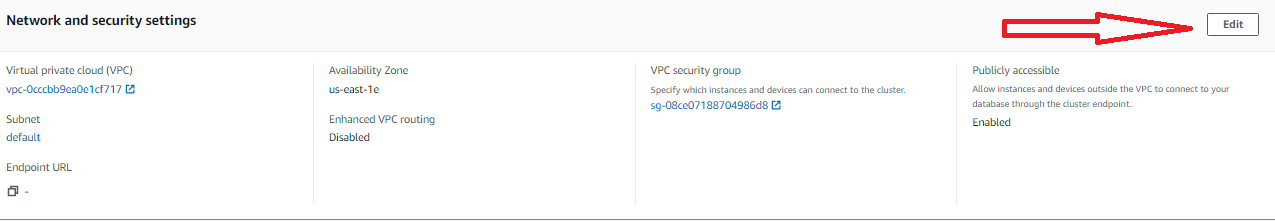

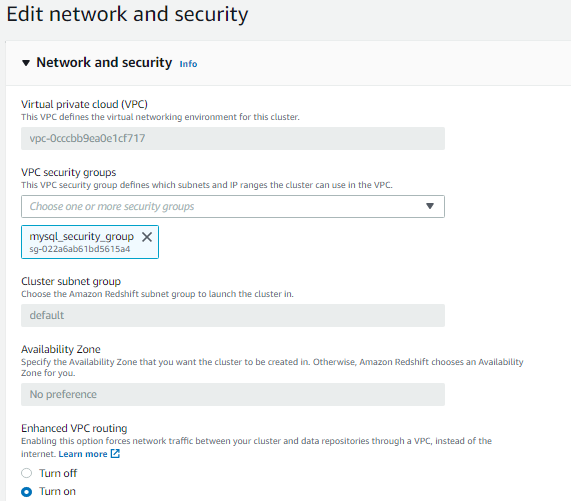

After enabling public access, we also enable VPC routing. This is necessary it allows you to route traffic between servers using private IPv4 addresses. The servers will be able to communicate effectively because they are in the same network. To enable VPC routing, we go to the redshift cluster and go to Properties. We then head over to Network and Security settings and click on Edit.

After clicking on Edit, we see a page as the image shown below.

We then select the security group we created earlier and enable VPC routing.

CONNECTING RDS TO MYSQL

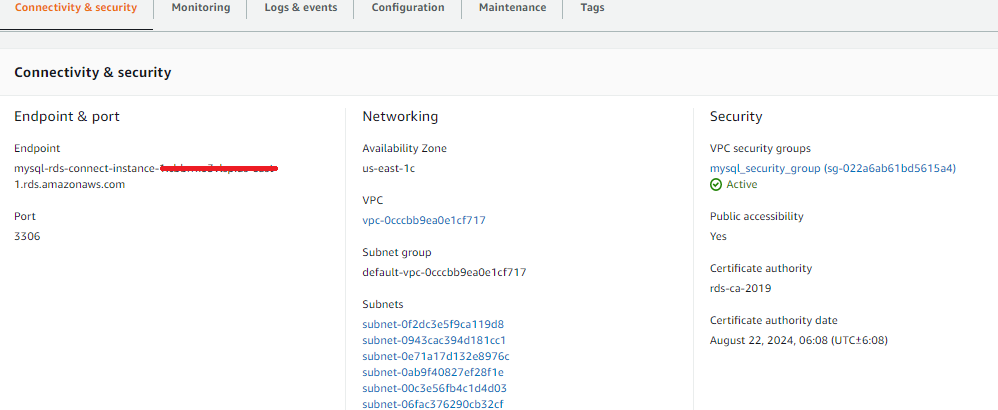

In this section, we will connect RDS database to Mysql database on our remote server. For this, we need to head over to AWS management console and go to the RDS database we created earlier.

We see an endpoint and a port. This is what we will use to connect to our Mysql database.

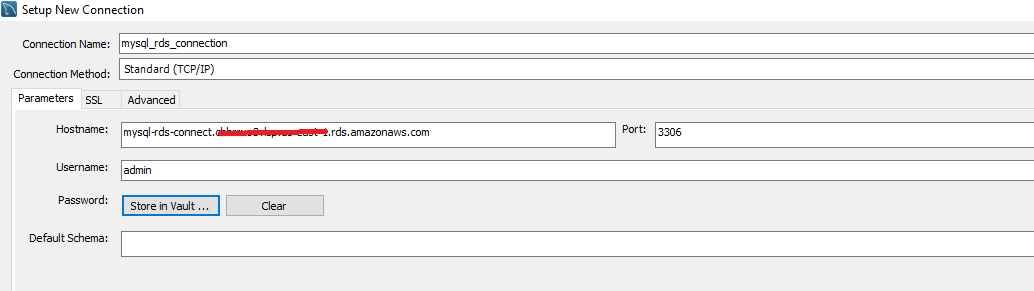

In our Mysql database, we create a new server connection.

We then specify the hostname, our RDS database username, the port number, and the password we created for the RDS instance.

After specifying the credentials, we would have successfully connected our local Mysql database to an RDS instance.

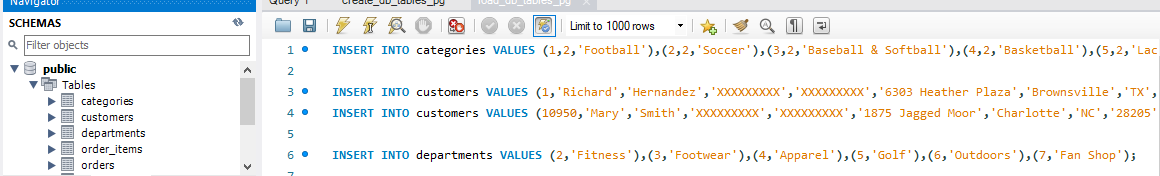

In our Mysql database, we have a public schema. In this schema, we created tables and populated the tables with data.

I created a GitHub repository for the creation of tables and loading the data. The repository is https://github.com/Techtacles/Create-sql-databases. You can clone the repository and create the databases and tables by yourself.

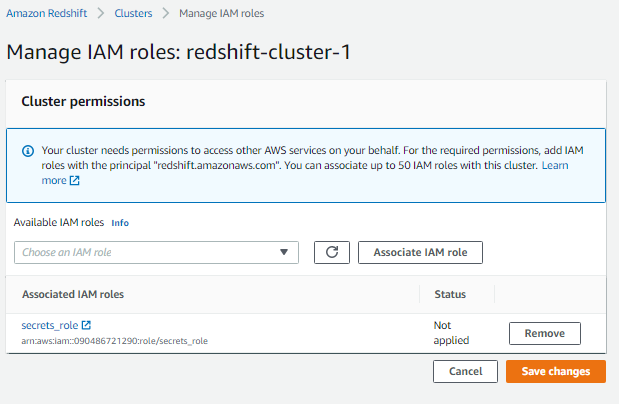

ASSOCIATING THE IAM ROLES TO REDSHIFT CLUSTER

Remember we created an IAM role called secrets_role and attached some policies to it. In this section, we will link that role to our Redshift cluster. To do that, we go to our Redshift cluster and click on Actions.

We then click on Manage IAM roles.

We then associate our secrets_role to our Redshift cluster.

RUNNING FEDERATED QUERIES FROM OUR RDS DATABASE TO REDSHIFT CLUSTER

Now, we want to run federated queries from our RDS database to Redshift cluster.

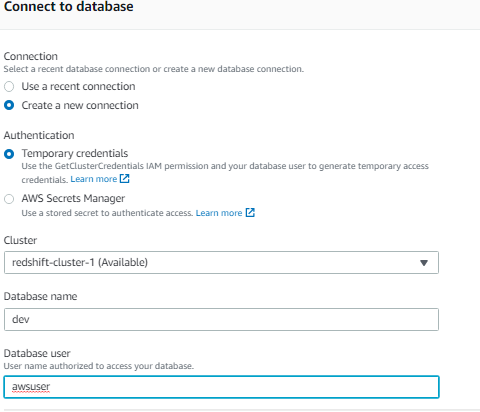

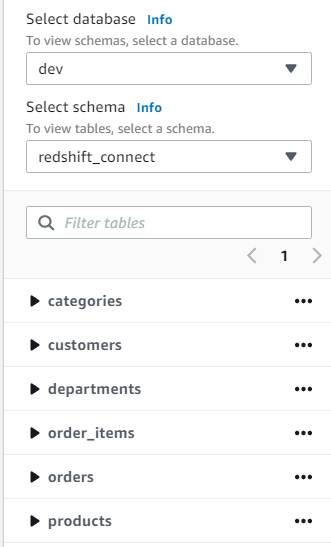

We go to Redshift query editor and connect to our default Redshift database called dev with admin privileges.

After executing this step, we will have access to Redshift query editor.

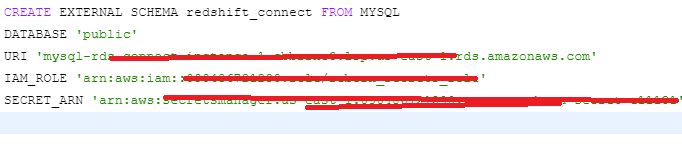

Now let us create an external schema that will hold our federated queries.

Here, we created an external schema redshift_connect which we will use to connect to our RDS. We then specify our SECRET_ARN and the ARN for our IAM role.

After executing this code, we would have a schema below with our tables.

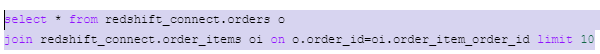

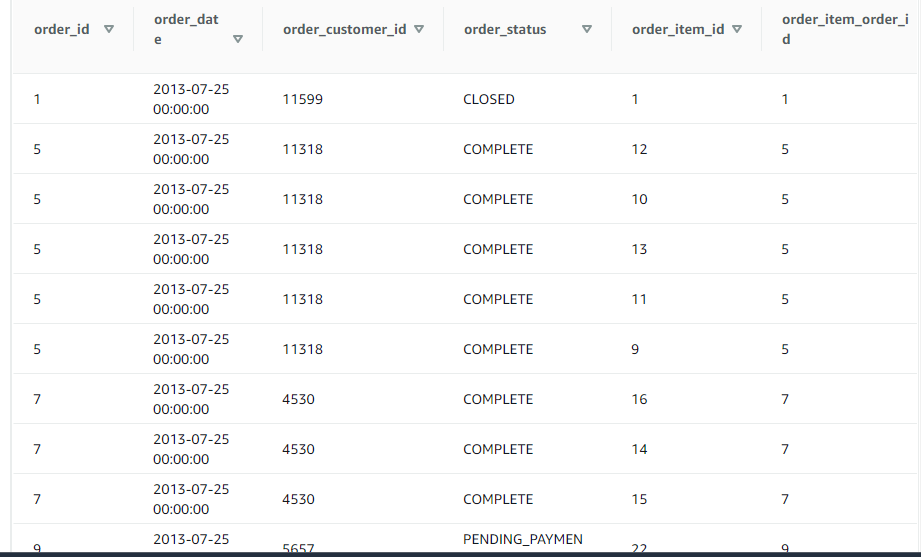

Now, we can run SQL queries and join tables. Let us join our orders table with our order_items table.

Let’s preview the results in our Redshift cluster.

CONCLUSIONS

Now, we have been able to create federated queries to connect our RDS database to Redshift cluster in real-time. We then performed queries on our Redshift query editor.

We can then connect our redshift cluster to visualisation tools like AWS Quicksight, Power Bi, and Tableau.

Author’s Bio: Offisong Emmanuel is a software engineer, focused on data engineering, data science, and machine learning. He builds ETL pipelines and scalable data infrastructure for organizations. He also use statistics and advanced Machine Learning techniques to generate insights from data.

Dive in

Related

39:26

Video

From Intractable to Interactable: Unleashing Sensitive Datasets with Distributed Data Science

By Blaise Thomson • Nov 3rd, 2022 • Views 516

39:26

Video

From Intractable to Interactable: Unleashing Sensitive Datasets with Distributed Data Science

By Blaise Thomson • Nov 3rd, 2022 • Views 516